When Insignificant Results are Significant

When running an A/B test, you typically want to see a clear winner. But that doesn’t always happen. Sometimes results are insignificant. And if you have a well-defined test hypothesis behind why you’re running the test in the first place, then an insignificant result can be just as useful as a clear winner.

On optimizely.com, our Twitter module recently stopped working because of a change to the Twitter API, and fixing it turned out to be a non-trivial endeavor. So I began to wonder: would removing it decrease our key metrics*? If not, we could remove the module altogether and save developer time.

My hypothesis was that removing the Twitter module would make no significant difference in our key metrics, i.e., there would be no clear winner or loser. In other words, the results would be insignificant. If that turned out to be true, I could safely remove the module without hurting conversions.

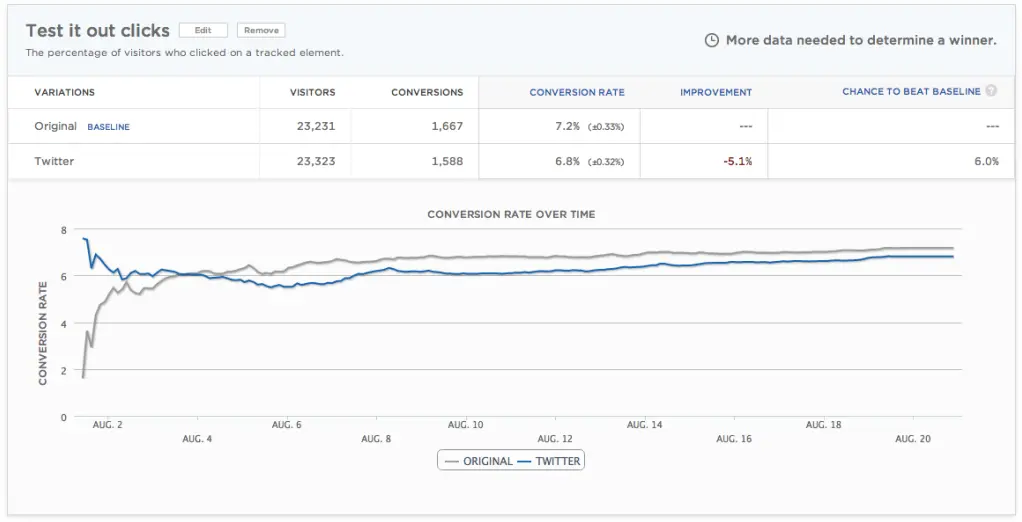

After running the test for a few weeks, the results were clear: the Twitter module had no significant effect on conversions, positive or negative. That meant it was safe to remove, saving hours of re-implementation time. No more code to maintain, and less visual clutter on our homepage.

Results were not significant, but it was clear Twitter wasn’t increasing conversions.
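For readers who want to see what "not significant" means in numbers, here is a minimal sketch of a two-sided two-proportion z-test. It is only an illustration: the function names, the 0.05 threshold, and the visitor and conversion counts are all hypothetical, and this is not necessarily how Optimizely computes its results.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_* are conversion counts, n_* are visitor counts.
    Returns (z statistic, p-value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variations convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def is_significant(p_value, alpha=0.05):
    """True if the p-value falls below the chosen threshold (assumed 0.05)."""
    return p_value < alpha


# Made-up counts for illustration only, not the real experiment data.
# A: original page with the Twitter module, B: variation without it.
z, p = two_proportion_z_test(conv_a=500, n_a=10000, conv_b=510, n_b=10000)
print(f"z = {z:.2f}, p = {p:.2f}, significant: {is_significant(p)}")
```

With these invented counts the two conversion rates are close enough that the test does not reach significance, which is the kind of outcome the hypothesis predicted.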

The key takeaway here is that I formed a hypothesis upfront to explain why I was testing this change, which gave context to the results data. I knew that regardless of the results, I would gain actionable data. Had my experiment yielded a clear winner, for example by showing that the presence of a Twitter module increases conversions, I would have known it was worth keeping on our site. If not, it could be safely removed. In this case, insignificant results were significant.

* Our key metrics are using the editor, signing up for an account, and viewing the pricing page.