For Insound, Testing the 15-Year-Old Search Algorithm Drives 39% Lift

Insound has witnessed a fascinating shift in music buying behavior over the years. Around 2005, vinyl LPs started selling at a greater rate. By 2007 they were outselling CDs. By 2008, they accounted for the majority of all sales. As sales migrated to a new (old) format, Clearhead hypothesized that the search algorithm, which had been the same for 15 years, could be optimized. They were right.

Brian Cahak

As one of the very first online music stores, Insound had a front-row seat to enormous change in Internet buying behavior in general and online music buying habits in particular. Over nearly 15 years of continuous optimization, though, one thing remained constant: search results were driven by an algorithm that weighted popularity heavily. For iconic releases that sold extremely well, popularity would trump the album’s release date.

Around 2005, though, something started gradually changing in Insound’s product mix—vinyl LPs started selling at a greater rate. By 2007 they were outselling CDs. By 2008 they accounted for the majority of all sales. And yet, that stalwart search results algorithm remained constant.

As a result, many searches for popular artists with deep catalogs and long sales histories on Insound returned old CDs as the top result, because those products had built up disproportionate popularity in earlier years. Five years earlier, this would have made perfect sense.

However, as sales migrated increasingly to a new (old) format, vinyl, Clearhead hypothesized that the search algorithm should be revisited. The test we planned focused on how to weight release date (which we called recency) against popularity, and on whether vinyl as a format should trump all.

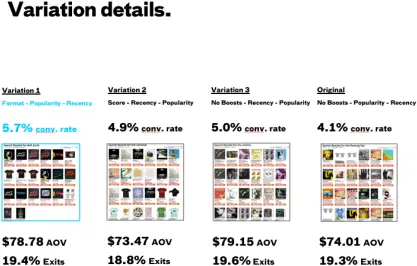

While many think about Optimizely as a dead-simple way to make front-end design changes in experiments, we used Optimizely as a “quarterback” of sorts. We helped Insound define four permutations of the search results page, based on different factors weighting the algorithm. Each of those permutations was then assigned a variable that we passed through to Insound. Optimizely essentially ran command and control: it managed which traffic saw which search results, helped us with targeting (excluding certain behaviors and cell phone traffic) and, of course, helped us measure for significance.
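To make the hand-off concrete, here is a minimal sketch of how a variation key passed from the testing tool could select ranking weights on the store side. This is not Insound’s actual code. The variant names, the weight values, the `Product` fields and the `rank` function are all assumptions made for illustration; the article only establishes that four permutations weighted popularity, recency and vinyl differently.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Product:
    title: str
    fmt: str          # "vinyl" or "cd" (hypothetical field)
    released: date
    units_sold: int


@dataclass(frozen=True)
class Weights:
    popularity: float
    recency: float
    vinyl_first: bool  # when True, vinyl sorts ahead of every other format


# Hypothetical variant keys and weights; the real four permutations
# are not spelled out in the article.
VARIANTS = {
    "control": Weights(popularity=0.8, recency=0.2, vinyl_first=False),
    "recency": Weights(popularity=0.2, recency=0.8, vinyl_first=False),
    "vinyl_popularity": Weights(popularity=0.8, recency=0.2, vinyl_first=True),
    "vinyl_recency": Weights(popularity=0.2, recency=0.8, vinyl_first=True),
}


def rank(products, variant, today):
    """Order products for one search, using the weights of the given variant."""
    # Unknown keys fall back to the control behavior.
    w = VARIANTS.get(variant, VARIANTS["control"])
    # Normalizers; "or 1" avoids dividing by zero on empty or degenerate input.
    max_units = max((p.units_sold for p in products), default=0) or 1
    max_age = max(((today - p.released).days for p in products), default=0) or 1

    def sort_key(p):
        popularity = p.units_sold / max_units
        recency = 1 - (today - p.released).days / max_age
        score = w.popularity * popularity + w.recency * recency
        # Tuples compare left to right, so the vinyl flag dominates the score.
        return (w.vinyl_first and p.fmt == "vinyl", score)

    return sorted(products, key=sort_key, reverse=True)
```

With a catalog of one old best-selling CD and two LPs, `rank(catalog, "control", today)` would put the CD first, while `rank(catalog, "vinyl_recency", today)` would put the newest LP first. Keeping the weights in a lookup table means a new hypothesis is a new entry rather than a new code path.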

And the results? Our instincts to experiment and disrupt were greatly rewarded. The results variation that boosted vinyl as a format, and then weighted release date over popularity, beat the control’s conversion rate by 39% and beat the next best variation by 14%. This variation also enjoyed a slight bump in average order value (AOV), which was not a test metric but was further confirmation that it resonated with customers.
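For readers who want to see the arithmetic behind a figure like this, the sketch below computes relative lift in conversion rate and a two-sided p-value from a standard two-proportion z-test. The visitor and order counts are invented purely for illustration (the test’s raw numbers are not published here), and this is a textbook calculation, not necessarily the one Optimizely performs.

```python
from math import erf, sqrt


def relative_lift(variant_rate, control_rate):
    """Relative improvement of the variant over the control (0.39 means 39%)."""
    return (variant_rate - control_rate) / control_rate


def two_proportion_p_value(orders_a, visitors_a, orders_b, visitors_b):
    """Two-sided p-value for the difference between two conversion rates."""
    pooled = (orders_a + orders_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (orders_b / visitors_b - orders_a / visitors_a) / std_err
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - normal_cdf)


# Hypothetical counts, chosen only so the lift works out to 39%.
control_orders, control_visitors = 200, 10_000
variant_orders, variant_visitors = 278, 10_000

lift = relative_lift(variant_orders / variant_visitors,
                     control_orders / control_visitors)
p_value = two_proportion_p_value(control_orders, control_visitors,
                                 variant_orders, variant_visitors)
```

Note that lift here is relative: a control converting at 2.0% and a variation converting at 2.78% differ by 0.78 percentage points, which is a 39% relative improvement.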

Perhaps the greatest benefit of the test, though, was the model it put in place for future, continuous experimentation around search results. By using Optimizely’s A/B testing tool to pass variables back to Insound to inform how search results are rendered, we put the client on the path to diving deeper into new hypotheses around search behavior. Very quickly we developed new thinking around how price sorting or format sorting could change based on other data we have about the customer, such as previous add-to-cart activity and order history. So, while this test identified a winner, the opportunity to keep using Optimizely to segment further and target search results better was the real win!

Search results for “Cat Power” with the winning search algorithm variation.