Avoid Wasting Resources with this 7-Step A/B Testing Process

After optimizing for digital media audiences at Sky and Dailymotion, I’ve learned the importance of a strong A/B testing process to drive effective optimization. Follow this 7-step process for effective A/B testing to ensure that your tests are meaningful, that resources are not wasted, and that you know what to expect each and every time you get ready to run an experiment.

Richard Eckles

Knowing where to start when it comes to A/B testing can be challenging. However, the important point to remember is that the people and processes in place are just as vital to testing success as the tool that you’re using.

Between my previous experience in optimization at Sky and my current role at Dailymotion, I've learned that when you carefully plan each A/B and multivariate test from the beginning, the execution and outcomes of the experiment fall into place more easily. Because the process behind effective optimization and testing is so essential, I've put together this 7-step process for effective A/B testing, which will help you generate more meaningful results, avoid wasting resources, and ensure that you don't lose organizational knowledge.

Step 1: Planning

The planning phase underpins an entire test and is arguably one of the most important steps to take to ensure that you will generate meaningful results you can act on. Every test must have a hypothesis, which should articulate the problem you are trying to solve with the test. A strong hypothesis will also propose a solution to the problem and make a prediction about the expected result.

Here’s an example of a bad hypothesis:

Reducing the size of the homepage thumbnail images will increase our visitor click through rate.

And a good hypothesis:

The click through rate on the home screen thumbnails is low. By reducing the size of the thumbnails, we will display more videos above the fold and increase content discovery, therefore increasing the visitor click through rate.

The good hypothesis above identifies a quantifiable problem (a low click through rate on the home screen thumbnails), proposes a solution (reducing the size of the thumbnails so that more videos appear above the fold), and predicts a result (increased content discovery and, therefore, a higher click through rate). Simple!

When planning your experiment, also consider how you will track your goals, which audience segments you want to test on (if any), and how you are going to segment your experiment results data to enhance your learnings.
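One way to make sure a plan covers all of this is to write it down in a fixed shape. The Python sketch below is a hypothetical template, not a feature of any testing tool: the class and field names (`Hypothesis`, `ExperimentPlan`, `primary_goal`, `segments`) are illustrative assumptions. It records the three parts of a hypothesis from the example above, plus the goal, audience, and planned segments, and flags a hypothesis that is missing one of its parts.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    problem: str     # the measurable problem you have observed
    solution: str    # the change you propose to test
    prediction: str  # the result you expect the change to produce

    def is_complete(self) -> bool:
        # A strong hypothesis states all three parts; a blank part is a gap.
        return all(part.strip() for part in (self.problem, self.solution, self.prediction))


@dataclass
class ExperimentPlan:
    name: str
    hypothesis: Hypothesis
    primary_goal: str                    # event or metric that decides the test
    audience: str = "all visitors"       # who is eligible to enter the test
    segments: list[str] = field(default_factory=list)  # breakdowns planned for analysis


plan = ExperimentPlan(
    name="homepage-thumbnail-size",
    hypothesis=Hypothesis(
        problem="The click through rate on the home screen thumbnails is low.",
        solution="Reduce the thumbnail size so more videos appear above the fold.",
        prediction="Content discovery increases, so the click through rate increases.",
    ),
    primary_goal="homepage_thumbnail_click",
    segments=["traffic_source", "device", "country"],
)

# The "bad" hypothesis from Step 1: a solution and a prediction, but no stated problem.
bad = Hypothesis(
    problem="",
    solution="Reduce the size of the homepage thumbnail images.",
    prediction="Visitor click through rate increases.",
)

print(plan.hypothesis.is_complete(), bad.is_complete())  # True False
```

The bad hypothesis has a solution and a prediction but never states the problem, which is exactly the gap `is_complete()` catches.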

Step 2: Design

This step involves designing your A/B test variations and gaining internal approval to test them. Design as many potential solutions as your hypothesis calls for, creating one or more variations for each variable you want to change in the test. Testing several versions of a single element is an A/B/n test; testing changes to several elements in combination is a multivariate test.
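The choice between the two affects how many variations you end up building. The sketch below assumes a full-factorial multivariate design, in which every combination of versions is tested; the variable names and versions are hypothetical, and testing tools may offer other designs.

```python
from itertools import product

# Hypothetical variables and versions; "current" is the control for each one.
variables = {
    "thumbnail_size": ["current", "small"],
    "thumbnail_title": ["current", "shortened"],
}


def abn_variations(versions):
    # A/B/n: one variable, and each version (control included) is one variation.
    return list(versions)


def multivariate_variations(variables):
    # Full-factorial multivariate: every combination of versions is one variation.
    names = list(variables)
    return [dict(zip(names, combo)) for combo in product(*variables.values())]


sizes = abn_variations(["current", "small", "smaller"])
combos = multivariate_variations(variables)
print(len(sizes), len(combos))  # 3 4
for combo in combos:
    print(combo)
```

Each additional variable multiplies the number of combinations, so the same traffic is split across more variations and each one takes longer to collect enough data.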

Getting the go-ahead from the rest of the team or other people within your organization to test these variations is essential. Make sure that anything you test is a change you would feel comfortable implementing longer-term on your website or in your mobile app. Without internal approval, you risk wasting resources and time on preparing for and running a test that may, in the end, never have an impact on your customer experience—no matter how amazing the results could be.

Step 3: Build & QA

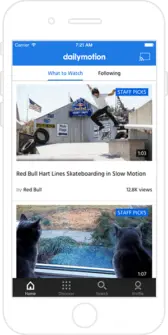

Dailymotion’s iOS App

Building an experiment is easy with a product like Optimizely. After deciding upon the design variations, create them, along with any necessary custom tracking, in the testing or analytics tool. After the variations have been created, QA the test in all major web browsers or on a beta version of your mobile app.

What custom tracking should you consider setting up as part of building your A/B test? In a test we ran on Dailymotion’s native iOS app, our team implemented a custom event in Optimizely to track when a homepage thumbnail was clicked. The event was set as our primary goal, and was also implemented in Google Analytics to give us flexibility when segmenting results.

In this instance, we had already integrated Google Analytics with Optimizely, so we were able to easily map a custom dimension to the experiment in Optimizely. By using Optimizely’s preview tool, we could then QA the experiment to ensure it was working as expected.
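The reason for connecting the experiment to analytics is that every goal event carries the variation the visitor saw, alongside attributes you may want to segment by later. The sketch below models that idea with a hypothetical `track_thumbnail_click` helper that appends to an in-memory list. It does not use, and is not meant to mirror, the Optimizely or Google Analytics SDKs; take their actual calls from their own documentation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Event:
    name: str
    user_id: str
    dimensions: dict[str, str] = field(default_factory=dict)
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


# Stand-in for the testing and analytics tools that would receive the events.
EVENT_LOG: list[Event] = []


def track_thumbnail_click(user_id, experiment, variation, device, traffic_source):
    """Hypothetical helper: record the primary goal event with the experiment
    and variation attached as dimensions, so results can be segmented later."""
    EVENT_LOG.append(
        Event(
            name="homepage_thumbnail_click",
            user_id=user_id,
            dimensions={
                "experiment": experiment,
                "variation": variation,
                "device": device,
                "traffic_source": traffic_source,
            },
        )
    )


track_thumbnail_click("user-123", "homepage-thumbnail-size", "small_thumbnails",
                      device="iphone", traffic_source="direct")
print(EVENT_LOG[-1].dimensions["variation"])  # small_thumbnails
```

During QA, the same kind of check (trigger the event, then confirm it arrived tagged with the variation you are previewing) is a quick way to catch tracking that records the wrong variation.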

Step 4: Launch

This step is fairly self-explanatory: at this stage of the process, set the experiment live and wait for the results to come in.

Step 5: Analysis

It’s time to review the results of the test. Are the results statistically significant? If not, consider running the test longer to reach a statistical significance threshold.
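For a click through rate goal like the one in Step 1, one common way to check significance is a two-proportion z-test. The sketch below illustrates that test under that assumption; it is not the calculation any particular tool performs (testing platforms may use different statistical methods), and the visitor and click counts are made-up numbers.

```python
import math


def two_proportion_z_test(clicks_a, visitors_a, clicks_b, visitors_b):
    """Two-sided two-proportion z-test comparing click through rates.

    A is the control, B the variation. Uses the pooled rate under the null
    hypothesis of no difference. Returns (relative_uplift, z, p_value).
    """
    rate_a = clicks_a / visitors_a
    rate_b = clicks_b / visitors_b
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if rate_a == 0 or std_err == 0:
        raise ValueError("need some clicks and some non-clicks to compare")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value for a standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return (rate_b - rate_a) / rate_a, z, p_value


# Made-up counts: control 500 clicks from 10,000 visitors, variation 580 from 10,000.
uplift, z, p = two_proportion_z_test(500, 10_000, 580, 10_000)
print(f"uplift={uplift:.1%}  z={z:.2f}  p={p:.4f}")
```

A p-value below the threshold you chose before launching (0.05 is a common choice) indicates a statistically significant difference; above it, the test has not yet shown one.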

Challenge the data that you get from an A/B test. If you see no improvement in the high-level data, try segmenting the results by audience attributes such as traffic source, device, or country to see whether the variation(s) performed differently for different segments. Remember not to treat every user the same, and make sure that the analysis and insights you draw link back to the hypothesis you formed in Step 1.
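Segmenting results is essentially a group-by over visitor-level data. The sketch below assumes hypothetical visitor rows with a segment attribute, a `variation` label, and a `clicked` flag, and computes the click through rate for each segment and variation pair.

```python
from collections import defaultdict


def ctr_by_segment(rows, segment_key):
    """Click through rate for each (segment value, variation) pair.

    `rows` holds one dict per visitor with the segment attribute, a
    "variation" label and a boolean "clicked" flag.
    """
    visitors = defaultdict(int)
    clicks = defaultdict(int)
    for row in rows:
        key = (row[segment_key], row["variation"])
        visitors[key] += 1
        clicks[key] += int(row["clicked"])
    return {key: clicks[key] / visitors[key] for key in visitors}


# Hypothetical visitor rows, far too few for a real analysis.
rows = [
    {"device": "mobile", "variation": "control", "clicked": False},
    {"device": "mobile", "variation": "control", "clicked": True},
    {"device": "mobile", "variation": "small_thumbnails", "clicked": True},
    {"device": "mobile", "variation": "small_thumbnails", "clicked": True},
    {"device": "desktop", "variation": "control", "clicked": True},
    {"device": "desktop", "variation": "control", "clicked": False},
    {"device": "desktop", "variation": "small_thumbnails", "clicked": False},
    {"device": "desktop", "variation": "small_thumbnails", "clicked": True},
]

for (device, variation), ctr in sorted(ctr_by_segment(rows, "device").items()):
    print(f"{device:8} {variation:17} {ctr:.0%}")
```

Each segment holds only part of the traffic, so a difference within one segment should pass its own significance check before you act on it; slicing by many attributes also raises the chance that one of them looks different by luck.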

For example, for the hypothesis detailed in Step 1, if the results show a statistically significant uplift in our success metric (homepage click through rate), then we have good evidence that reducing the size of the thumbnails improved content discovery, and the hypothesis is supported.

Step 6: Action

After analyzing the results, acting upon them is the next logical step to take in your A/B testing process. Is there a clear winner in the A/B test? Is the test generating more questions than answers?

Answering yes or no to either question is fine. If there isn't a clear winning variation, there is still insight to be gained, since the change did not produce the effect your hypothesis predicted. However, if there is a clear winner, decide whether it is best to roll out the new experience or to run an iterative test that builds on its success and tries to drive an even greater uplift. If the test raises more questions, use them to guide the direction of future tests you may want to start planning.
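The decision in this step can be written as a few explicit rules. The function below is a hypothetical sketch: it takes the variation's relative uplift in the success metric and the p-value from whatever significance test you used in Step 5, and the 0.05 threshold and the wording of each outcome are assumptions to adapt to your own process.

```python
def next_action(uplift, p_value, alpha=0.05):
    """Hypothetical rules mapping an analysed result to a next step.

    `uplift` is the variation's relative change in the success metric and
    `p_value` comes from a significance test such as a two-proportion z-test.
    """
    if p_value >= alpha:
        return "no clear winner: record the insight and refine the hypothesis"
    if uplift > 0:
        return "variation wins: roll it out, or run an iterative test to build on it"
    return "control wins: keep the current experience and record why"


print(next_action(uplift=0.16, p_value=0.012))
print(next_action(uplift=0.02, p_value=0.40))
```

Writing the rules down before the test ends makes it harder to reinterpret an inconclusive result as a win after the fact.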

Step 7: Document

Unfortunately, this last step often gets forgotten. However, there are many benefits to documenting test plans, results, and actions.

Documenting lets you learn from past A/B and multivariate tests and avoids tests being repeated further down the line when they may seem like a distant memory. Having an organized and accessible record of historical tests means that no insight is ever wasted. Whether the test is recorded as a success or if it showed inconclusive results, it’s important to have a note of where to improve and what can be done differently the next time around.
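A test log does not need special tooling; even a simple structured record makes past tests searchable. The sketch below is hypothetical: the `ExperimentRecord` fields and the keyword search are assumptions, and the sample entry is a placeholder rather than a real result.

```python
from dataclasses import dataclass


@dataclass
class ExperimentRecord:
    name: str
    hypothesis: str
    outcome: str    # e.g. "win", "loss" or "inconclusive"
    action: str     # what was done as a result
    learnings: str  # insight worth keeping, whatever the outcome


def find_related(log, keyword):
    # Case-insensitive search over past hypotheses and learnings, so the team
    # can check whether an idea has already been tested before planning it again.
    kw = keyword.lower()
    return [r for r in log if kw in r.hypothesis.lower() or kw in r.learnings.lower()]


# Placeholder entry; fill in the real details from your own tests.
log = [
    ExperimentRecord(
        name="homepage-thumbnail-size",
        hypothesis="Smaller homepage thumbnails show more videos above the fold "
                   "and increase the click through rate.",
        outcome="<win | loss | inconclusive>",
        action="<rolled out | iterated | kept control>",
        learnings="<what the segmented results showed>",
    ),
]

print([r.name for r in find_related(log, "Thumbnail")])
```

A shared spreadsheet with the same columns works just as well; what matters is that every test is recorded with the same fields so it can be found later.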

You might find that some tests don't need to follow all seven steps to be successful, but the process is a useful guide to refer to. By keeping tests simple and data-driven, without too many KPIs, and by getting the rest of the team on board, you will soon be on your way to testing success. And remember: you can't fail when testing, because there is always insight to be gained!
