Ask an Experimenter: Claire Vo, VP of Product at Optimizely


Claire Vo

Welcome to the first installment of Ask an Experimenter, a new series in which we interview experts about how they are building a culture of experimentation at their companies. Our first interview is with Claire Vo, Optimizely's VP of Product.

Tell us a little about yourself.

I'm the VP of Product at Optimizely, overseeing our product and design teams and partnering closely with our engineering group to deliver the world's best experimentation platform. I've been running experimentation programs for a very long time, and was CEO and founder of a company (Experiment Engine) entirely focused on scaling enterprise experimentation. I'm also the person accountable for making sure our product and engineering teams adopt experimentation best practices and grow our program at Optimizely.

What does "experimentation" mean to you?

To me, experimentation is simply the process of using data to validate an idea.

In business, the idea is usually something like "this will make us more money" or "users will love this feature" or "this new product won't break something." If you experiment, you let data tell you whether those statements are true. If you don't, you're just guessing.

Who runs experiments in your organization? Where do ideas come from?

There are two teams that run the bulk of experiments at Optimizely: our marketing team, which focuses on www.optimizely.com and runs personalization campaigns and experiments on our lead-generation funnel, and our product/engineering/design team, which focuses more on growth, engagement, and product quality.

Since I run the product team, I focus more on the experiments that run on our core applications: Optimizely X Web, Full Stack, and Program Management. It is important to me that everyone at Optimizely engages in our experimentation program, especially product managers, designers, and engineers. Because I place such a high value on experimentation, running more experiments and driving adoption of experimentation practices is a key part of my annual objectives. I'm measured on the breadth and velocity of our use of Optimizely here at Optimizely, and I report on these metrics quarterly to our leadership team.

As we first started to ramp up our product experimentation, the bulk of ideas came from our product managers. They track their own performance based on user adoption metrics, and experimentation is an obvious way to figure out what moves the needle. I've said that conversion optimization is product management, so it's natural that our PMs have built up a huge backlog of testing ideas at Optimizely.

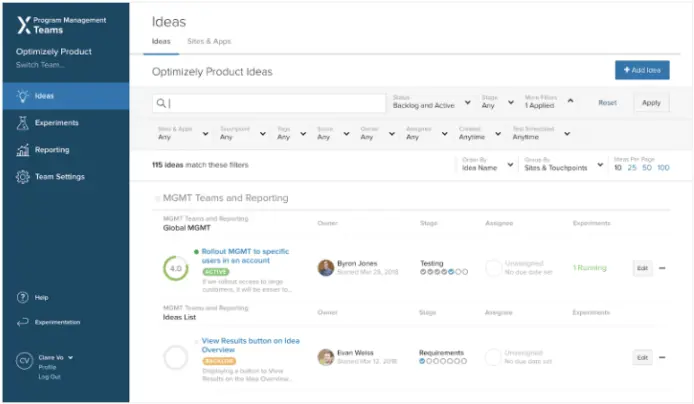

That being said, we're starting to get more engagement from across our teams in the ideation process. We use Optimizely Program Management as a central tool for capturing hypotheses, and engineers and designers are submitting ideas for testing at an increasing rate. Another great source of ideas is our technical support team, who get their ideas directly from the customers they speak to every day.

What is in your experimentation toolkit or stack?

We use Optimizely X Web and Full Stack for testing on all parts of our application. We use client-side testing to launch quick experiments, run personalization campaigns, and do low-friction testing via extensions. We're actually building a whole toolkit of configurable extensions specifically to address new-product discoverability; this gives us an easy way to add "new" badges and full-page callouts for recently launched features.

For more complicated experiments, we use Full Stack across our JavaScript and Python SDKs. Like many of our customers, we've also recently upgraded to our 2.0 SDKs to take advantage of feature rollouts and environments. This has been awesome for getting engineering engaged in experimentation, because now everything that goes out the door is set up as a feature that can be progressively rolled out (which is a convenient excuse to play Ludacris at our weekly launch review).
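For readers unfamiliar with progressive rollouts, the general idea can be sketched as deterministic percentage bucketing: each user is hashed into a fixed bucket, and a feature is enabled for buckets below a threshold that grows as the rollout ramps up. This is a minimal generic sketch, not the Optimizely SDK API or its actual hashing scheme; the function names, the SHA-256 hash, the 10,000-bucket resolution, and the `new-badge` feature key are all hypothetical.

```python
import hashlib

# Hypothetical resolution: 10,000 buckets allows rollout steps of 0.01%.
BUCKET_COUNT = 10_000


def bucket_for(user_id: str, feature_key: str) -> int:
    """Map a (feature, user) pair to a stable bucket in [0, BUCKET_COUNT)."""
    digest = hashlib.sha256(f"{feature_key}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then reduce to a bucket.
    return int.from_bytes(digest[:8], "big") % BUCKET_COUNT


def is_enabled(user_id: str, feature_key: str, rollout_percent: float) -> bool:
    """Return True if this user's bucket falls inside the current rollout percentage."""
    threshold = round(rollout_percent * BUCKET_COUNT / 100)
    return bucket_for(user_id, feature_key) < threshold


# Example: ramping a hypothetical "new-badge" feature from 10% to 50% of users.
users = [f"user-{i}" for i in range(1000)]
at_10 = {u for u in users if is_enabled(u, "new-badge", 10)}
at_50 = {u for u in users if is_enabled(u, "new-badge", 50)}
```

Because a user's bucket never changes and the threshold only increases, everyone who saw the feature at 10% still sees it at 50%, which keeps the experience consistent as a rollout progresses.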

As I mentioned, we also use Optimizely Program Management for all of our ideation, collaboration, and knowledge sharing. All ideas go into our "Optimizely Product" team in Program Management, where they are reviewed weekly. My favorite part of having the team use Program Management is getting to see the scores and comments on different experiment ideas. Our Program Management instance is fairly advanced (one would hope so, given I built the platform!), so we use a lot of features like tagging, custom fields, program reporting, and attachment comments to keep ourselves organized.

Of course, data is important for any team generating hypotheses and reviewing results. We use Segment to instrument all of our application tracking (in addition to our application database, which is our source of truth). All of this data lands in a data warehouse that PMs query directly to discover insights about user behavior and develop hypotheses for testing. Less technical users, like our designers, review this data in a tool like Google Analytics.

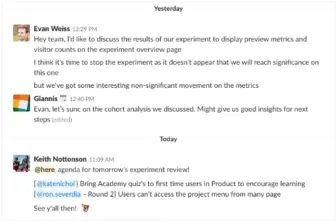

I believe collaboration and transparency are critical in building a Culture of Experimentation. All of our activity from Program Management is sent into Slack so the whole company can track what's happening. We also have a dedicated Slack channel for discussing experiments, getting help, and coordinating experiments across the team.

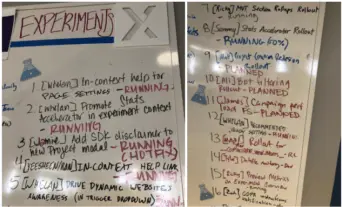

Finally, my favorite tool in our experimentation stack: our giant whiteboard with a numbered list of all the experiments we've run in a quarter. I love watching this get filled in over the course of a few months, and it's fun to see how it motivates the team to hit the experimentation velocity goals.

What was the most surprising or unintuitive result you've seen?

I've been running product teams for a long time and was able to observe thousands of experiments as the CEO of Experiment Engine. Over the years I've realized that it's almost impossible to predict what will happen in a test, and you can waste a lot of emotional energy (and time arguing in meetings) trying to guess what will win. That's why, when I built the scoring framework for Program Management, I included a "love" component. It lets people state their opinion of what might work without spending too much time discussing it.

That being said, I've never seen an orange CTA perform worse. The second I see an orange button lose, I'll be surprised.

How many experiments are you aiming to run this year?

In the product: 100, including feature rollouts. It's a bit of a stretch goal, but I think we can do it!