Defining your experimentation program: Templates for team structure, key contributors and KPIs

Becca Bruggman

When starting your experimentation program, there are a few foundational questions to answer:

- Who are my key stakeholders? Who (individuals and teams) will contribute to experimentation?

- What structure will my team have? Will it be centralized or dispersed?

- What are the key metrics I’m looking to impact? Where are the areas of my business where I want to accelerate learning?

- How will I onboard new people to experimentation?

The first question is an important one to answer before getting into team structure. Who is willing and able to contribute to experimentation will greatly influence how you structure your team.

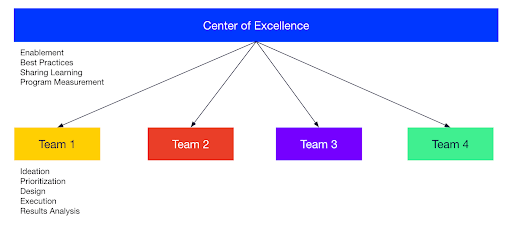

At Optimizely we have a hybrid model: an overarching Center of Excellence, which can be thought of as an experimentation consultancy within a single organization, and an Individual Team that sits within the Center of Excellence. Our Product and Marketing teams both fall under this Center of Excellence umbrella. The “teams” that the Center of Excellence serves are our Product Areas (cross-functional teams called Squads that include Designers, Product Managers and Engineers) and the entire Marketing site, which is its own “Team”.

Optimizely’s Center of Excellence Structure

As the Program Manager for the Center of Excellence, my role is to enable new team members, share learnings and improve the overall process. Within our Product organization, our Squads handle most of their own ideation, prioritization and execution of experiments. Because of this structure, to get these Squads to run experiments I must influence my key stakeholder within each Squad (the Product Manager) to prioritize launching experiments. To influence them to prioritize running impactful experiments, I have to understand what they are measured on and what gets them promoted.

Center of Excellence Pros and Cons

- Pros: I don’t personally have to prioritize and build the experiments. I can mainly focus on overall program improvement and visibility.

- Cons: Less control over experiment velocity. Experiments have to be impactful enough for these teams to prioritize them against other product work. I have to constantly look for ways to influence their roadmap and resourcing without having control over it. It requires very clearly defined processes for the team to follow to create consistency in experimentation practices. Strong executive buy-in and support are usually needed.

On the Marketing team we have what’s called an “Individual Team”, which rolls up into the larger Center of Excellence. You can think of this as another Squad, but for the entire Marketing site at Optimizely. As the Program Manager here, I focus more on gathering as many experiment ideas as possible from across the organization, then prioritizing the best ones and building them.

Individual Team Pros and Cons

- Pros: The ability to prioritize and build the experiments. More control over experiment velocity.

- Cons: The need to ensure there are “pull” mechanisms for continuing to get enough experiment ideas into the backlog to prioritize. We need ongoing dedicated resources to build experiments and do QA. More time is spent enabling members outside of the core team if you are seeking to empower everyone to run experiments.

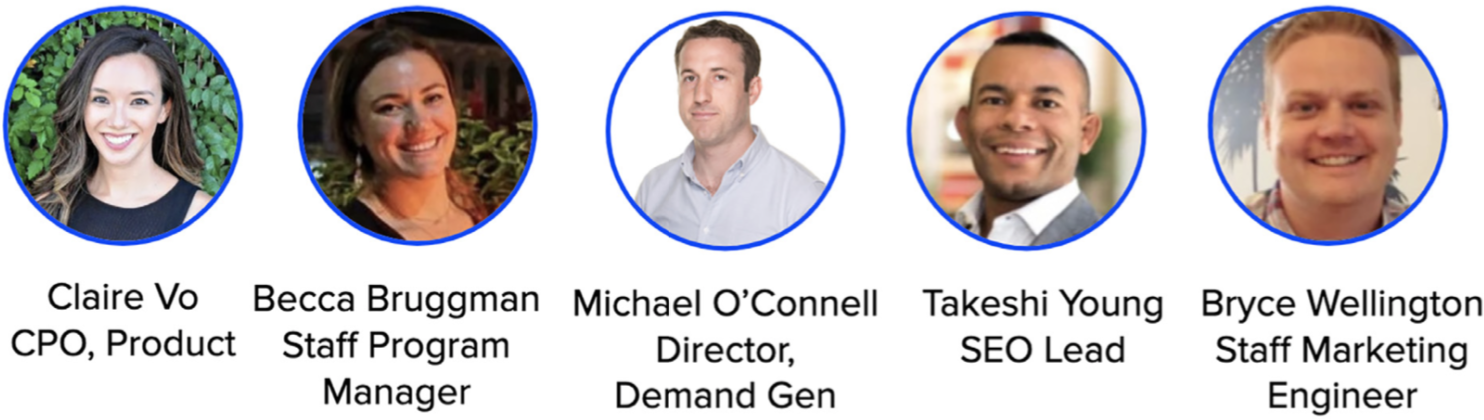

This is what works for us given the key stakeholders we have in place across Product and Marketing, which you can see below.

Key Experimentation Stakeholders at Optimizely – Product and Marketing

On ADEPT (A Design Engineering Product Team), our Chief Product Officer, Claire Vo, is our executive sponsor and has the authority to influence the Product Managers to prioritize experiments within our Center of Excellence. Meanwhile, on Marketing, our Director of Demand Gen, Michael O’Connell, is the executive sponsor, and Takeshi Young, Website Producer & SEO Lead, and Bryce Wellington, Staff Frontend Engineer, contribute to experiment build within our Individual Team. Additionally, I work to enable Optinauts (what we call ourselves here at Optimizely) across the organization to run client-side experiments, so that even more people can contribute to experiment build. As the Program Manager, I’m shared across both teams, which helps with streamlining processes and finding opportunities for collaboration across all customer touchpoints.

In the RASCI and program-level documentation template included in Section 1 of the Toolkit, you can map out your key stakeholders and what level of contribution they would be making, similar to the above. It is never too early to start thinking about who you have on your team and what structure would make the most sense for you and your team.
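If you prefer to keep your stakeholder map somewhere more structured than a slide, here is a minimal sketch of a RASCI chart in Python. RASCI conventionally stands for Responsible, Accountable, Supporting, Consulted and Informed. The activities and role assignments below are hypothetical placeholders, not Optimizely’s actual chart, and the "exactly one Accountable per activity" check is a common convention I’m assuming here rather than something the Toolkit template requires.

```python
# The five RASCI levels of contribution.
ROLES = {
    "R": "Responsible",
    "A": "Accountable",
    "S": "Supporting",
    "C": "Consulted",
    "I": "Informed",
}

# Hypothetical chart: activity -> {stakeholder: RASCI letter}.
# Replace the activities, stakeholders and letters with your own.
rasci_chart = {
    "Prioritize experiment backlog": {
        "Program Manager": "A",
        "Product Manager": "R",
        "Executive Sponsor": "I",
    },
    "Build and QA experiment": {
        "Program Manager": "A",
        "Frontend Engineer": "R",
        "Designer": "S",
    },
}


def validate_rasci(chart):
    """Return a list of problems found in the chart (empty if none)."""
    problems = []
    for activity, assignments in chart.items():
        # Flag letters that are not one of R, A, S, C, I.
        unknown = sorted(set(assignments.values()) - set(ROLES))
        if unknown:
            problems.append(f"{activity}: unknown role(s) {unknown}")
        # Assumed convention: each activity has exactly one Accountable person.
        accountable = [who for who, role in assignments.items() if role == "A"]
        if len(accountable) != 1:
            problems.append(
                f"{activity}: expected 1 Accountable, found {len(accountable)}"
            )
    return problems


print(validate_rasci(rasci_chart))
```

Running a check like this whenever the chart changes is one way to catch activities that nobody owns before they stall.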

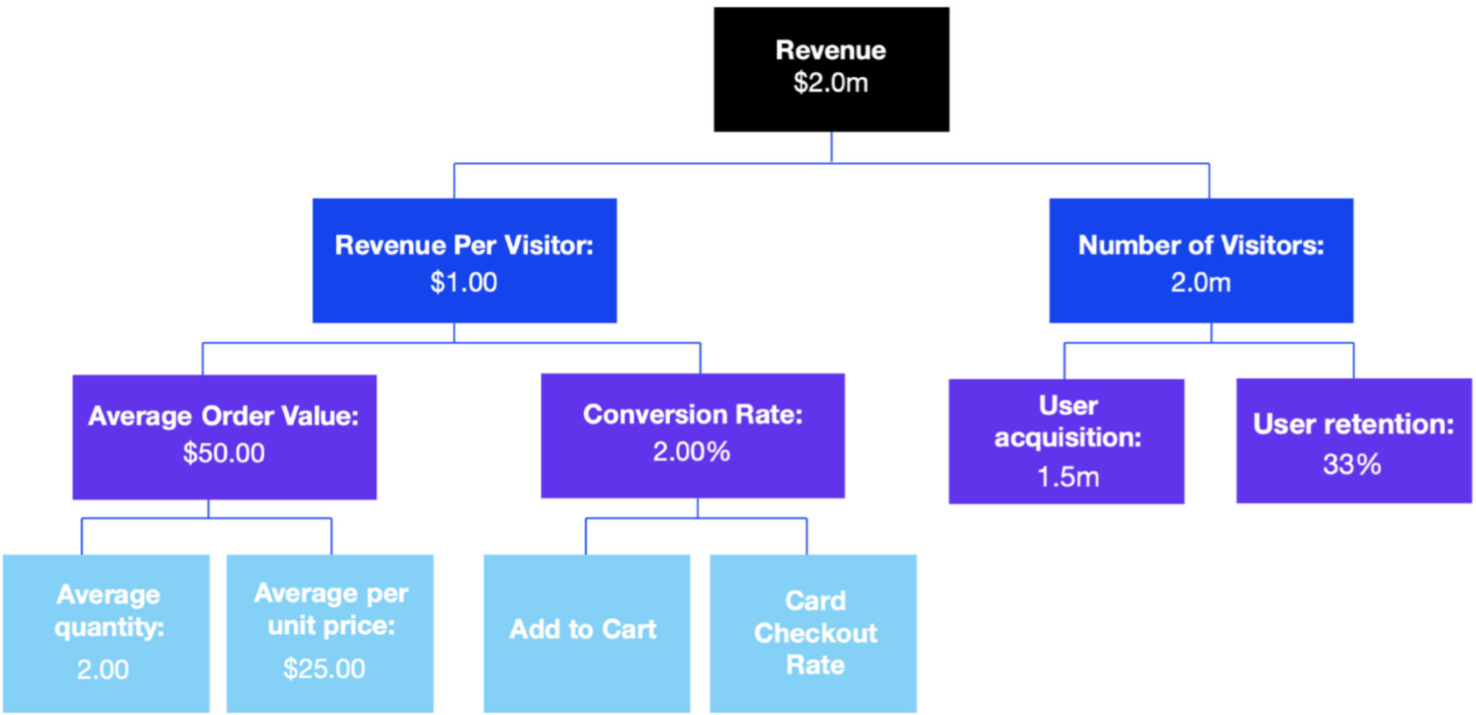

So, you’ve thought about the stakeholders and contributors to your program as well as what structure works best. Now let’s talk about a goal tree! We have a great post on our Knowledge Base covering how to build a goal tree and why. You’ll also find a template for building one for your own team in the Toolkit. To summarize: identifying the metrics you can move through experimentation that track back to your top-line key performance indicators (KPIs), such as revenue or sales accepted leads (SALs), can go a long way toward showing the impact of your program and ensuring you are focusing on the right metrics. A goal tree also supports experiment ideation: a great place to begin is with experiments that have the potential to generate a lot of learnings about the most important metrics for your organization (more on that in a later post!).

I’ve included two representations of a goal tree below so you can see what these look like and how to build them for your own team:

Goal Tree with Top Level KPI to Experiment Metrics
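To make the idea concrete, a goal tree can also be sketched as a small nested data structure. This is only an illustration: the `Goal` class and the metric names under the top-level KPI are hypothetical examples I made up, so swap in the metrics that matter for your own team.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Goal:
    """One node in a goal tree: a metric plus the metrics that feed into it."""
    name: str
    children: List["Goal"] = field(default_factory=list)

    def path_to(self, metric: str) -> List[str]:
        """Return the chain of goals from this node down to `metric`, or []."""
        if self.name == metric:
            return [self.name]
        for child in self.children:
            sub_path = child.path_to(metric)
            if sub_path:
                return [self.name] + sub_path
        return []

    def experiment_metrics(self) -> List[str]:
        """Return the bottom-level metrics, the ones experiments move directly."""
        if not self.children:
            return [self.name]
        metrics = []
        for child in self.children:
            metrics.extend(child.experiment_metrics())
        return metrics


# Hypothetical tree: a top-level KPI broken down into experiment metrics.
goal_tree = Goal("Sales accepted leads (SALs)", [
    Goal("Demo requests", [
        Goal("Demo form completion rate"),
        Goal("Pricing page click-through rate"),
    ]),
    Goal("Free trial signups", [
        Goal("Signup form completion rate"),
    ]),
])

# Trace an experiment metric back up to the top-level KPI.
print(goal_tree.path_to("Pricing page click-through rate"))
```

Being able to trace any experiment metric back to a top-level KPI is the point of the exercise: if `path_to` comes back empty for a metric you are testing against, that experiment probably isn’t tied to a goal the business cares about.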

Now, let’s bring all of that program-level information together! To clearly document the key stakeholders and top-level information for the program, we have an internal resource that captures everything in one place. This is helpful for onboarding new Optinauts, and it gives us a single source of truth for all the top-level program information. It can include a summary of all onboarding documentation, a charter for your experimentation program, goal trees, and relevant meetings, Slack channels and presentations. I encourage you to update it once a quarter so the information stays current. A template for this is also included in the Toolkit so you can create program-level documentation relevant to your team as well.

How are you implementing this for your own team? Comment below or tweet me @bexcitement.

See you in the next post: How to build, QA and launch experiments at scale! (Hint: lots of documentation and process!)