A/B Testing Internal Site Search for Stock Photos: Exact Match vs. Fuzzy Match

We interviewed Boris Masis, a program manager at Bigstock, a subsidiary of Shutterstock, about testing culture at Bigstock, how the team prioritizes test ideas, and a unique test on their internal search algorithm that dramatically improved the user experience for Bigstock.

Cara Harshman

Boris Masis is a program manager at Bigstock, a subsidiary of Shutterstock. I interviewed him about testing culture at Bigstock, how the team prioritizes test ideas, and a unique test that dramatically improved the user experience for Bigstock.

Optimizely: How long have you been A/B testing?

Boris Masis: We’ve been testing for about a year. While searching for a testing platform, we looked at VWO and Optimizely. We got started with a demo from Optimizely and fell in love. Since then, testing has played a significant role in our roadmap. It’s part of our product rollout strategy. For example, we just introduced two fairly large features that we tested first: new checkout flows, and a brand new search algorithm. Testing ensures that the work is adding value and quantifies that value. Of course, we also do testing when we are unsure of which path to take.

Optimizely: What’s your testing process like?

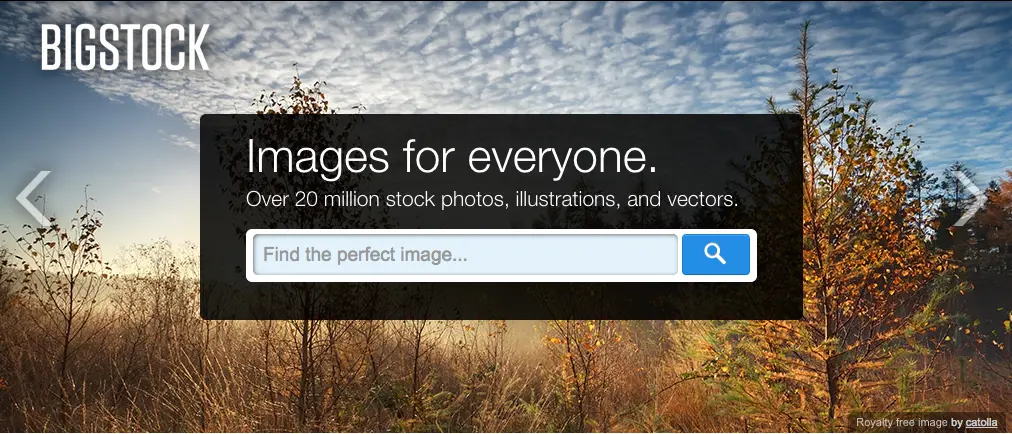

A snapshot of the A/B testing process at Bigstock.

Boris: We have a whiteboard where projects go from “planning,” to “in development,” to “A/B tested,” to “fully delivered.” We try to run every large project through A/B testing before we call it done to make sure it’s actually helping.

“It’s easy to make A/B testing a part of your development flow and process. After you’ve done the first few tests, it’s natural for you to want to continue. It gives very tangible results and helps you see what impact you’re making.”

–Boris Masis, Program Manager, Bigstock

Optimizely: How have you built a testing culture throughout Bigstock?

Boris: From a developer’s point of view, it’s been really well perceived and embraced. People are excited to monitor results. We have a stats TV in the office that rotates through the metrics, and there are usually one or two Optimizely results in there. It’s great to have measurable outcomes we can talk about. A few months ago, we got the whole Bigstock group together to watch the Best Practices & Lessons Learned Webinar recording. People who aren’t even working on the site can get a sense of how the process works and give their ideas. It’s a little bit of a challenge to get everyone engaged, but it’s pretty informal. When we’re not sure of something, testing is the obvious answer.

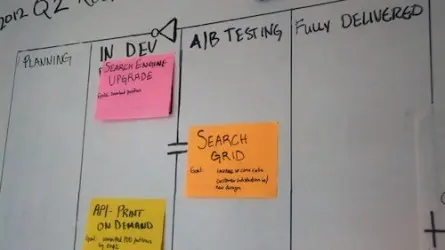

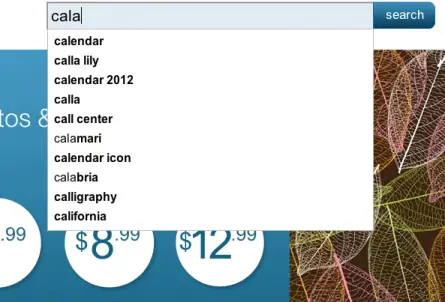

Case study: Fuzzy Search Algorithm Makes Search Results 10% More Accurate

As stock image websites, Bigstock and our parent company, Shutterstock, are always trying to suggest the most accurate search results. There’s definitely some amount of serendipity involved in searches, and people use many methods to arrive at what they’re looking for. We wanted to test the performance of a new search algorithm that would lead people to exactly what they were looking for. A developer designed a “fuzzy auto suggest” algorithm that guessed the intended search term when the word was misspelled (for example, “calendar” is commonly misspelled on our site). We tested it against the “exact auto suggest” algorithm, which populated results based on the exact search term as typed. Like any successful experiment, the test started with a goal.
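The interview doesn't describe how the fuzzy suggester works. As a rough illustration only, here is a minimal Python sketch assuming one common approach: rank keywords by their Levenshtein distance to the typed text (measured against the closest prefix of each keyword), with a typo allowance that grows with query length. The vocabulary, the function names, and the edit budget are all hypothetical, not Bigstock's implementation.

```python
"""Hypothetical sketch: exact vs. fuzzy auto-suggest over a tiny keyword list.

Assumption: this is NOT Bigstock's algorithm (the interview does not describe it).
It uses prefix edit distance, one common way to tolerate typos in auto-suggest.
"""

# Hypothetical vocabulary of popular search keywords.
VOCAB = ["calendar", "calculator", "camera", "candle", "sunset"]


def prefix_edit_distance(query: str, word: str) -> int:
    """Smallest Levenshtein distance between query and any prefix of word."""
    # prev[j] = edit distance between the query characters seen so far and word[:j].
    prev = list(range(len(word) + 1))
    for i, qc in enumerate(query, start=1):
        curr = [i]
        for j, wc in enumerate(word, start=1):
            cost = 0 if qc == wc else 1
            curr.append(min(prev[j] + 1,          # drop a query character
                            curr[j - 1] + 1,      # add a word character
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    # After the whole query, prev[j] = distance(query, word[:j]); keep the best prefix.
    return min(prev)


def edit_budget(query: str) -> int:
    """Assumed typo allowance: none for very short input, more for longer queries."""
    if len(query) <= 2:
        return 0
    return 1 if len(query) <= 5 else 2


def exact_suggest(query: str, vocab: list[str], limit: int = 5) -> list[str]:
    """Baseline: suggest only keywords that start with the query as typed."""
    q = query.lower()
    return [w for w in vocab if w.startswith(q)][:limit]


def fuzzy_suggest(query: str, vocab: list[str], limit: int = 5) -> list[str]:
    """Variant: suggest keywords within the edit budget, closest matches first."""
    q = query.lower()
    budget = edit_budget(q)
    scored = [(prefix_edit_distance(q, w), w) for w in vocab]
    return [w for d, w in sorted(scored) if d <= budget][:limit]


if __name__ == "__main__":
    print(exact_suggest("calender", VOCAB))  # [] : the misspelling matches nothing
    print(fuzzy_suggest("calender", VOCAB))  # ['calendar']
```

The sketch also shows the trade-off: with this list, typing "calend" makes the fuzzy variant suggest "candle" after "calendar". Whether the better typo handling outweighs the noisier neighbors is an empirical question, which is why a test like Bigstock's is useful.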

Goal

Our goal was to determine whether a fuzzy suggest algorithm would present better results to users and improve the overall user experience. In other words, if the algorithm presents the word you actually meant, are you more likely to select it? Bigstock also served as a proxy: a lightweight test here would also apply to Shutterstock.

Hypothesis

I didn’t know whether it was going to make a difference or not. Usually when we do a new design, the winner is fairly obvious. For this test, I didn’t have an intuitive sense.

Measurement

We measured the success of the test by the total number of suggested results that users selected.

Original (Exact Auto Suggest)

Variation (Fuzzy Auto Suggest)

Results

People selected results from the fuzzy auto suggest 9.6% more often. We saw significant improvements throughout, including a 6.52% increase in the number of images added to the cart and a 3.2% increase in downloaded images.
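Assuming these percentages are relative lifts (the interview doesn't say so explicitly, but "9.6% more often" reads that way), each one compares a rate in the variation with the same rate in the original. Here p is an assumed per-visitor rate, for example the share of visitors who selected a suggestion:

```latex
\text{lift} = \frac{p_{\text{fuzzy}} - p_{\text{exact}}}{p_{\text{exact}}},
\qquad
\text{lift} = 0.096 \;\Longleftrightarrow\; p_{\text{fuzzy}} = 1.096\, p_{\text{exact}}
```

Under that reading, a 9.6% lift is a relative change, not an increase of 9.6 percentage points.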

We ran the test for five days. Normally we run things longer, but this was a very high traffic experiment with clearly separated conversion rates. We also looked further downstream with Google Analytics at how it affected the rest of the image selection and purchase process. There we track many additional details about user behavior.
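The interview doesn't say which statistics the team relied on, but the reasoning behind stopping after five days can be sketched with a classic pooled two-proportion z-test: for the same gap between rates, the standard error shrinks as traffic grows, so a high-traffic test with well-separated rates reaches significance quickly. The counts below are hypothetical, and this is not necessarily how Optimizely computed significance.

```python
"""Hypothetical sketch: pooled two-proportion z-test for an A/B conversion metric.

Assumption: illustrative counts only, not Bigstock's data, and not necessarily
the statistics Optimizely used. Standard library only.
"""
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (relative_lift, z, two_sided_p) for variant B against control A."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Under the null hypothesis both arms share one rate, so pool them.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return (p_b - p_a) / p_a, z, p_value


if __name__ == "__main__":
    # Same hypothetical 20% -> 22% rates (a 10% relative lift) at two traffic levels.
    for n in (1_000, 50_000):
        lift, z, p = two_proportion_z_test(n // 5, n, n * 22 // 100, n)
        print(f"n={n:>6} per arm: lift={lift:.1%}  z={z:.2f}  p={p:.2g}")
```

With identical rates, the small sample is far from significant while the large one is overwhelmingly so. A fixed-horizon test like this assumes the sample size is chosen in advance; checking repeatedly and stopping at the first significant reading inflates the false-positive rate, so short tests deserve extra care.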

4 Key Testing Takeaways

- You need to test because sometimes your intuition is wrong.

- Interpreting results is difficult, and the more supporting data you have, the better. In other words, integrate your analytics platform.

- It’s easy to make A/B testing a part of your development flow and process. After you’ve done the first few tests, it’s natural for you to want to continue. It gives very tangible results and helps you see what impact you’re making.

- Focus on the big pieces first and do exploratory work before optimization work. For example, try out entirely new algorithms or page layouts first, and then do smaller tests like tweaking buttons.