The new content operating model

How AI is redefining enterprise content operations and orchestration

“Content is king.” – Bill Gates, 1996

Content may still be king, but in today’s AI-driven landscape, it rules a far more complex kingdom. Discovery is shifting from traditional search engines to generative systems. Content teams are being pushed to produce more, collaborate faster, and maintain clarity across an expanding ecosystem of channels and formats. The foundations that support content creation, including structure, organization, and governance, have never mattered more.

This report explores how AI is reshaping content discovery, and why structured, machine-readable content is now essential for visibility. It examines the operational foundations that enable effective content work, including stronger processes, better collaboration, and disciplined organization that improves reuse. It also highlights how AI agents, coordinated workflows, and governed prompts can scale production and deliver more consistent outcomes at speed.

Enriched with expert insights and data-backed benchmarks, this report provides a practical roadmap for building modern content operations that are ready for the age of AI.

Introduction

Fewer than 30% of marketers feel they have the tools and systems to manage content effectively across their organization.

The volume of content needed to meet customer needs is growing faster than most organizations can manage. AI has accelerated production, channels continue to multiply, and marketing teams are expected to deliver more with fewer resources. Yet the systems and workflows that support content creation have not kept pace. Many companies now face a widening gap between the volume of content the business demands and the operational maturity required to deliver it.

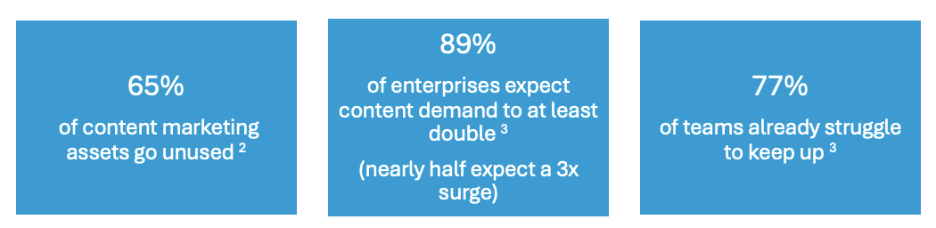

The data is telling.

The challenge is no longer just producing content. It is ensuring content can be found, understood, and reused across the organization and across an expanding set of AI-driven discovery systems. Visibility now depends on how clearly content is structured, how well assets are organized, and how consistently teams apply metadata, templates, and instructions.

Three foundations matter most:

- Structure: Content broken into reusable components that both humans and machines can interpret. This includes clear hierarchy, consistent metadata, and schema markup that help search engines and AI systems extract and reuse meaning.

- Organization: Systems, assets, and workflows connected and managed to reduce duplication, improve findability, and enable efficient collaboration across teams, tools, and regions.

- Governance: Shared standards, prompt practices, and review controls that keep content accurate, consistent, compliant, and ready for AI-driven automation.

Together, these foundations form the basis of effective content operations. They connect planning, creation, storage, and delivery into a unified operational model, so content is not only produced but prepared for discovery, reuse, and scalable delivery.

With these foundations in place, teams can streamline handoffs, scale execution with agents, and improve performance as AI reshapes how content is created, delivered, and discovered.

Mastering content discovery in the age of AI

Discovery has changed. Large language models (LLMs) now act as primary interpreters of online content, scanning, summarizing, and selecting the information that ultimately reaches users. In this era, visibility depends less on rankings and more on how clearly content can be interpreted by AI systems. To stay discoverable, organizations must rethink how they create, organize, and maintain content so it can be accurately indexed, cited, and reused by both humans and machines.

This section explores two critical shifts shaping modern discovery:

- How AI has transformed search behavior and why GEO now determines visibility.

- How content must be structured so AI systems can interpret, trust, and surface it consistently.

GEO and changing discovery patterns

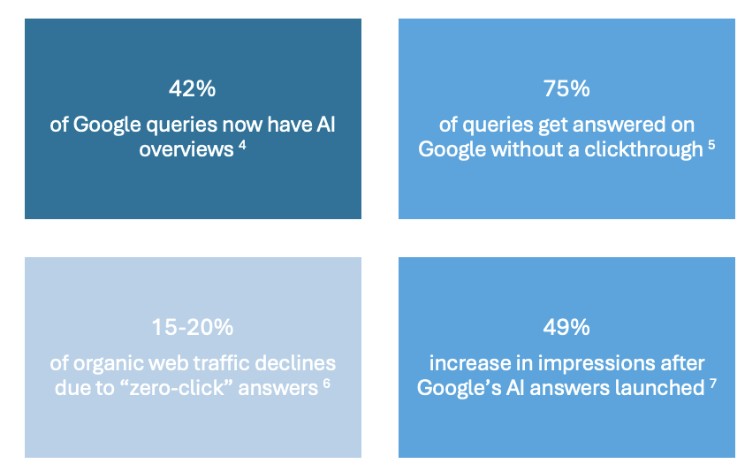

Search has changed more in the last two years than in the previous twenty. AI overviews, zero-click answers, and generative summaries increasingly satisfy users without a visit to a website. Rankings and click-through rates still matter, but they no longer represent the full picture of visibility.

Discovery is also expanding into a new channel: answer engines. These are LLM-powered systems (such as ChatGPT and Perplexity) that generate responses by reading, extracting, and synthesizing information from across the web. For users, that means summaries, comparisons, and recommendations often appear before they ever reach a brand’s site.

Users are no longer scanning results pages for the best link.

From SEO to GEO: how generative discovery works

For marketers, this shift changes the rules of visibility. Content must now be structured, machine-readable, and authoritative enough for AI systems to understand and reuse.

Generative Engine Optimization (GEO) defines the new standard for visibility. While SEO focused on rankings and click-throughs, GEO focuses on how effectively content is interpreted, cited, and included in AI-generated answers.

Many of the practices that improve visibility in answer engines are familiar SEO disciplines: clear structure, strong metadata, clean schema, and content that stays current. What has changed is the operating requirement. Teams now need to keep far more pages consistently structured, maintained, and ready for machine interpretation, all the time.

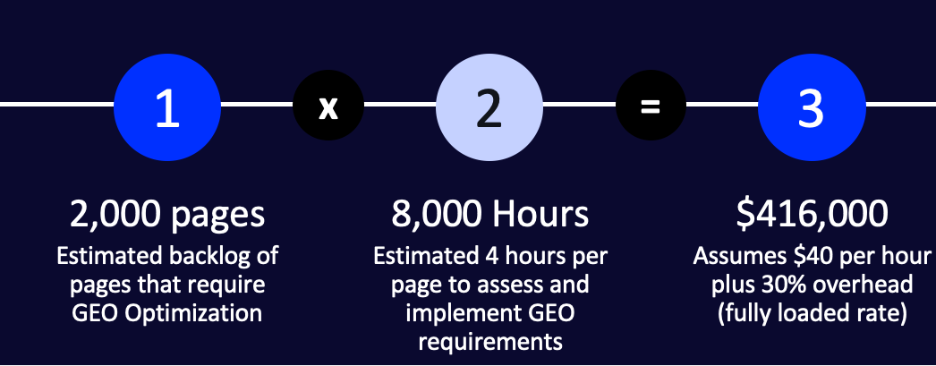

The constraint is the human cost of doing this work manually. At enterprise scale, even manageable backlogs become material investments. For example, GEO-optimizing a backlog of 2,000 pages at an estimated 4 hours per page takes 8,000 hours, or roughly $416,000 in fully loaded labor cost (based on a $40 hourly rate plus 30% overhead).
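The cost estimate above is simple arithmetic: total hours multiplied by a loaded hourly rate ($40 × 1.30 = $52). The minimal sketch below reproduces it so the same model can be rerun with a team's own page counts, hours, and rates; the function name `manual_geo_cost` is hypothetical, and the inputs are the example's assumptions, not benchmarks.

```python
def manual_geo_cost(pages: int, hours_per_page: float,
                    hourly_rate: float, overhead: float) -> tuple[float, float]:
    """Return (total_hours, fully_loaded_cost) for a manual GEO backlog."""
    total_hours = pages * hours_per_page
    loaded_rate = hourly_rate * (1 + overhead)  # e.g. $40 * 1.30 = $52 per hour
    return total_hours, total_hours * loaded_rate


# Figures from the example: 2,000 pages, 4 hours each, $40/hour, 30% overhead.
hours, cost = manual_geo_cost(pages=2_000, hours_per_page=4, hourly_rate=40, overhead=0.30)
print(f"{hours:,} hours, ${cost:,.0f}")  # 8,000 hours, $416,000
```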

Human cost of manual GEO at scale

If your content is not structured and machine-readable, it can lose visibility in the answer engine channel, even if it still ranks in traditional search.

In this environment, websites must now serve two audiences simultaneously:

- Humans who arrive with higher intent to validate answers or complete tasks.

- AI agents that crawl, classify, and extract structured information to reuse in generative responses.

To meet both needs, content must deliver dual value:

| AI Needs | Human Needs |
|---|---|
| Structured for machine parsing | Compelling and clear |
| Consistent metadata | Concise for fast scanning |
| Semantically rich for retrieval | Natural in tone |

GEO exists because AI systems do not browse pages – they scan, extract, and synthesize. This makes structure, metadata, and semantic clarity the new determinants of visibility. Content that cannot be reliably interpreted by AI cannot be reliably surfaced.

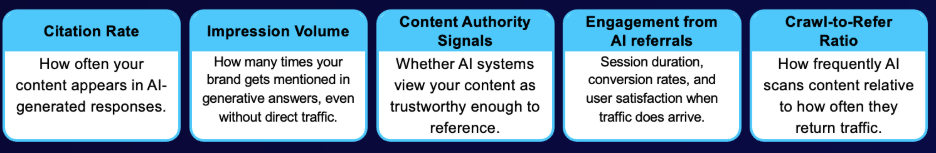

GEO metrics that determine visibility

Traditional SEO metrics are no longer reliable indicators of visibility. New signals matter more in a generative search environment:

These metrics matter because AI systems reward clarity, structure, and semantic richness rather than clicks or rankings.

One of the clearest leading indicators for GEO is the crawl-to-refer ratio, which shows how often AI systems crawl content relative to how often they send traffic back. AI models may scan hundreds to tens of thousands of pages for every visit they refer.

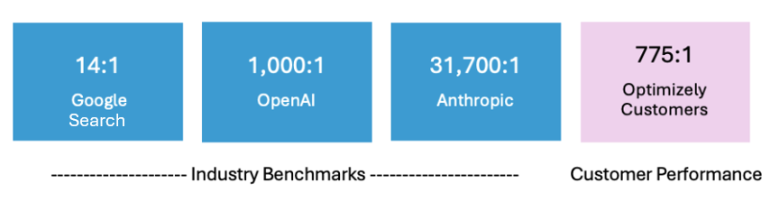

The contrast between platforms is stark. According to Cloudflare data [8], Google operates at roughly a 14:1 crawl-to-refer ratio, meaning it crawls a site about 14 times for every visit it refers back. By comparison, OpenAI exceeds a 1,000:1 ratio, crawling more than a thousand times for every single referral. Marketers must therefore work dramatically harder to earn a single AI referral than a traditional search click. In generative discovery, only the most structured, interpretable content is eligible for reuse. Everything else is crawled but rarely surfaced.

Example: Crawl-to-refer ratios by discovery model (Cloudflare Radar)

The implication is simple:

Only content that is clear, structured, and machine-readable achieves meaningful visibility in the AI era.

Analysis of internal data shows that LLM crawl activity on Optimizely customer sites converts into referrals far more efficiently, with an average crawl-to-refer ratio of 775:1 [14] across answer engines. That is 29% better than the typical OpenAI-crawled site and nearly 40× better than the typical Anthropic-crawled site.

Content structure as the foundation of GEO

If discovery now depends on how well AI systems can interpret content, then structure becomes the foundation of visibility. Generative engines cannot reuse what they cannot parse, so content must be organized in ways that are predictable, machine-readable, and semantically rich.

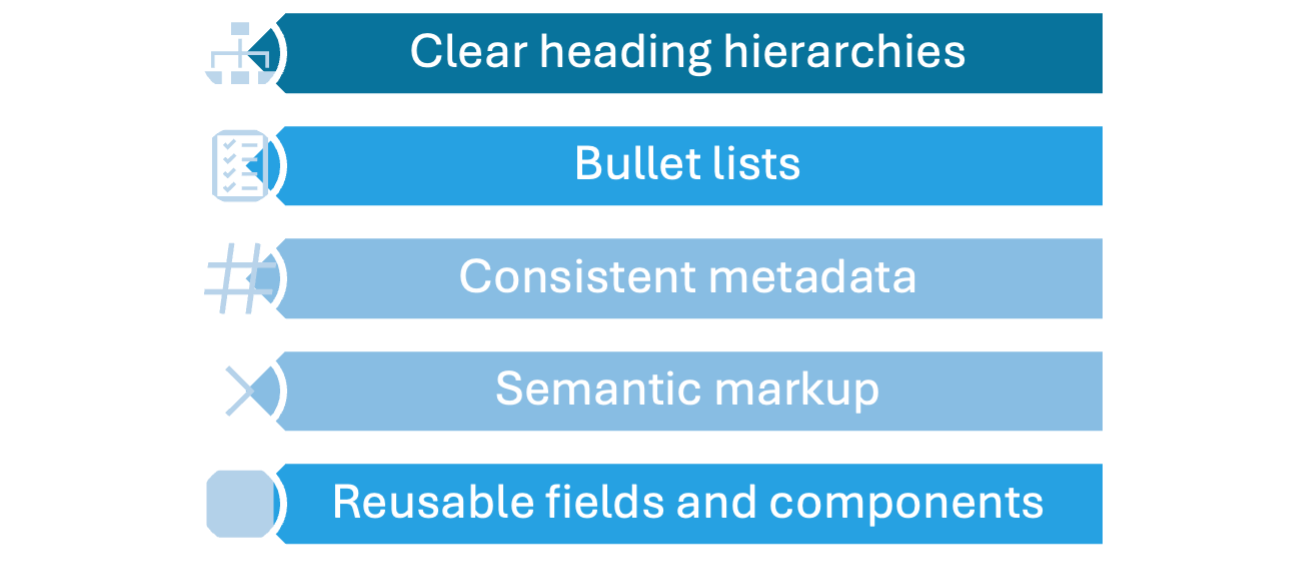

This requires moving away from long, unstructured pages and toward modular content made up of reusable fields and components. Each element should stand on its own, be easy to extract, and follow consistent patterns that AI systems can reliably interpret.

Structured content is content that is organized, formatted, and enriched in ways both humans and AI can understand.

This includes:

Instead of dense paragraphs, information is broken into smaller, purposeful elements that generative engines can scan and recombine into answers. This same structure also improves human comprehension, strengthens trust signals, and enables cleaner omnichannel delivery over time.

Practical patterns for generative engines

AI systems do not infer meaning on their own. They rely on clear structural signals to understand what content means, how it is organized, and when it should be reused. Analyses of LLM behavior show that models consistently favor content that follows predictable patterns, such as:

- Bullet lists that provide extractable facts

- FAQ formats that map cleanly to conversational prompts

- Short, explicit paragraphs with clear claims

- Consistent metadata and schema that reinforce meaning
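FAQ formats and schema work best together when question-and-answer pairs are also exposed as markup. As a minimal sketch, the Python below builds schema.org `FAQPage` markup as JSON-LD from question-and-answer pairs; the helper name `faq_jsonld` and the sample question are hypothetical, and this shows the shape of the markup rather than how any particular CMS or agent generates it.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage markup as JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld([
    ("What is GEO?",
     "Generative Engine Optimization: structuring content so AI systems can interpret, cite, and reuse it."),
])
# The result is embedded in the page inside a JSON-LD script tag.
print('<script type="application/ld+json">')
print(markup)
print("</script>")
```

Because each answer is a discrete field, the same pair can also render as visible on-page FAQ text, keeping the markup and the human-readable content in sync.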

Humans prefer many of the same qualities. Readers engage more deeply with content that is:

- Scannable and easy to navigate

- Clearly labeled and consistently formatted

- Free of duplication and outdated information

- Simple to update and reuse across channels

This alignment is powerful. The structure that improves human comprehension is the same structure that improves machine interpretation.

Why structured content improves clarity, trust, and reach

Traditional web content was designed for humans, not machines. It was written as long, static pages that were difficult to update, hard to reuse, and nearly impossible for AI systems to interpret reliably. In an age where generative models increasingly decide what users see, this approach is no longer sustainable.

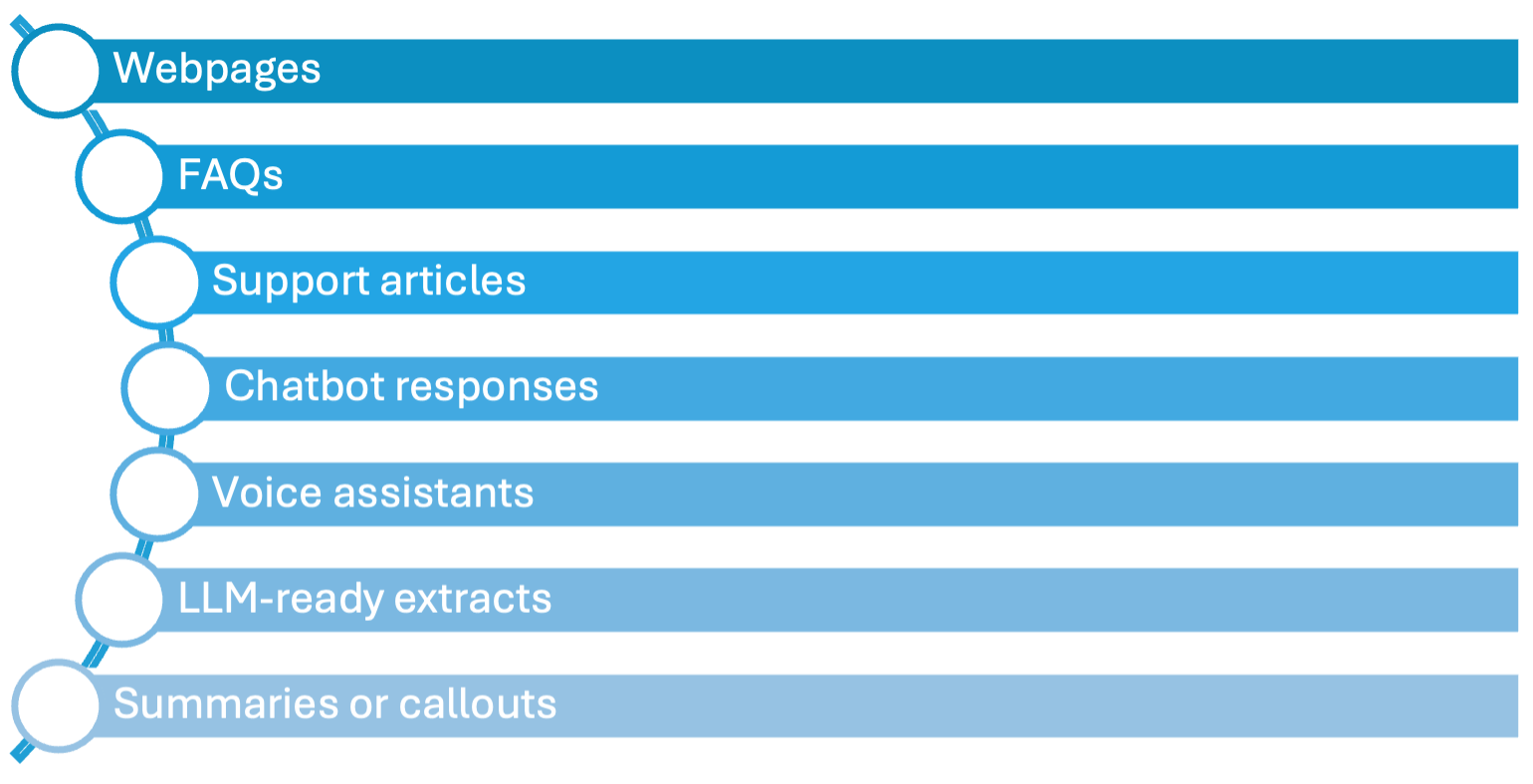

Structured, component-based content replaces monolithic pages with modular building blocks that carry clear meaning, consistent metadata, and predictable formatting. These blocks become a single source of truth that can power webpages, FAQs, support articles, chatbot responses, voice assistants, LLM-ready extracts, and every downstream experience that depends on accurate information.

One source becomes many outputs. As AI systems increasingly consume and reuse content, this is the only scalable way to work.
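The "one source, many outputs" idea can be sketched as a single structured component rendered by several channel-specific functions. In the minimal Python below, `FaqComponent`, its fields, the renderer names, and the sample plan-change answer are all hypothetical placeholders; the point is that every output reads from the same fields, so an update to the source reaches every channel.

```python
from dataclasses import dataclass
from html import escape


@dataclass(frozen=True)
class FaqComponent:
    """Hypothetical reusable component: explicit fields instead of free-form page text."""
    question: str
    answer: str
    product: str  # metadata that supports filtering and retrieval


def to_html(c: FaqComponent) -> str:
    # Web rendering: a semantic heading followed by the answer paragraph.
    return f"<h3>{escape(c.question)}</h3>\n<p>{escape(c.answer)}</p>"


def to_chatbot_reply(c: FaqComponent) -> str:
    # Conversational rendering reuses the approved answer text verbatim.
    return c.answer


def to_llm_extract(c: FaqComponent) -> str:
    # Plain-text extract that keeps the metadata next to the claim.
    return f"Q: {c.question}\nA: {c.answer}\nProduct: {c.product}"


source = FaqComponent(
    question="Can I change my plan mid-contract?",
    answer="Yes. Plan changes take effect at the start of the next billing cycle.",
    product="Mobile plans",
)
for render in (to_html, to_chatbot_reply, to_llm_extract):
    print(render(source), end="\n\n")
```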

This approach does more than make AI’s job easier. It transforms how teams create and maintain content. When creators assemble experiences from reusable components instead of rebuilding pages from scratch, production accelerates, duplication drops, and updates can be made in minutes instead of days.

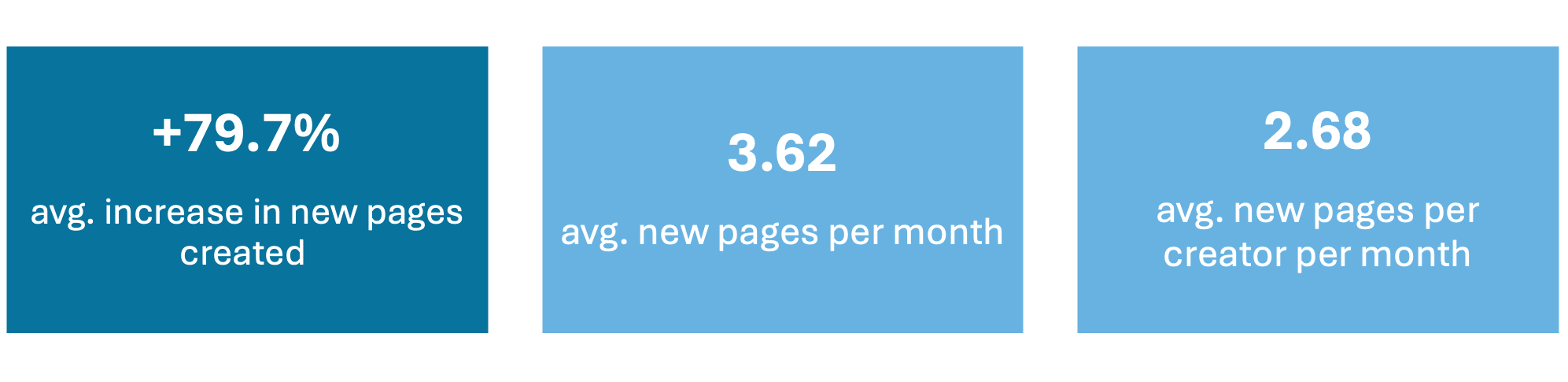

Organizations adopting structured, component-driven workflows inside Optimizely’s Content Management System (CMS) saw:

These gains are the result of cleaner operations: fewer rebuilds, faster assembly, and less time spent tracking down outdated versions.

The composite effect of structured, modular content, then, is both greater efficiency for the teams creating it and greater visibility in the answer engines cataloging it.

This creates a compounding effect:

Clarity improves trust, trust improves visibility, and visibility drives more meaningful engagement.

Content becomes easier to maintain, easier for AI to interpret, and more effective across every channel it supports.

Using AI agents and platform tools to strengthen GEO

Even with strong content structure, achieving GEO excellence at scale is difficult for most teams. Generative engines evolve quickly, best practices shift, and manual audits cannot match the speed at which AI systems crawl and reinterpret content. GEO requires continuous optimization, and this is where AI agents become indispensable. They analyze content the same way generative models do, identifying gaps, inconsistencies, and structural issues that limit discoverability.

Optimizely’s GEO Intelligence Suite provides a set of specialized agents that guide, evaluate, and structure content for how generative engines read and reuse information.

- LLM Index Agent - Generates an llms.txt file that highlights priority pages and recommended crawling paths, ensuring the most authoritative content is scanned first.

- GEO Recommendations Agent - Assesses whether a page is GEO-ready by inspecting hierarchy, metadata, semantic cues, and extractability. It offers clear, targeted guidance on what to improve and why it matters for visibility.

- Schema + Answers Agent - Structures and connects content elements across pages, ensuring that headings, summaries, FAQs, and markup work together so AI systems can understand relationships and reuse content in generative answers.

- GEO Analytics Dashboard - The GEO dashboard in Optimizely Reporting enables you to view AI platform traffic and optimize your site’s performance. Using the data from GEO Analytics, you can track AI traffic trends, identify frequent AI agents, and discover popular webpages among AI platforms. This lets you refine your content for AI optimization and leverage high-performing pages to improve overall engagement.
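For readers unfamiliar with llms.txt: it is a public proposal (published at llmstxt.org) for a Markdown file at a site's root containing an H1 site name, a blockquote summary, and H2 sections that list links with short notes. The minimal sketch below assembles such a file from a prioritized page list. It is not how the LLM Index Agent works; the `Page` fields, the numeric `priority`, and the example.com URLs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Page:
    title: str
    url: str
    summary: str
    section: str   # H2 grouping, e.g. "Products" or "Support"
    priority: int  # hypothetical ranking: lower number = more authoritative


def build_llms_txt(site_name: str, site_summary: str, pages: list[Page]) -> str:
    """Assemble an llms.txt-style Markdown file, listing priority pages first."""
    lines = [f"# {site_name}", "", f"> {site_summary}", ""]
    sections: dict[str, list[Page]] = {}
    # Sort by priority so higher-priority pages (and their sections) come first.
    for page in sorted(pages, key=lambda p: p.priority):
        sections.setdefault(page.section, []).append(page)
    for section, items in sections.items():
        lines += [f"## {section}", ""]
        lines += [f"- [{p.title}]({p.url}): {p.summary}" for p in items]
        lines.append("")
    return "\n".join(lines)


pages = [
    Page("Pricing", "https://example.com/pricing", "Plans and prices", "Products", 2),
    Page("Product overview", "https://example.com/product", "What the product does", "Products", 1),
    Page("Support FAQ", "https://example.com/faq", "Common questions", "Support", 3),
]
print(build_llms_txt("Example Co", "Hypothetical company used for illustration.", pages))
```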

Together, these agents reveal how AI systems perceive a site, where meaning is lost, and which structural improvements will deliver the strongest gains. This reflects the core principle of GEO: optimize for clarity, predictability, and machine relevance.

The effect is transformative. Teams no longer guess what to change or rely on sporadic audits. They receive continuous, AI-driven recommendations and apply them through structured components, creating a consistent site-wide architecture that improves crawl efficiency, citation likelihood, and the quality of referral traffic.

AI agents do not replace human judgment. They elevate it by providing the precision and pattern recognition needed to succeed in a landscape where interpretability determines visibility.

The impact of GEO

The impact of getting GEO right is immediate and measurable. When content is structured in ways AI systems can interpret, they return to it more often, reuse it more confidently, and surface it in more answers.

Across Optimizely’s customer base, AI systems generated more than 2.67 million referrals in just three months (June - August 2025). The clearer the content structure, the more frequently it was cited and resurfaced.

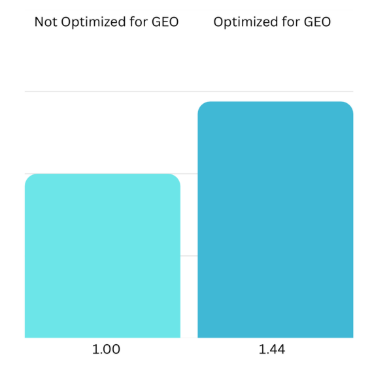

Structural improvements magnify this effect. Our analysis shows that organizations that applied GEO-driven fixes identified by AI agents saw a 44.3% improvement in crawl-to-refer ratios, meaning generative engines required fewer crawls to understand and reuse their content. As interpretability increases, citations rise, and the traffic that arrives is significantly more intentional and conversion-ready.
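A crawl-to-refer ratio can be estimated from server or analytics logs by counting AI crawler hits and answer-engine referrals per platform. The minimal sketch below assumes log records with `user_agent` and `referrer` keys; GPTBot, ClaudeBot, and PerplexityBot are published crawler names, but the referrer-host mapping is an assumption, and production analytics should also verify crawler IP ranges because user-agent strings can be spoofed. Because a lower ratio is better, an improvement is the relative drop in crawls per referral: a 44.3% improvement would take a hypothetical 1,000:1 site to about 557:1.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed mappings for illustration; real deployments should maintain these lists.
CRAWLER_TOKENS = {"GPTBot": "OpenAI", "ClaudeBot": "Anthropic", "PerplexityBot": "Perplexity"}
REFERRER_HOSTS = {"chatgpt.com": "OpenAI", "claude.ai": "Anthropic",
                  "perplexity.ai": "Perplexity", "www.perplexity.ai": "Perplexity"}


def crawl_to_refer(log: list[dict[str, str]]) -> dict[str, float]:
    """Crawls per referral for each platform that sent at least one referral."""
    crawls: Counter = Counter()
    referrals: Counter = Counter()
    for record in log:
        for token, platform in CRAWLER_TOKENS.items():
            if token in record.get("user_agent", ""):
                crawls[platform] += 1
        host = urlparse(record.get("referrer", "")).netloc
        if host in REFERRER_HOSTS:
            referrals[REFERRER_HOSTS[host]] += 1
    return {p: crawls[p] / referrals[p] for p in crawls if referrals[p] > 0}


def improvement(before: float, after: float) -> float:
    """Relative reduction in crawls needed per referral (lower ratio is better)."""
    return (before - after) / before


sample_log = (
    [{"user_agent": "Mozilla/5.0 (compatible; GPTBot/1.1)", "referrer": ""}] * 20
    + [{"user_agent": "Mozilla/5.0", "referrer": "https://chatgpt.com/"}] * 2
)
print(crawl_to_refer(sample_log))       # {'OpenAI': 10.0}
print(f"{improvement(1000, 557):.1%}")  # 44.3%
```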

This efficiency compounds downstream.

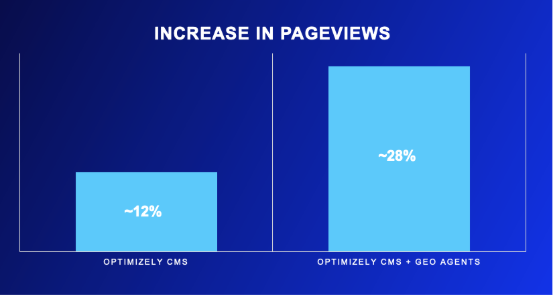

Across implementations:

- Companies see 12% annual pageview growth when adopting structured content practices.

- When GEO-aligned improvements are added, growth rises to 28% annually.

These gains illustrate a simple truth:

When AI can understand your content, it is far more likely to trust it, reuse it, and send qualified visitors back to you.

GEO turns structure into visibility and visibility into performance. It is no longer a technical enhancement, but a strategic advantage in how audiences discover and engage with brands.

A global telecommunications provider modernized key product pages by strengthening metadata, improving internal linking, and adopting clearer content structure. The impact was significant: +61.4% year-over-year pageview growth driven largely by more qualified, AI-referred traffic. This outcome reflects a broader pattern seen across structured-content implementations. When pages are easier for AI systems to parse and interpret, they earn stronger visibility and generate higher-value traffic over time. Structure becomes a direct lever for performance.

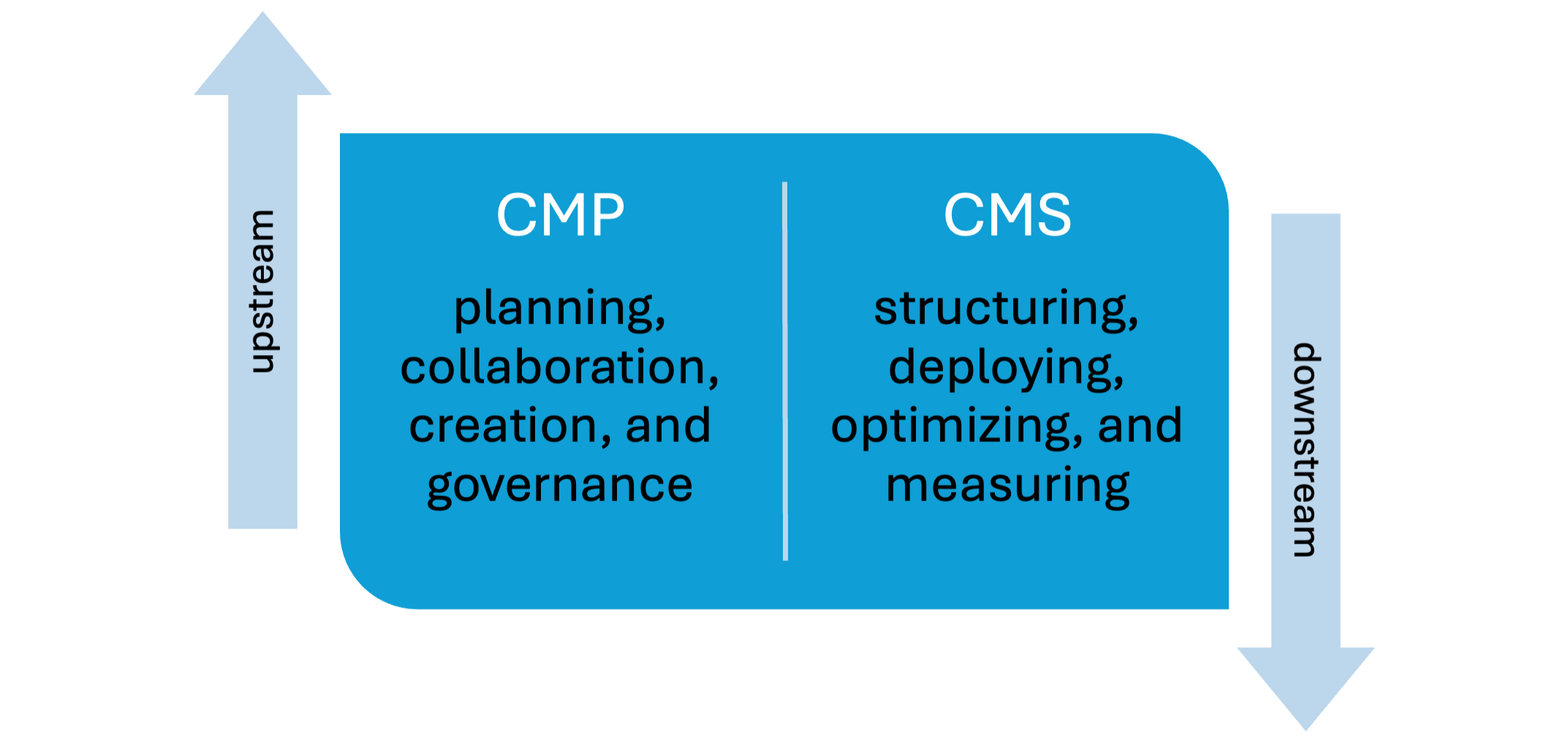

Strengthening the foundations of content operations

Discovery may determine who sees your content, but the real leverage comes from the systems and workflows that shape how content is created, structured, and maintained long before it reaches an AI model. Most of the friction that undermines GEO performance lives upstream. Siloed teams slow production, inconsistent workflows limit reuse, and scattered assets make it difficult for both humans and AI systems to find and interpret information. When these foundations are weak, even well-optimized content struggles to achieve visibility.

To scale in the AI era, organizations must strengthen the operational foundations that allow content to move efficiently, remain consistent, and be reused across every channel.

This section examines two shifts that determine operational readiness:

- How improving workflows, collaboration, and shared context accelerates content production and reduces duplication.

- How disciplined organization of assets ensures content can be reliably found, reused, and interpreted by both humans and AI systems.

Improving workflows and collaboration

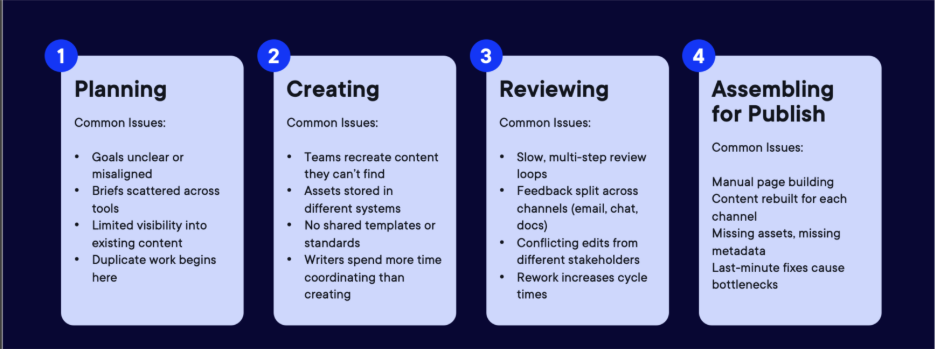

Content creation remains the slowest and most constrained part of marketing. Demand continues to rise across every channel, yet the workflows that support planning, creating, reviewing, and assembling content have not evolved to match this scale. Teams spend more time moving work forward than creating it, and the gap between business demand and operational capacity continues to widen.

The data tells a clear story:

- 71% of marketers must now produce significantly more content each year.

- 59% of marketing leaders say their teams lack the bandwidth to meet current demand.

- 64% cite fragmented workflows as a major barrier to production.

These pressures create friction at every stage. Reviews take longer, teams recreate content they cannot find, and even small updates require multiple handoffs. Delivery slows, production costs rise, and teams struggle to support new channels or emerging opportunities.

This bottleneck is not a reflection of skill or effort. It is the result of workflows built for a linear, page-based model that no longer aligns with how content must be created, reused, and maintained. In a landscape where content powers every interaction and must remain accurate across both human and AI experiences, traditional production models cannot keep pace.

The cost of silos and scattered teams

As content demands grow, marketing organizations have evolved into a collection of highly specialized teams. Brand, digital, content, product, demand generation, and regional groups each own a different part of the customer experience. But because these teams operate in different tools and parts of the organization, they often work in parallel rather than as a coordinated system.

This creates organizational drag beyond day-to-day production. Even when workflows exist, teams struggle to share context, align priorities, or see what others are producing. Planning happens in pockets. Insights remain local. Decisions are made without visibility into related work.

The complexity multiplies inside businesses with multiple markets, business units, or brands. Each group develops its own processes, templates, and approval paths. The result is duplicated work, diverging messages, and inconsistent quality across touchpoints. What should function as a shared content ecosystem becomes a set of disconnected practices that are difficult to scale or govern.

When teams operate in isolation, the drag compounds across the entire content operation:

- Collaboration breaks down: Teams work in parallel instead of together, trapping context in siloed tools and conversations and making coordination slow.

- Consistency deteriorates: Without shared standards or templates, messages drift, quality varies, and content becomes harder for both humans and AI to trust.

- Clarity erodes: Teams lack visibility into what others are producing, leading to guesswork, rework, and duplicated effort.

- Impact declines: Output increases without corresponding results. Content is produced but not reused, campaigns slow, and teams struggle to operate at the speed the business requires.

How shared workflows increase speed and quality

When silos slow work down, the instinct is often to add more tools, more meetings, or more checkpoints. But the real unlock is creating a shared operating model that gives every team visibility into plans, clarity around responsibilities, and the ability to move quickly without sacrificing quality. This is the foundation of a “team-of-teams” approach, where collaboration becomes coordinated rather than improvised.

Shared workflows transform how organizations operate by enabling three core principles:

1. Shared consciousness: everyone sees the same plan

In siloed environments, work is planned in isolation. Teams only see their part of the puzzle, leading to duplicated efforts and misaligned launches.

Shared workflows change this by giving teams unified visibility into:

- Upcoming initiatives

- Ownership and dependencies

- How work across teams connects

This shared context reduces duplication, aligns messaging, and ensures insights move freely across the organization, creating a single operational truth instead of scattered pockets of information.

2. Empowered decision-making: work moves with less friction

When processes are standardized and expectations are clear, teams can make faster, higher-quality decisions without waiting on disconnected approvals.

Shared workflows define:

- The path work should follow

- Where handoffs occur

- What “ready for review” actually means
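One way to make these three definitions explicit is a small state machine: allowed transitions encode the path and the handoffs, and a required-fields check encodes "ready for review." The minimal Python sketch below illustrates the idea only; the stage names, the `READY_FOR_REVIEW` fields, and the `advance` helper are hypothetical, not the configuration of any specific platform.

```python
from enum import Enum


class Stage(Enum):
    BRIEF = "brief"
    DRAFT = "draft"
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    PUBLISHED = "published"


# The path work should follow: each stage lists the stages it may hand off to.
TRANSITIONS = {
    Stage.BRIEF: {Stage.DRAFT},
    Stage.DRAFT: {Stage.IN_REVIEW},
    Stage.IN_REVIEW: {Stage.APPROVED, Stage.DRAFT},  # approve, or send back with notes
    Stage.APPROVED: {Stage.PUBLISHED},
    Stage.PUBLISHED: set(),
}

# What "ready for review" means, written down as required fields (hypothetical).
READY_FOR_REVIEW = ("owner", "target_channel", "meta_description", "primary_keyword")


def advance(item: dict, target: Stage) -> Stage:
    """Move a work item to the target stage, enforcing the agreed path and review gate."""
    current = item["stage"]
    if target not in TRANSITIONS[current]:
        raise ValueError(f"Cannot move from {current.value} to {target.value}")
    if target is Stage.IN_REVIEW:
        missing = [name for name in READY_FOR_REVIEW if not item.get(name)]
        if missing:
            raise ValueError("Not ready for review; missing: " + ", ".join(missing))
    item["stage"] = target
    return target


page = {"stage": Stage.DRAFT, "owner": "Regional web team", "target_channel": "web",
        "meta_description": "Plan comparison for small businesses",
        "primary_keyword": "business plans"}
print(advance(page, Stage.IN_REVIEW))  # Stage.IN_REVIEW
```

Because the rules live in data rather than in people's heads, every team sees the same path, and a reviewer never receives a draft that is missing agreed inputs.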

This eliminates the review-cycle slowdowns that plague most content operations. Teams no longer chase approvals or manually assemble context. They move confidently because they understand the broader picture, not just their part of it.

3. Agility at scale: collaboration becomes structured, not ad hoc

Shared workflows don’t constrain creativity; they protect it from operational friction. With templates, briefs, reusable components, and consistent processes, teams can:

- Produce content faster

- Maintain consistency across markets and channels

- Reuse high-value assets instead of starting from zero

This turns collaboration into a strength rather than a tax. Work scales more predictably, and teams spend their time creating value, not coordinating around it.

Why shared workflows matter: speed, output, quality

Organizations that adopt shared workflows consistently outperform those relying on fragmented processes. When teams align around shared visibility, coordinated execution, and flexible collaboration, the operational gains compound.

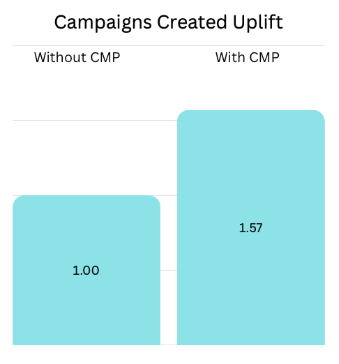

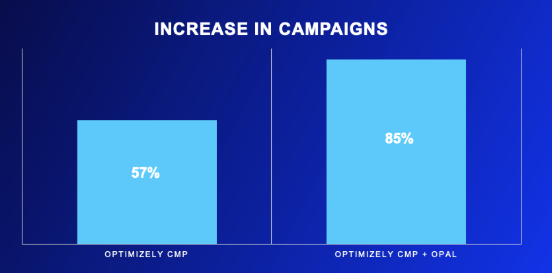

Organizations that unify workflows using the Optimizely Content Marketing Platform (CMP) increase campaign velocity by 57%, demonstrating how shared processes translate directly into higher throughput and more predictable delivery.

Shared workflows increase both speed and output because they:

- Remove unnecessary handoffs

- Reduce duplication and rework

- Improve clarity around ownership and progress

- Make it easier to reuse assets, templates, and insights

Organizing content for reuse

Most enterprises now manage thousands, often millions, of digital files spread across drives, legacy systems, regional folders, agency repositories, and tools that do not connect. As content volumes grow, this fragmentation becomes harder to manage and even harder to navigate.

Forbes reports that 60% of B2B content sits unused, not because it lacks value, but because teams cannot find it, trust it, or identify the latest version.

Unstructured or poorly governed assets undermine efficiency across the content operation:

- Teams cannot find what they need. Hours disappear searching, digging through folders, or asking colleagues to resend files.

- Duplication becomes unavoidable. Without a single source of truth, teams recreate work that already exists, increasing both cost and inconsistency.

- Brand and compliance risks rise. Outdated or conflicting versions circulate across regions and channels, and issues are often caught too late.

- AI systems cannot use your content. Assets without metadata, structure, or clear relationships become invisible to generative models, limiting discoverability and reuse.

The effect is cumulative. Content libraries expand, but their usefulness and efficiency decline. Even well-designed workflows cannot compensate for disorganized content, because the materials themselves are not prepared for reuse, governance, or AI-driven interpretation.

Why a DAM is essential for modern content operations

Once the scale and impact of scattered, unstructured assets become clear, most organizations reach the same inflection point: there is no way to move faster without bringing order to the content foundation. That begins with a disciplined system of record for every asset.

A Digital Asset Management (DAM) system provides the structure that fragmented libraries lack. Instead of assets living across tools, the DAM becomes the central, governed source of truth that supports every team and every channel.

A modern DAM ensures:

- A single authoritative version of every asset.

- Consistent metadata including titles, rights, product mapping, and lifecycle status.

- Clear relationships between assets and their variations.

- Governance controls that prevent duplication and maintain version clarity.

- Schema alignment that makes assets usable across systems and channels.

This discipline matters because both humans and AI systems depend on clarity to make decisions. When generative models encounter:

- Duplicate content → diluted trust

- Outdated content → brand and compliance risk

- Untagged or inconsistently tagged content → invisible to AI

In each case, models cannot confidently interpret, reuse, or surface your assets. The same challenges apply to content teams navigating rapid updates, multiple markets, and growing volumes of work.

A well-governed DAM changes this entirely. Assets become discoverable, trustworthy, and ready for assembly across channels. Teams can reuse what already exists instead of rebuilding it. AI systems can understand and reference assets reliably, improving discoverability and enabling automation at scale.

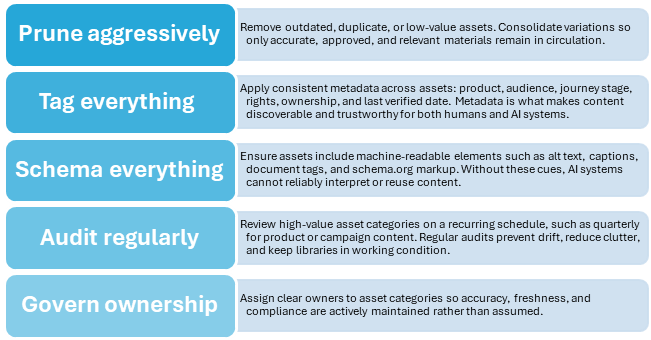

Principles for maintaining a high-quality asset library

Maintaining a well-structured, high-performing DAM is not a one-time project. It requires ongoing discipline to ensure assets remain usable, consistent, and ready for both human and AI consumption.

Five practices make the biggest impact:

Cleaning your content house may not feel glamorous, but it is foundational. AI systems cannot use what they cannot understand, and teams cannot reuse what they cannot find. A disciplined DAM turns content from a growing liability into an asset that compounds value across every channel.

Preparing assets for reuse across channels and AI systems

Once assets are structured, tagged, and governed, the next step is ensuring they can be reused efficiently across channels, teams, and AI-driven experiences. Reuse is where content operations gain real leverage. Instead of rebuilding assets for every campaign, market, or format, teams assemble high-quality experiences from trusted components that already exist.

For reuse to scale, assets must be:

Searchable - Teams and AI systems must be able to locate the right asset instantly. Metadata, schema, and controlled vocabularies make discovery reliable rather than guesswork.

Interpretable - AI models depend on semantic cues such as alt text, captions, tags, and structured fields to understand what an asset represents and when it should be retrieved.

Channel-ready - Reusable assets need formats, variants, rights information, and context that support deployment across web, mobile, social, paid media, product content, and emerging AI surfaces without rework.

Contextually connected - Relationships between assets, such as source files, localized versions, cropped variants, and campaign usage, must be clear so teams and AI systems know which version is authoritative.

When these conditions are met, reuse becomes natural. Production accelerates, duplication declines, and AI systems can assemble, recommend, and repurpose content with far greater accuracy.

Using AI agents to keep asset libraries reusable

At enterprise scale, reuse breaks down for a simple reason: asset libraries grow faster than teams can govern them. AI agents help by taking on the repeatable work required to keep libraries clean, consistent, and reliable as volume grows.

AI agents can support asset readiness by:

- Enriching metadata at scale (titles, descriptions, keywords, product mappings).

- Adding semantic context (summaries, usage notes, intended audience, journey stage).

- Generating channel-ready variants (crops, format adaptations, supporting fields).

- Normalizing taxonomy so tagging stays consistent and search results stay predictable.

- Supporting localization by translating or tailoring supporting fields while preserving the canonical asset.

- Flagging governance risks early (duplicates, outdated versions, missing rights, inconsistent naming).
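Two of these tasks, normalizing taxonomy and flagging governance risks, have a deterministic core that an AI agent would build on. The minimal sketch below maps tags onto a controlled vocabulary and flags exact duplicates (via SHA-256 content hashes), missing tags, and missing or expired rights. The `SYNONYMS` vocabulary, the `Asset` fields, and the helper names are hypothetical; exact hashing only catches byte-identical files, so crops or resized variants would need perceptual hashing or model-based similarity instead.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

# Hypothetical controlled vocabulary: variant spellings map to one canonical tag.
SYNONYMS = {"e-commerce": "ecommerce", "ecommerce": "ecommerce", "b2b": "B2B"}


@dataclass
class Asset:
    asset_id: str
    content: bytes
    tags: list[str] = field(default_factory=list)
    rights_expiry: Optional[date] = None


def normalize_tags(tags: list[str]) -> list[str]:
    """Map tags onto the controlled vocabulary and drop duplicates, keeping order."""
    result: list[str] = []
    for tag in tags:
        cleaned = tag.strip()
        canonical = SYNONYMS.get(cleaned.lower(), cleaned)
        if canonical not in result:
            result.append(canonical)
    return result


def governance_flags(assets: list[Asset], today: date) -> list[tuple[str, str]]:
    """Return (asset_id, issue) pairs for duplicates, missing tags, and rights problems."""
    flags = []
    first_seen: dict[str, str] = {}  # content hash -> id of first asset with that content
    for asset in assets:
        digest = hashlib.sha256(asset.content).hexdigest()
        if digest in first_seen:
            flags.append((asset.asset_id, f"duplicate of {first_seen[digest]}"))
        else:
            first_seen[digest] = asset.asset_id
        if not asset.tags:
            flags.append((asset.asset_id, "missing tags"))
        if asset.rights_expiry is None:
            flags.append((asset.asset_id, "missing rights information"))
        elif asset.rights_expiry < today:
            flags.append((asset.asset_id, "rights expired"))
    return flags


library = [
    Asset("hero-01", b"<image bytes>", normalize_tags(["B2B", "e-commerce"]), date(2030, 1, 1)),
    Asset("hero-01-copy", b"<image bytes>", ["b2b"], date(2020, 1, 1)),
    Asset("logo-old", b"<other bytes>"),
]
for asset_id, issue in governance_flags(library, today=date(2025, 6, 1)):
    print(asset_id, "->", issue)
```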

The result is a library that stays searchable, interpretable, and ready for reuse, even as asset volume increases.

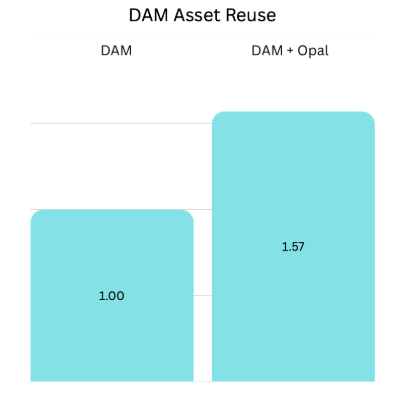

Using AI agents to clean, enrich, and govern assets increases median reuse by 57%.

Why reuse matters: operational and AI benefits

The difference between unmanaged content and governed, reusable assets is dramatic for both human workflows and AI-driven discovery.

A well-governed DAM dramatically increases asset reuse. While industry data suggests the average piece of content is reused fewer than 5 times (with Forrester noting up to 70% goes unused), Optimizely customers see a typical asset reused 46x, effectively reducing the cost-per-content-item by over 90%.
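The cost reduction follows from amortization. Assuming production cost is fixed and "cost per content item" means cost per use, the cost per use is production cost divided by uses, so the production cost cancels and the reduction is 1 − (baseline uses ÷ new uses). The minimal sketch below (function names hypothetical) shows that with a baseline of 4 uses the reduction is about 91%; at exactly 5 uses it is about 89%, so the "over 90%" figure depends on the baseline being below 5.

```python
def cost_per_use(production_cost: float, uses: int) -> float:
    """Amortized cost each time an asset is used."""
    return production_cost / uses


def reduction(baseline_uses: int, new_uses: int) -> float:
    """Fractional drop in cost per use when the same asset is reused more often."""
    # 1 - (c / new_uses) / (c / baseline_uses) simplifies to 1 - baseline_uses / new_uses.
    return 1 - baseline_uses / new_uses


print(cost_per_use(920, 46))       # 20.0
print(f"{reduction(4, 46):.1%}")   # 91.3%
print(f"{reduction(5, 46):.1%}")   # 89.1%
```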

This level of reuse is only possible when assets are consistently structured, tagged, governed, and accessible across teams. It transforms content from a recurring cost into a compounding asset that improves both operational efficiency and AI readiness. When assets can be reliably found, trusted, and reused, every part of the content operation becomes faster, more consistent, and more scalable.

RAKBANK, a leading bank in the UAE, streamlined its entire content operation with Optimizely’s integrated DAM. By centralizing all brand-approved assets in one place and connecting the DAM directly to its CMS, the marketing team eliminated duplication, accelerated updates, and repurposed assets across channels with ease. Smart renditions and in-DAM editing allowed the team to take a single creative file and adapt it instantly, reducing production time and increasing asset reuse across campaigns. Together with the wider Optimizely suite, this increased content engagement by 37%, leads by 12%, and page views by 6%.

AI as a force multiplier for content operations

Assets and workflows give content operations stability. AI is what turns that stability into scale. When teams have clean structure, governed libraries, and shared ways of working, AI can take on the repeatable work that slows production and introduces inconsistency. The impact is clear:

Teams using improved workflows plus agentic AI with Optimizely Opal increase campaign volume by 85%.

This section focuses on three shifts that make AI scalable in content operations:

- Specialized agents that accelerate research, ideation, drafting, enrichment, and QA while keeping humans in control of judgment.
- Agent-powered workflows that orchestrate work end to end and remove the manual handoffs that create delays and inconsistency.
- Governed prompt assets that are stored, versioned, owned, and maintained with expiry and compliance controls to ensure reuse at scale.

Using specialized agents for content creation

Specialized agents help teams scale content work without turning every request into a custom project. Instead of using one general AI prompt to do everything, teams deploy purpose-built agents for specific steps in the pipeline such as research, drafting, enrichment, and QA. This improves consistency and makes work easier to govern because each agent has a narrow job, clear inputs, and repeatable standards.

A practical rule: use agents for repeatable execution and pattern work. Use humans for strategy, final judgment, and brand-defining decisions.

Where specialized agents create the most value

| Agent category | Best used for | Outputs to expect | Example agent patterns |
|---|---|---|---|
| Research and insight agents | Synthesizing inputs that normally take time to gather across tools and stakeholders | Structured brief, key claims, supporting evidence, open questions to validate | Competitive Insights, Industry Marketer |
| Ideation and variation agents | Expanding options quickly and generating controlled variations against a single brief | Angles, headlines, outlines, testable variants tied to a goal | Ideation, Variation development |
| Drafting and production agents | Producing first drafts and channel adaptations, with humans editing for positioning, voice, and accuracy | Draft plus reusable snippets by channel | Email creation, Keyword-driven copy |
| Enrichment and structure agents | Converting messy inputs into structured, reusable building blocks | Modular content blocks (FAQs, summaries, metadata), content models ready for reuse | Content model creation |
| Quality and compliance agents | Running repeatable checks humans often skip under pressure | Pass/fail flags, issues found, fix recommendations | Web accessibility evaluation |
| Performance and optimization agents | Turning performance signals into clear actions for stakeholders | What changed, why it matters, what to do next | Traffic analysis, Heatmap analysis, Chart summary |

When to use specialized agents vs. human-led work

Specialized agents deliver the most value when work is repeatable and the goal is speed with consistency. They should take on execution steps that can be standardized and reviewed quickly, while humans remain accountable for strategy, judgment, and risk. Use the checklist below to decide where agents should lead and where humans must stay in control.

| Use agents when the work is… | Keep it human-led when the work requires… |
|---|---|
| Repeatable execution (research synthesis, first drafts, enrichment, tagging, QA checks) | Strategy, prioritization, and tradeoffs |
| High-volume or time-sensitive (many pages, many variants, many markets) | Positioning, messaging, and narrative judgment |
| Format-driven (FAQs, summaries, metadata, templates, variations) | Sensitive claims, compliance decisions, legal risk |
| Rules-based and easy to validate (standards checks, accessibility checks, policy checks) | Final editorial approval and accountability |
| Producing a strong starting point (briefs, outlines, draft packages) | Creative direction and brand-defining work |

Rule of thumb: agents accelerate execution, humans approve the decisions that carry risk.

Impact of specialized agents on speed and quality

Specialized agents increase output by compressing the slowest parts of production without lowering standards. They take on repeatable execution such as gathering inputs, drafting first versions, reformatting for channels, and running consistent checks. Because outputs are standardized (briefs, drafts, metadata, QA reports), teams spend less time reworking and coordinating and more time refining and publishing.

Quality holds because the baseline becomes more consistent. Humans stay accountable for positioning, accuracy, and final approval, but they start from stronger inputs rather than rebuilding the same work repeatedly.

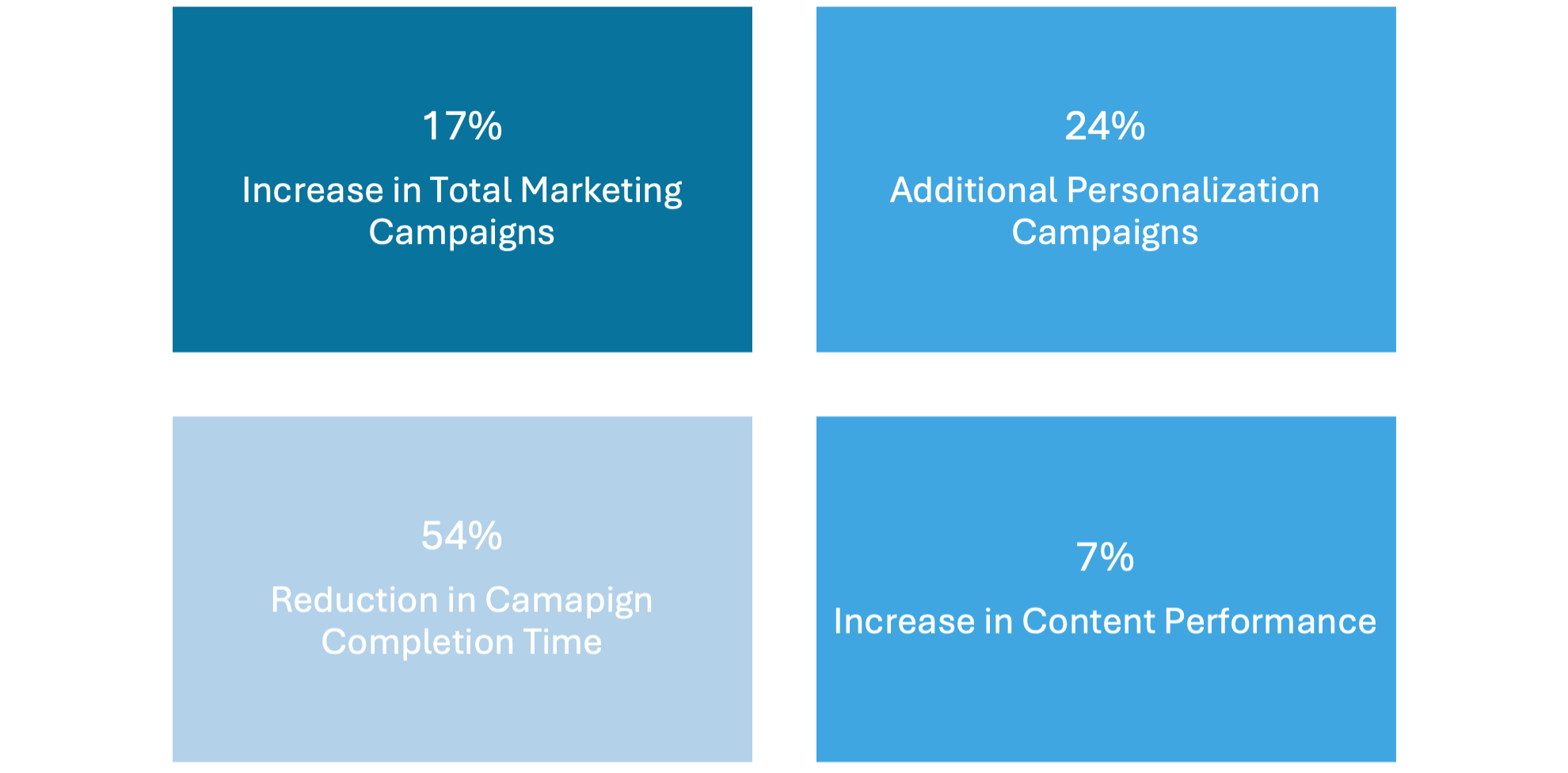

In Optimizely’s Opal AI Benchmark Report, teams saw higher output, improved performance, and faster delivery at the same time.

Together, these results show the role of agents in content operations: more shipped work, fewer delays, and more consistent quality control through repeatable checks and human oversight.

A global business services company adopted Optimizely Opal to standardize campaign production and reduce manual coordination. The result was 71% more campaigns, alongside a 36% reduction in campaign cycle time, driven by replacing repetitive steps with agent-supported workflows that kept execution consistent from brief to launch.

Designing workflows powered by AI agents

Agentic workflows move AI from “helping with a task” to running a repeatable process. Instead of re-briefing an assistant each time and stitching outputs together manually, teams define a workflow that coordinates steps, carries shared context, and delivers a consistent result every time it runs.

In practice, an agentic workflow connects specialized agents across the pipeline, such as research, briefing, drafting, enrichment, and QA, and manages how work moves between them. It can run steps in sequence when one depends on the last, or in parallel when work can be completed independently, then consolidate outputs into a single publish-ready package.
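The orchestration pattern described above (shared context, sequential steps where one depends on another, parallel steps where work is independent, then consolidation) can be sketched with Python's `asyncio`. The agent functions here are hypothetical stand-ins that return placeholder strings. A real workflow would call models or services at each step, and this is not how Optimizely Opal is implemented.

```python
import asyncio

# Hypothetical agents: each is a narrow async step that reads the same shared context.
async def research(ctx: dict) -> str:
    return f"research notes for {ctx['topic']}"

async def draft(ctx: dict, notes: str) -> str:
    return f"draft on {ctx['topic']} in a {ctx['tone']} tone, based on: {notes}"

async def enrich(ctx: dict, text: str) -> dict:
    return {"summary": text[:40], "tags": [ctx["topic"]]}

async def qa_check(ctx: dict, text: str) -> list[str]:
    # Flag any banned terms that appear in the draft.
    return [term for term in ctx["banned_terms"] if term in text.lower()]

async def run_workflow(ctx: dict) -> dict:
    # Sequential: drafting depends on the research output.
    notes = await research(ctx)
    text = await draft(ctx, notes)
    # Parallel: enrichment and QA are independent, so run them concurrently.
    metadata, issues = await asyncio.gather(enrich(ctx, text), qa_check(ctx, text))
    # Consolidate everything into one package for review or publishing.
    return {"draft": text, "metadata": metadata, "qa_issues": issues, "ready": not issues}

context = {"topic": "pricing", "tone": "plain", "banned_terms": ["guaranteed"]}
package = asyncio.run(run_workflow(context))
print(package["ready"])  # True
```

Passing one `context` object to every step is the code-level equivalent of running every step "against the same brief, brand rules, prompts, and required standards."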

Because every step runs against the same brief, brand rules, prompts, and required standards, teams reduce handoffs, cut coordination overhead, and increase throughput without losing consistency or control.

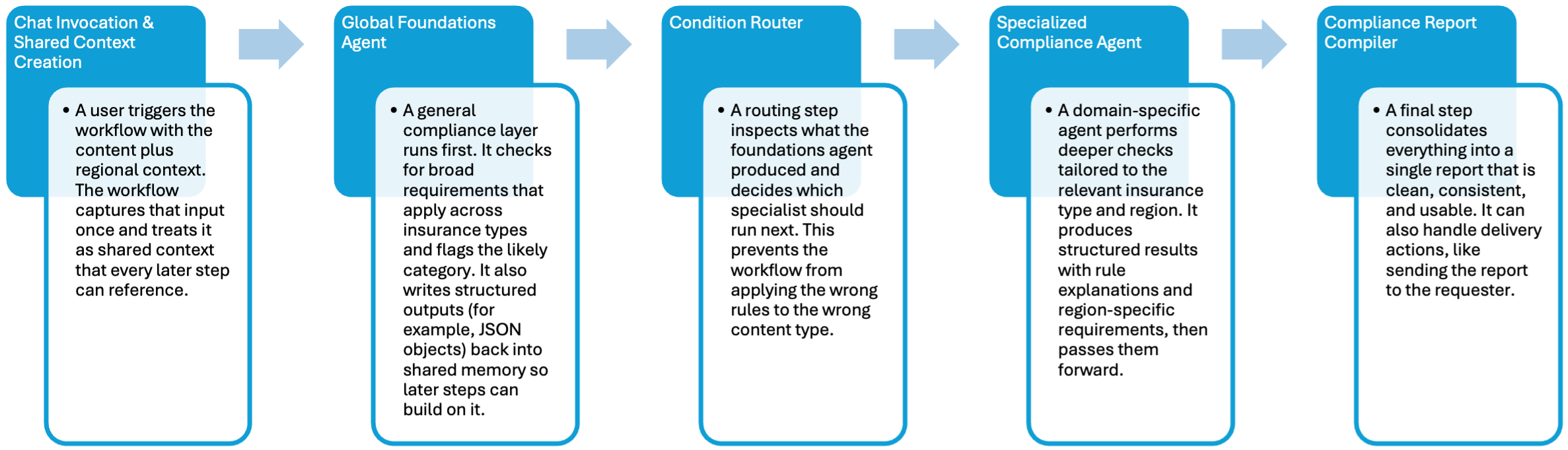

Agentic workflow example: compliance review

In most organizations, compliance sits outside the day-to-day content workflow. Reviews happen late, often after content has already been drafted, designed, localized, or assembled for publishing. This timing creates predictable inefficiency, introducing waiting, rework, and late-stage changes that slow the entire pipeline.

Compliance is also well suited to AI because it is rules-based, repeatable, and high-risk when errors slip through. But it is rarely a single check. It requires multiple steps, specialist logic, and consistent handoffs. That makes it ideal for an agentic workflow that coordinates the process end to end rather than treating compliance as a one-off task.

The workflow below shows the five stages that turn a complex review into a repeatable sequence.

Why this workflow works

- Shared context prevents drift. Every agent is anchored to the same inputs and constraints, keeping decisions consistent from start to finish.
- Global checks first, then specialized checks, reduces errors. Baseline rules catch common issues first, then specialist checks handle regional and domain nuance.
- Routing avoids wasted work. The workflow runs only the checks that match the content type and region, instead of applying broad rules everywhere.
- Compilation creates a usable deliverable. Outputs are consolidated into one clear report so teams do not have to stitch together fragments.
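The principles listed above (global checks first, routing to specialist checks, one compiled report) can be illustrated in a short Python sketch. This is not the five-stage workflow itself. Every rule, content type, and region below is a hypothetical example, and real compliance rules would come from legal and regulatory teams.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Content:
    text: str
    content_type: str  # hypothetical values, e.g. "email", "web_page"
    region: str        # hypothetical values, e.g. "UK", "US"

Check = Callable[[Content], list[str]]

# Global baseline rule applied to everything (hypothetical example).
def no_absolute_claims(c: Content) -> list[str]:
    return ["absolute claim: 'guaranteed'"] if "guaranteed" in c.text.lower() else []

# Specialist rules, applied only where they are relevant (hypothetical examples).
def uk_risk_warning(c: Content) -> list[str]:
    return [] if "capital at risk" in c.text.lower() else ["UK: missing risk warning"]

def email_unsubscribe(c: Content) -> list[str]:
    return [] if "unsubscribe" in c.text.lower() else ["email: missing unsubscribe link"]

GLOBAL_CHECKS: list[Check] = [no_absolute_claims]
SPECIALIST_CHECKS: dict[tuple[str, str], list[Check]] = {
    ("region", "UK"): [uk_risk_warning],
    ("type", "email"): [email_unsubscribe],
}

def route(c: Content) -> list[Check]:
    """Select only the specialist checks that match this content's region and type."""
    return (SPECIALIST_CHECKS.get(("region", c.region), [])
            + SPECIALIST_CHECKS.get(("type", c.content_type), []))

def compliance_review(c: Content) -> dict:
    report: dict = {"global": [], "specialist": []}
    for check in GLOBAL_CHECKS:   # baseline rules first
        report["global"].extend(check(c))
    for check in route(c):        # then only the routed specialist checks
        report["specialist"].extend(check(c))
    report["passed"] = not (report["global"] or report["specialist"])
    return report                 # one compiled report, not fragments

email = Content("Returns guaranteed. Unsubscribe any time.", "email", "UK")
print(compliance_review(email))
```

Keeping rules in a routing table rather than hard-coding them per piece of content is what lets the same review run consistently as new regions or formats are added.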

Human oversight for agentic workflows

Human oversight is how organizations keep agentic workflows fast and reliable without losing control. It is the set of checkpoints that determines when agents can run independently, when work must pause for review, and how exceptions are handled. As more of the content pipeline becomes automated, this oversight is what protects accuracy, brand integrity, and compliance while still preserving speed and scale.

Four common oversight models describe how humans and agents work together:

- Agent-assisted: Agents accelerate execution while humans retain control of decisions and final outputs. Best for drafting, summarizing, enrichment suggestions, and early QA signals.
- Human-in-the-loop: Agents complete a step, then pause for review or approval before continuing. Best for regulated content, brand-critical messaging, and higher-risk claims.
- Human-on-the-loop: Agents run autonomously, with humans monitoring and intervening only when thresholds are breached or flags appear. Best when most work is routine but exceptions matter.
- Human-out-of-the-loop: Agents run end to end without intervention. Best for low-risk, highly repeatable tasks like metadata updates, tagging, internal categorization, and routine reporting.

In practice, mature teams mix these models across a workflow, matching oversight to risk and increasing automation as standards, guardrails, and confidence improve.
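One way to make "matching oversight to risk" explicit is to encode it as a small policy that each workflow step is checked against. The Python sketch below maps a step's risk level and routineness to one of the four models. The three risk levels, the `humans_own_output` flag, and the order of the rules are assumptions made for illustration; a real policy would be set by the team that owns the workflow.

```python
from enum import Enum

class Oversight(Enum):
    AGENT_ASSISTED = "agent-assisted"
    HUMAN_IN_THE_LOOP = "human-in-the-loop"
    HUMAN_ON_THE_LOOP = "human-on-the-loop"
    HUMAN_OUT_OF_THE_LOOP = "human-out-of-the-loop"

RISK_LEVELS = ("low", "medium", "high")  # hypothetical scale

def choose_oversight(risk: str, routine: bool, humans_own_output: bool = False) -> Oversight:
    """Map one workflow step to an oversight model (a hypothetical policy)."""
    if risk not in RISK_LEVELS:
        raise ValueError(f"unknown risk level: {risk!r}")
    if risk == "high":
        # Regulated content, brand-critical messaging, higher-risk claims: pause for approval.
        return Oversight.HUMAN_IN_THE_LOOP
    if humans_own_output:
        # Drafting and summarizing: agents accelerate, humans keep the final output.
        return Oversight.AGENT_ASSISTED
    if risk == "low" and routine:
        # Metadata updates, tagging, routine reporting: run end to end.
        return Oversight.HUMAN_OUT_OF_THE_LOOP
    # Mostly routine work where exceptions still matter: monitor and intervene on flags.
    return Oversight.HUMAN_ON_THE_LOOP

steps = {
    "tag assets": choose_oversight("low", routine=True),
    "draft blog post": choose_oversight("medium", routine=False, humans_own_output=True),
    "publish regulated claim": choose_oversight("high", routine=False),
    "localize product pages": choose_oversight("medium", routine=True),
}
for step, model in steps.items():
    print(f"{step}: {model.value}")
```

Because the policy is explicit, "increasing automation as confidence improves" becomes a reviewable change to one function rather than an informal shift in habits.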

Changing the economics of growth

Agentic workflows change what limits marketing scale. For many teams, growth is no longer constrained by ideas or ambition but by the operational cost of moving content from request to publish. Most teams try to scale by adding headcount, adding more fragmented tools, or pushing people to work faster, but these approaches break down as volume increases. The real slowdown is coordination: re-briefing, handoffs, reviews, context loss, and late-stage rework.

When AI is embedded into repeatable workflows, that coordination tax drops. Context and standards carry through every step, with escalation to humans only when judgment, risk, or final approval is required.

What this unlocks

- Faster cycle times by reducing handoffs, re-briefing, and back-and-forth clarification.
- More consistent quality across teams, regions, and channels because the same standards run every time.
- Less rework and fewer late-stage surprises through earlier checks and clearer traceability.
- Higher scalability as volume increases without proportional increases in coordination effort.
- Stronger reuse of work and institutional knowledge because processes and outputs become repeatable, not reinvented.

A large insurance company used Optimizely’s agentic compliance review workflow to automate early compliance checks and cut late-stage rework. The number of compliance reviews completed more than doubled (+137%), while turnaround time per review fell by 73%, speeding handoffs across the pipeline. The result was faster delivery without sacrificing oversight, because issues were caught earlier and resolved consistently instead of stalling content just before publishing.

Treating prompts as governed content assets

AI output is only as strong as the instructions and context behind it. Prompts provide this guidance by defining what a model should produce and how it should behave. They shape how the task is interpreted, which sources are trusted, what tone and structure are used, and which constraints ensure accuracy and compliance.

This is why prompt quality is an operational concern. The strongest prompts make intent clear, set constraints, provide examples of what “good” looks like, anchor claims to approved sources, and define success criteria. When those elements are present, outputs become more consistent, easier to review, and far more reliable to reuse across teams and workflows.

A simple way to standardize prompt quality is to use a repeatable structure. One example is the RACE framework (Role, Action, Context, Expectations), which helps teams write prompts that produce predictable outputs across use cases.

| Element | What it does | Example |
|---|---|---|
| Role | Define who the AI should act as. | You are a marketing strategist specializing in customer segmentation |
| Action | Explain the task you want completed. | Develop a customer segmentation strategy |
| Context | Provide the information needed to make the output relevant. | The company sells premium fitness equipment online, targeting health-conscious consumers in the UK. The main goals are to increase customer loyalty and improve targeted marketing for personalized email campaigns |
| Expectations | Set format and quality guidelines. | The strategy should segment customers based on demographics (age, income, location), purchase behaviour, and engagement levels. Include 3-4 customer segments with details on each segment's characteristics, marketing messages that will resonate with them, and preferred communication channels |

RACE works because it makes prompts structured and comparable, which is essential when many teams need to generate content against shared standards rather than individual improvisation.
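Because RACE has four fixed parts, it maps naturally onto a template that refuses to render when a part is missing. The Python sketch below is one possible encoding. The `RacePrompt` class, the field order, and the "Task:/Context:/Expectations:" labels are assumptions, and the example values are shortened from the table.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RacePrompt:
    role: str
    action: str
    context: str
    expectations: str

    def render(self) -> str:
        """Assemble the four RACE elements into one prompt, always in the same order."""
        for name in ("role", "action", "context", "expectations"):
            if not getattr(self, name).strip():
                raise ValueError(f"RACE prompt is missing its {name}")
        return (
            f"{self.role}.\n\n"
            f"Task: {self.action}.\n\n"
            f"Context: {self.context}.\n\n"
            f"Expectations: {self.expectations}."
        )

prompt = RacePrompt(
    role="You are a marketing strategist specializing in customer segmentation",
    action="Develop a customer segmentation strategy",
    context="The company sells premium fitness equipment online to health-conscious UK consumers",
    expectations="Include 3-4 segments with characteristics, key messages, and preferred channels",
)
print(prompt.render())
```

Rejecting incomplete prompts at render time is what keeps prompts "structured and comparable": every team's output starts from the same four parts.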

The organizational problem

Prompts are now part of how content gets produced, but most organizations still treat them as disposable. They are created ad hoc across teams and tools, with no shared standard for what “good” looks like. The result is inconsistent outputs, variable quality, and avoidable rework even when teams are solving the same problem.

This creates two compounding risks. Prompt knowledge gets lost, with the best instructions trapped in Slack threads, personal docs, or individual habits, so teams rebuild proven prompts from scratch. Prompts also drift. As brand guidance, products, and regulations change, old prompts quietly produce off-brand tone, inconsistent structure, or missing required language, often discovered only late in the review cycle.

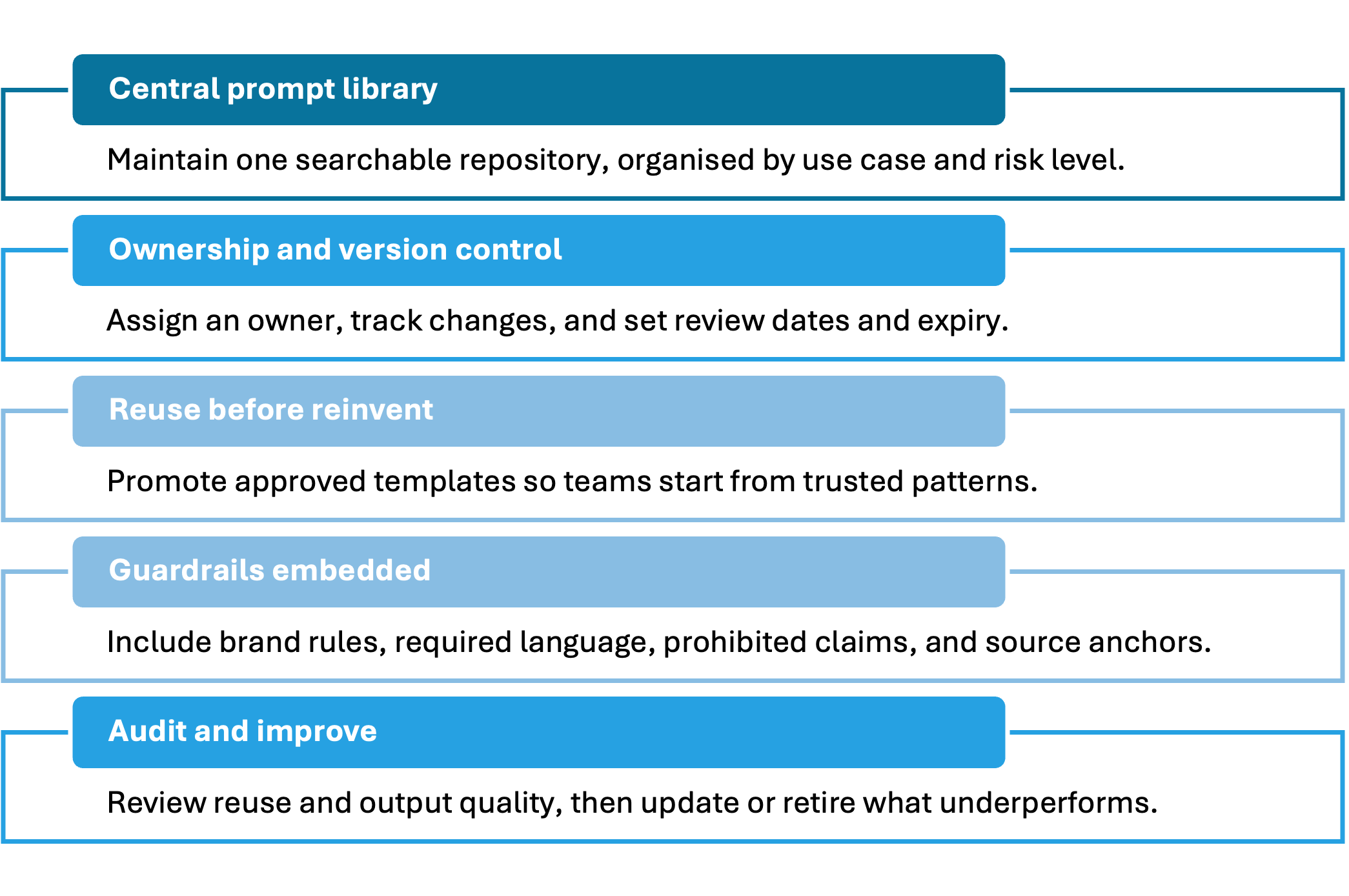

If prompts shape quality, consistency, and compliance, they cannot remain informal. They need to be managed like any other critical content asset with ownership, versioning, review, and lifecycle controls.

The shift: treat prompts as content assets

Prompts are now operational infrastructure. Without governance, they drift off-brand, fall out of date, and force teams to recreate instructions that already exist. Treat prompts like any other high-impact content asset: store them in a shared library, standardize templates for repeatable use cases, and manage them with clear ownership, version control, review, approval, and lifecycle controls such as expiry. Prompts should be anchored to the same inputs that protect quality at scale, including brand guidance, policies, and approved source content.

Making prompt quality repeatable

A shared prompt library with clear ownership, version control, and review or expiry cycles ensures that what scales is best practice. As AI adoption grows, governed prompts are the difference between AI accelerating the organization and AI amplifying inconsistency.
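The governance controls named above (ownership, versioning, approval, expiry) can be modeled as a small data structure. The Python sketch below is a hypothetical in-memory library, not a description of any product feature. Its assumed policy is that every change creates a new unapproved version, and that lookups return only the latest version that is both approved and unexpired.

```python
from dataclasses import dataclass, replace
from datetime import date

@dataclass(frozen=True)
class PromptAsset:
    prompt_id: str
    owner: str
    version: int
    text: str
    approved: bool
    expires: date

class PromptLibrary:
    """Hypothetical shared library: every change is a new version with an owner and expiry."""

    def __init__(self) -> None:
        self._versions: dict[str, list[PromptAsset]] = {}

    def publish(self, prompt_id: str, owner: str, text: str, expires: date) -> PromptAsset:
        # New versions start unapproved so they must pass review before use.
        history = self._versions.setdefault(prompt_id, [])
        asset = PromptAsset(prompt_id, owner, len(history) + 1, text, approved=False, expires=expires)
        history.append(asset)
        return asset

    def approve(self, prompt_id: str) -> PromptAsset:
        history = self._versions[prompt_id]
        history[-1] = replace(history[-1], approved=True)
        return history[-1]

    def get(self, prompt_id: str, today: date) -> PromptAsset:
        """Return the latest approved, unexpired version, or fail loudly."""
        for asset in reversed(self._versions.get(prompt_id, [])):
            if asset.approved and asset.expires >= today:
                return asset
        raise LookupError(f"no approved, current version of {prompt_id!r}")

lib = PromptLibrary()
lib.publish("email-subject", "lifecycle-team", "Write 5 subject lines...", date(2026, 6, 30))
lib.approve("email-subject")
lib.publish("email-subject", "lifecycle-team", "Write 5 subject lines in the new voice...", date(2026, 12, 31))
print(lib.get("email-subject", today=date(2026, 3, 1)).version)  # 1: version 2 awaits review
```

Failing loudly on an expired prompt, rather than silently using it, is what stops the "quiet drift" described earlier from reaching published content.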

Although formal prompt libraries are still emerging for most enterprises, early behaviors inside Optimizely show what happens when prompts are easy to access and reuse. Across our customer base, this has already created a large-scale, living prompt library.

When prompts are treated as shared assets, teams stop reinventing and start compounding.

A business services company treated prompts as governed assets by standardizing prompt templates and centralizing them in a shared library with clear ownership. In a single month, teams created 53 custom agents, shifting AI use from ad hoc prompting to repeatable workflows that produce consistent outputs across teams.

Unlocking the impact of unified content architecture

Teams are working to get structure, workflows, and AI right. What still holds them back are the seams between systems.

In most enterprises, content moves from plan to publish through exports, copy-paste handoffs, and asset libraries that drift out of date. Each step strips context, slows feedback, and forces teams to recreate work that should be reusable.

AI makes those seams more expensive. It performs reliably when workflows are coordinated and assets are governed with clear structure and standards. Without that foundation, outputs drift, teams spend time re-briefing and correcting inconsistencies, and automation creates churn instead of momentum.

A unified architecture removes those breaks by sharing structure, metadata, and governance across the stack. In practice, this means:

- When content changes, it updates everywhere
- When performance insights surface, they reach creators immediately
- When new channels or formats emerge, content adapts without reinvention
- When AI crawls your site, it finds structured, authoritative, machine-readable content
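Two of the outcomes above, content that updates everywhere and content that is machine-readable, follow from the same design choice: channels reference one canonical item instead of holding copies. The Python sketch below illustrates that idea with a hypothetical in-memory store. It renders one FAQ item as web HTML, as plain email text, and as schema.org `FAQPage` JSON-LD. The store, IDs, and return-policy text are invented for illustration.

```python
import html
import json

# Single source of truth: channels store item IDs, never copies (hypothetical store).
CONTENT = {
    "faq-returns": {
        "question": "How long do I have to return an item?",
        "answer": "You can return unused items within 30 days.",
    }
}

def render_web(item_id: str) -> str:
    item = CONTENT[item_id]
    return f"<h3>{html.escape(item['question'])}</h3>\n<p>{html.escape(item['answer'])}</p>"

def render_email(item_id: str) -> str:
    item = CONTENT[item_id]
    return f"{item['question']}\n{item['answer']}"

def render_json_ld(item_ids: list[str]) -> str:
    """Machine-readable schema.org FAQPage markup built from the same canonical items."""
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": CONTENT[i]["question"],
                "acceptedAnswer": {"@type": "Answer", "text": CONTENT[i]["answer"]},
            }
            for i in item_ids
        ],
    }, indent=2)

# One edit to the canonical item...
CONTENT["faq-returns"]["answer"] = "You can return unused items within 60 days."
# ...is reflected in every channel the next time it renders.
print(render_email("faq-returns"))
```

Because no channel keeps its own copy, there is nothing to fall out of sync, and the structured markup for AI crawlers is generated from the same governed source as the human-facing page.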

The compounding effect of unified architecture

The biggest gains appear when shared workflows are embedded directly into content delivery. When planning, collaboration, and production sit alongside publishing and optimization, teams stop losing time and context at handoffs. Standards stay consistent, feedback loops shorten, and improvements reach live experiences faster. The result is better-performing content, because the same brief, structure, and quality controls carry through from request to publish.

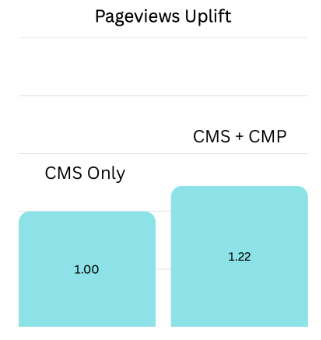

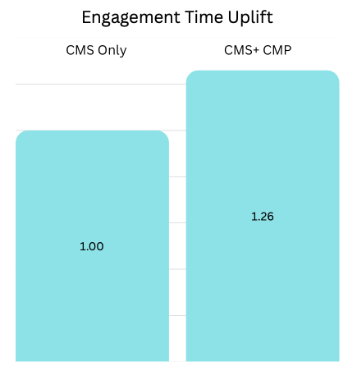

Across customers, teams with integrated workflows and delivery see 22% more pageviews versus a CMS-only baseline, alongside 26% higher engagement time.

The takeaway is simple: when workflows live where content is delivered, performance improves because quality compounds and iteration accelerates, not because teams simply produce more.

Closing thoughts

In the AI era, content performance is increasingly determined by the system behind it. Visibility is no longer won purely through better copy or more output, but through content that is structured for interpretation, organized for reuse, and governed for consistency across teams, channels, and AI-driven discovery. When those foundations are in place, workflows move faster, collaboration becomes easier, and AI agents can scale execution without sacrificing quality or control.

The next step is to connect these capabilities into a unified operating model. Organizations that pull structure, shared workflows, and governed AI inputs into one connected system will compound improvements over time, turning every piece of content into a reusable asset, every workflow into a repeatable engine, and every performance signal into a faster feedback loop.

Build the system that makes content scale.

Content Marketing Institute. Enterprise Content Marketing Benchmarks, Budgets, and Trends. 2025.

Demand Exchange. 4 Key Insights from the 2024 State of B2B Content Marketing. 2024.

Fast Company. The Rising Problem of Rogue Content. 2024.

CXOTrail. New UK Study Confirms AI Overviews Appear on 42% of Google Searches. 2024.

WP SEO AI. How Do Google AI Overviews Change Search Results? 2024.

Bain & Company. Consumer Reliance on AI Search Results Signals a New Era of Marketing. 2024.

BrightEdge. One Year of Google AI Overviews: BrightEdge Data Reveals Changes in Search Usage. 2024.

Cloudflare. Cloudflare Radar: AI Insights. 2024.

Content Marketing Institute. Enterprise Content Marketing Benchmarks, Budgets, and Trends. 2024.

Gartner. CMO Spend Survey 2024: Budget Allocation, Priorities, and Challenges. 2024.

Forrester. Global Marketing Operations Survey. 2024.

Forbes. 60% of B2B Content Sits Unused: Here’s the Fix. 2016.

Optimizely. The 2025 Optimizely Opal AI Benchmark Report. 2025.

Analysis of anonymized Optimizely customer data measuring average crawl-to-refer ratios across AI answer engines between June and August 2025.

Analysis of anonymized Optimizely CMS customer data from January to June 2025.

Analysis of total AI crawl requests across all Optimizely customers between June and August 2025.

This improvement was calculated by comparing crawl-to-refer ratios for customers actively using GEO agents with those not using GEO agents.

Annual page view growth was calculated using a compound annual growth rate (CAGR) method, comparing first-year and last-year page view totals for customers segmented by Opal and GEO optimization usage.

Analysis of Optimizely customer campaign velocity, comparing the number of campaigns created in their first year versus their most recent year.

DAM asset reuse was analyzed by comparing reuse rates for Opal-enabled customers and customers not using Opal.

CMP campaign growth was calculated by comparing campaign volumes from a customer’s first year to their most recent year, segmented by Opal enablement.

Customer agent usage was analyzed using Optimizely tracking data from September 2025 onward.

Page-view and engagement-time uplifts were calculated by comparing customers using CMS only with those using both CMS and CMP.