A/B Testing All-Star: Jason from Avalara

Cara Harshman

Name: Jason Burt

Company: Avalara

Role: Web Marketing Analyst

Fun fact about sales tax: Even if I came up with one, the law changes so much that it would probably be different tomorrow.

Jason says, “Testing has dramatically changed our company culture as far as decision-making goes. The conversation used to be, ‘this is the way the site is going to be.’ Now the conversation goes, ‘here are some ideas to test.’”

Optimizely: What’s your testing process like?

Jason: Since we started testing in December, I feel like our process has been about the same. My background is in physics and economics, so I’m really used to the scientific method and working with statistical sample sizes. We try to test everything as much as possible. We’ll run tests on pages that receive the most traffic. If we find any UI improvements, we’ll implement them across all the pages that don’t get as much traffic. Likewise, if we see certain photos or elements that have high conversions on our site, we’ll test them again in an email. Testing has dramatically changed our company culture as far as decision-making goes. The conversation used to be, “this is the way the site is going to be.” Now the conversation goes, “here are some ideas to test.”

How often do you run experiments?

Every few weeks we start a new test, and we aim to run each one for two weeks minimum. We try to test everything, and it starts when we come together and have an idea for something we want to test. I’m a purist: I only do 50/50, A or B, not multivariate. Testing is a continual process. There are a lot of cool things going on in behavioral economics and UI right now, so it’s a great time to be getting into testing.
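For readers wondering how a minimum test length relates to sample size, here is a rough sketch using the standard normal-approximation formula for comparing two conversion rates in a 50/50 test. The function names, the baseline rate, the lift, and the traffic figure are all illustrative assumptions; none of them come from Avalara or from Optimizely.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variation of a 50/50 A/B test.

    Uses the normal-approximation formula for two proportions.
    All inputs are assumptions chosen by whoever plans the test.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(n_per_variation, daily_visitors):
    """Days needed when daily traffic is split evenly between A and B."""
    return ceil(2 * n_per_variation / daily_visitors)


# Hypothetical planning numbers: 5% baseline conversion, hoping to detect a 20% lift.
n = visitors_per_variation(baseline_rate=0.05, relative_lift=0.20)
print(n, "visitors per variation;", days_to_run(n, daily_visitors=1500), "days")
```

Smaller expected lifts or lower-traffic pages push the required duration up quickly, which is one reason to start on the pages that receive the most traffic.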

What were some of the initial tests you ran on avalara.com?

Our site is orange and we love orange, but we also want to balance it with other colors to create contrast and make the user experience better. Working within our color palette, we ran A/B tests with a number of color combinations on call-to-action buttons. We tested reactions to red, green, orange, and blue. We ended up going with a blue button because it improved conversions significantly, by 20%. By year end, just that button being blue will probably mean a couple thousand more dollars in revenue.
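To make "significantly improved conversions" concrete, here is a minimal sketch of a two-proportion z-test, one common way to check whether a lift like this is larger than chance alone would explain. The visitor and conversion counts are hypothetical, picked only so the relative lift comes out to 20%; they are not Avalara's data, and this is not necessarily the calculation Optimizely performs.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of variation A (original) and B (variant).

    Returns (z statistic, two-sided p-value, relative lift of B over A).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the standard normal
    relative_lift = (rate_b - rate_a) / rate_a
    return z, p_value, relative_lift


# Hypothetical counts: 500 of 10,000 visitors convert on A, 600 of 10,000 on B.
z, p_value, lift = two_proportion_z_test(500, 10_000, 600, 10_000)
print(f"lift={lift:.1%}, z={z:.2f}, p={p_value:.4f}")
```

With much smaller samples, the same 20% lift could easily fail to reach significance, so the visitor counts matter as much as the lift itself.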

Which tools have been most effective for your A/B testing?

The cross-browser feature makes our lives much easier. I can see how our pages load across all different browsers and immediately make changes if something is loading incorrectly.

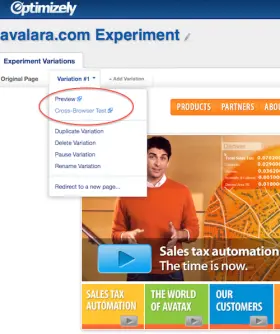

In Optimizely’s experiment dashboard, select “Cross-Browser Test” to see how the experiment looks across multiple browsers.

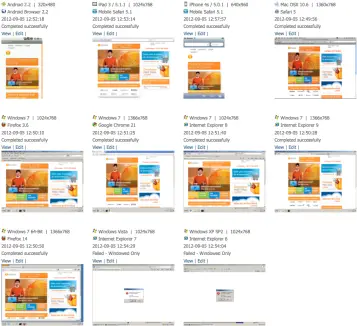

Then, you can see preview thumbnails of your site in each browser, including mobile and tablet browsers.

Most companies still browse with IE. One week, something on our site was loading improperly in certain versions of IE and it played into our conversion rates. Though it might appear to be minor, by year end that could be a 2-5% drop in business, which is very significant. That’s one of the reasons why Optimizely is awesome. We are constantly testing our page loads and can quickly see whether something is loading correctly; you can do it all in one spot.

Case Study: Minute surprises in requesting “business email” versus “email”

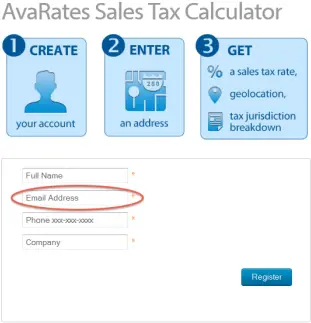

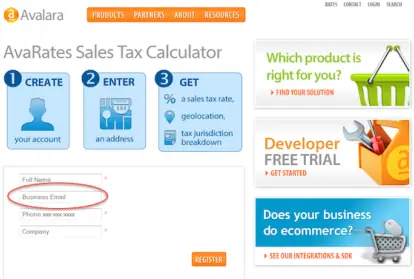

Background: An external firm had suggested changing the prompt on an input form field from “Email” to “Business Email.” The thought was that this change would increase the number of domain-specific registrations that we receive.

Goal: We wanted to test whether there was a higher return of domain-specific emails, like a business email. If you get that domain-specific email, you get more information back, like the company name, industry and such.

Hypothesis: I didn’t think that the “business email” variation would have that large an impact. Maybe a CTA would, but just changing the type of email seemed bizarre to me. It’s so minute.

Solution: With Optimizely I changed the text on the placeholder in an input form field and tested the new variation against the original.

Original:

Variant:

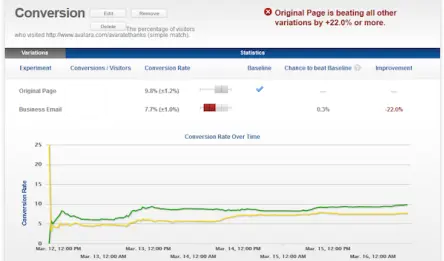

Results:

When we examined the results, we saw that the conversion rate with “business email” dropped 0.6%.
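A figure like "dropped 0.6%" can be read two ways: as a drop of 0.6 percentage points, or as a 0.6% relative decline from the original rate. The interview does not say which was meant, so the sketch below only shows how far apart the two readings are. The 5% baseline is a hypothetical number, not Avalara's actual conversion rate.

```python
def after_absolute_drop(baseline_rate, drop_points):
    """New rate if the drop is measured in percentage points."""
    return baseline_rate - drop_points / 100


def after_relative_drop(baseline_rate, drop_percent):
    """New rate if the drop is a percentage of the baseline rate."""
    return baseline_rate * (1 - drop_percent / 100)


baseline = 0.05  # hypothetical 5% conversion rate on the original form

print(f"absolute reading: {after_absolute_drop(baseline, 0.6):.4f}")
print(f"relative reading: {after_relative_drop(baseline, 0.6):.4f}")
```

Under the absolute reading, the hypothetical form loses more than a tenth of its conversions; under the relative reading, the change is barely visible. Reporting which one is meant avoids that ambiguity.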

Conclusions: Just this one word in an input field could be a couple hundred thousand dollars at the end of the year. We’re a SaaS company, so we know that while conversions are nice for lead generation, deals are what matter at the end of the day. It turns out that the extra data that comes with a business email gave no improvement in lead quality. Ultimately, it was not worth it to make this change. With testing, we are able to check which recommendations from outside firms are helping or hurting our business.