Calculating A/B test sample size and validating the results

Building a culture of experimentation has the potential to simplify your customer experiences and raise your conversion rate...

However, it can also hurt you if you cannot reach statistically significant results.

For example, you need an adequate sample size to run a test. You then need to run the test long enough to reach statistically significant results.

When running experiments and A/B tests, it is better to end a test only once the variations have reached significance. If some variations have not reached significance, decide whether you want to keep waiting until more visitors arrive and a larger sample is available.

You get there faster with our A/B test sample size calculator and Stats Engine.

In this article, you will learn how to estimate the duration of an experiment in advance, how to measure results with data, and how to calculate how much traffic you need for your conversion rate experiments.

Required sample size and time frame for A/B tests

To identify a clear winner among the variations in a test, you need to run the test with a minimum sample size, that is, a certain number of people. Once you have the results, check whether the difference is statistically significant, in other words whether you can reject the null hypothesis that there is no difference. If you want to test the headline of a landing page, for example, it can take a few weeks before results are available. Expect a similar time frame for your blog engine.

It all depends on your business, the sample size, the tool you use for A/B testing, and much more. If you have a small list, you need to include most of it in the A/B test to reach statistical significance.

How to calculate sample size

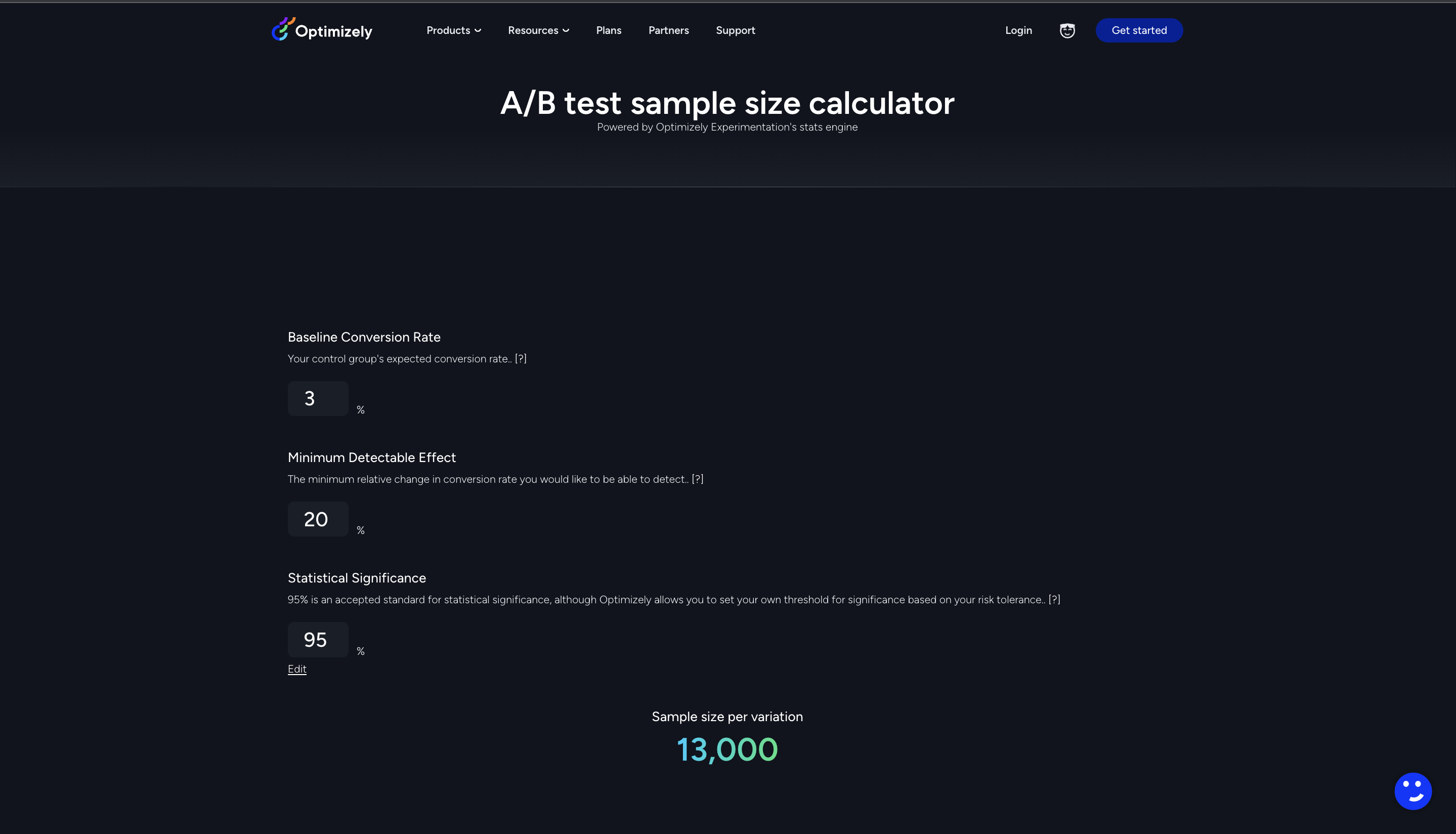

If you are wondering how to calculate sample size, the best approach is to use metrics such as the baseline conversion rate (the expected conversion rate of your control group) and the minimum detectable effect (MDE). Together they determine the sample size your original and your variation each need to meet your statistical goals.
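To make the relationship between baseline conversion rate, MDE, and sample size concrete, here is a minimal sketch. It uses the classic fixed-horizon formula for a two-sided test of two proportions, which is an assumption made for illustration and not the formula behind Optimizely's calculator. The function name, the defaults of 5% significance and 80% power, and the choice to treat the MDE as a relative lift are likewise hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_mde, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation (fixed-horizon, two-sided z-test).

    baseline_rate: expected conversion rate of the control, e.g. 0.10
    relative_mde:  smallest relative lift worth detecting, e.g. 0.20 for +20%
    alpha, power:  illustrative defaults, not values taken from the article
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if relative_mde <= 0:
        raise ValueError("relative_mde must be positive")

    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # conversion rate at the MDE
    if p2 >= 1:
        raise ValueError("baseline_rate * (1 + relative_mde) must stay below 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance_sum / (p2 - p1) ** 2
    return math.ceil(n)


# Example: 10% baseline, detect a 20% relative lift (10% -> 12%)
print(sample_size_per_variation(0.10, 0.20))
```

The sketch shows the two levers the text describes: a lower baseline rate or a smaller MDE both push the required sample size up sharply, because the difference between the two rates appears squared in the denominator.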

The values you enter into the A/B test sample size calculator are unique to each experiment and each goal. Over time, more visitors will arrive, encounter your variations, and convert. You will then see statistical significance increase and get an accurate estimate of the test duration.

The two calculations below help you convert the sample size into the estimated number of days you need to run an experiment:

#Calculation 1

Total number of visitors needed = sample size per variation × number of variations in your experiment

#Calculation 2

Estimated number of days to run the experiment = total number of visitors needed ÷ average number of visitors per day
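The two calculations can be chained in a few lines. The following is a minimal sketch; the function and parameter names are hypothetical, the input numbers are made up for illustration, and the sample size is assumed to be a per-variation figure. The result is rounded up to whole days, since a test cannot end partway through a day's traffic.

```python
import math


def estimate_test_duration(sample_size_per_variation, num_variations, avg_daily_visitors):
    """Return (total visitors needed, estimated days), following Calculations 1 and 2."""
    if avg_daily_visitors <= 0:
        raise ValueError("avg_daily_visitors must be positive")

    # Calculation 1: total visitors across all variations (original included)
    total_visitors = sample_size_per_variation * num_variations

    # Calculation 2: days needed at the average daily traffic, rounded up
    days = math.ceil(total_visitors / avg_daily_visitors)
    return total_visitors, days


# Illustrative numbers: 10,000 visitors per variation, 2 variations, 1,500 visitors/day
total, days = estimate_test_duration(10_000, 2, 1_500)
print(total, days)  # 20000 14
```

Only visitors who actually enter the experiment count toward the daily average, so a test that is allocated a fraction of site traffic will take proportionally longer.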

The result you want is not far off. Optimizely's sample size calculator delivers accurate results in seconds. Use it, and it shows you your required sample size.

And the best part?

You do not have to rely solely on the sample size calculation to prove the validity of your results. Use it for the planning phase. For the rest, we have Stats Engine.

What is Stats Engine?

If you are wondering what makes a good test, speculation is not the answer. If you rely on guesswork, your error rate can rise to over 30%.

Speed and scale benefit your digital experiences only when decisions are data-driven and based on accurate results. This is where a stats engine can help. A sequential testing approach removes the problems that come with guessing.

It measures the standard deviation in your process and helps you change your business in a data-driven way, so that you can make decisions faster and build a culture of experimentation. Here are further benefits:

You can monitor results in real time to make data-driven decisions quickly without compromising the integrity of the data.

The statistical power of a sequential test grows naturally as the test progresses, which removes the need to hypothesize or arbitrarily guess effect sizes up front.

The test adapts automatically to the true effect size and can be stopped early when the effect is larger than expected, so that on average you reach significance faster.

You can clearly see the statistical likelihood that the improvement is due to the changes you made and not to chance. Choosing the right significance level therefore matters, because it increases confidence in your A/B testing methods. For an experiment to reach significance, the confidence interval for the improvement must move away from zero, meaning it no longer contains zero.
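The idea that a confidence interval must exclude zero can be illustrated with a short sketch. It computes a standard fixed-horizon (Wald) interval for the absolute difference in conversion rates, which is an assumption chosen for simplicity: Stats Engine uses sequential methods, and this is not its calculation. All function names and input numbers are hypothetical.

```python
import math
from statistics import NormalDist


def difference_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald confidence interval for (rate of B - rate of A), as absolute difference."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Standard error of the difference between two independent proportions
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def excludes_zero(interval):
    """True when the whole interval lies above or below zero."""
    low, high = interval
    return low > 0 or high < 0


# Illustrative counts: 10,000 visitors per arm
clear_lift = difference_interval(500, 10_000, 600, 10_000)   # 5.0% vs 6.0%
tiny_lift = difference_interval(500, 10_000, 510, 10_000)    # 5.0% vs 5.1%
print(excludes_zero(clear_lift), excludes_zero(tiny_lift))   # True False
```

An interval like this is only valid when it is checked once, at the planned sample size. Checking it repeatedly while data arrives inflates the false positive rate, which is the gap a sequential approach is meant to close.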

See the full whitepaper to learn how experiments run with a stats engine.

And finally...

It is not easy to keep track of your experiments and check whether they have enough data to produce a conclusive result. Optimizely can solve this sizable problem.

Our Stats Engine achieves a statistical power of one, so your test results are always backed by data. Use it to adjust your digital marketing plans quickly and to focus on conversion rate optimization (CRO).

If you want to understand your A/B tests better and deliver modern e-commerce experiences, take a look at this big book of experimentation. It is a guide to A/B testing of sorts and contains real stories from companies that have benefited from building a culture of experimentation.

- Last modified: 27.04.2025 03:47:50