How to choose an experimentation platform

And connect today's tests to tomorrow's revenue

You're shopping for an experimentation platform. Feature lists are overwhelming. Every demo looks impressive.

Most teams focus on the wrong things. They compare features instead of asking whether their organization can handle what the platform will reveal.

This guide shows you what actually matters when choosing an experimentation platform—the questions that predict success, the pitfalls that guarantee failure, and the uncomfortable truths vendors won't tell you.

Most teams think they're buying an A/B testing tool...

What you're actually buying is your organization's decision-making infrastructure.

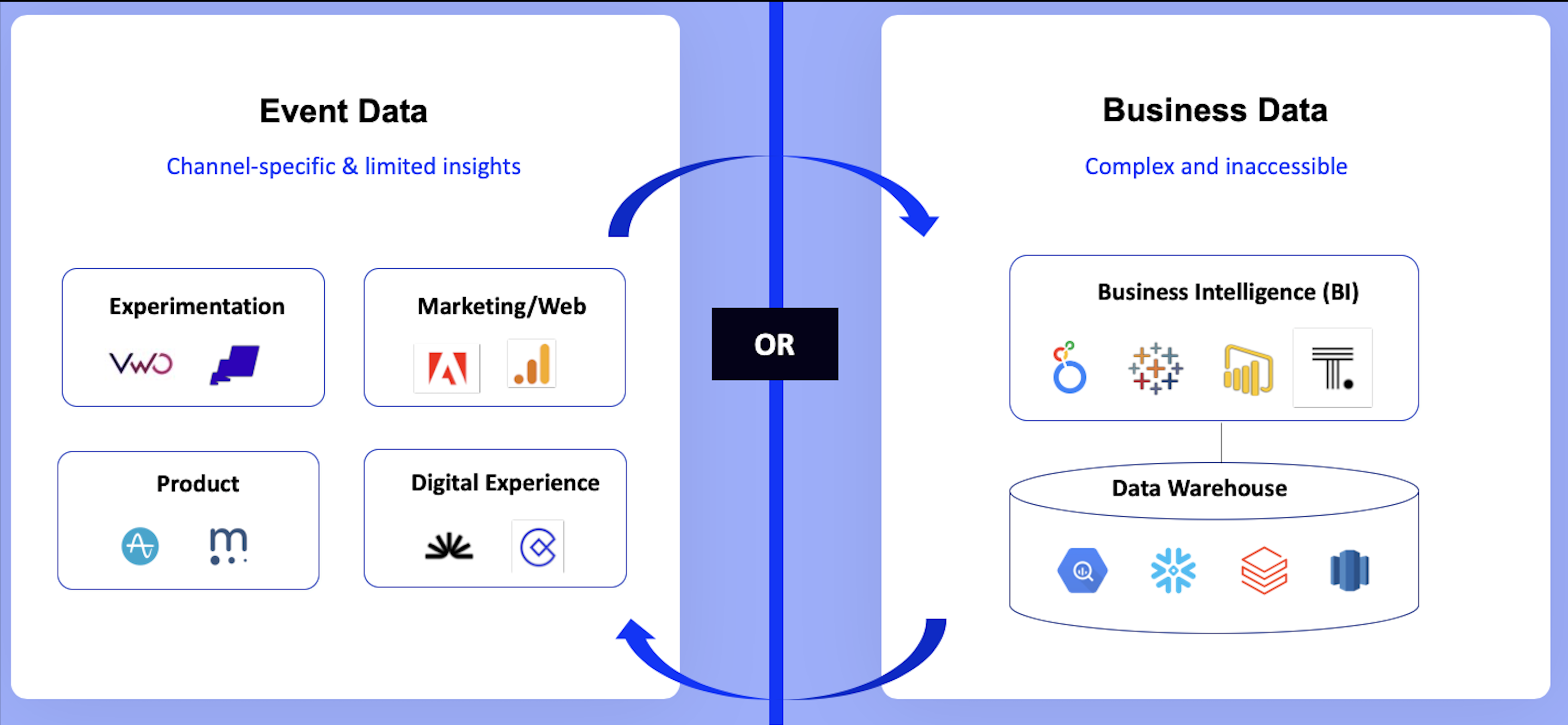

Old way: Disconnected tools for web experimentation, feature experimentation, and personalization = siloed insights that don't tie to revenue.

Image source: Optimizely

New way: One integrated platform with warehouse-native analytics, omnichannel testing, and AI = clear ROI from every experiment.

Your customers don't experience your website, mobile app, and email campaigns as separate experiences. They have one continuous experience with your brand.

Your experimentation platform should match that reality, not force you to stitch together insights from disconnected tools.

What to look for in an A/B testing and experimentation tool

1. Can you connect experiment data to business outcomes?

A/B testing today is multiplayer. You need to measure things that are relevant to revenue and can actually make a difference to your business.

For example, Marketing wants to know what worked. Product wants to know what behavior shifted. Leadership wants to know if it’s worth rolling out.

If they can’t see the thinking behind the test, they fill in the blanks themselves.

It means:

- Success gets defined by the wrong metric.

- Insights don’t travel.

The next test starts from zero, and you can never connect data with business outcomes.

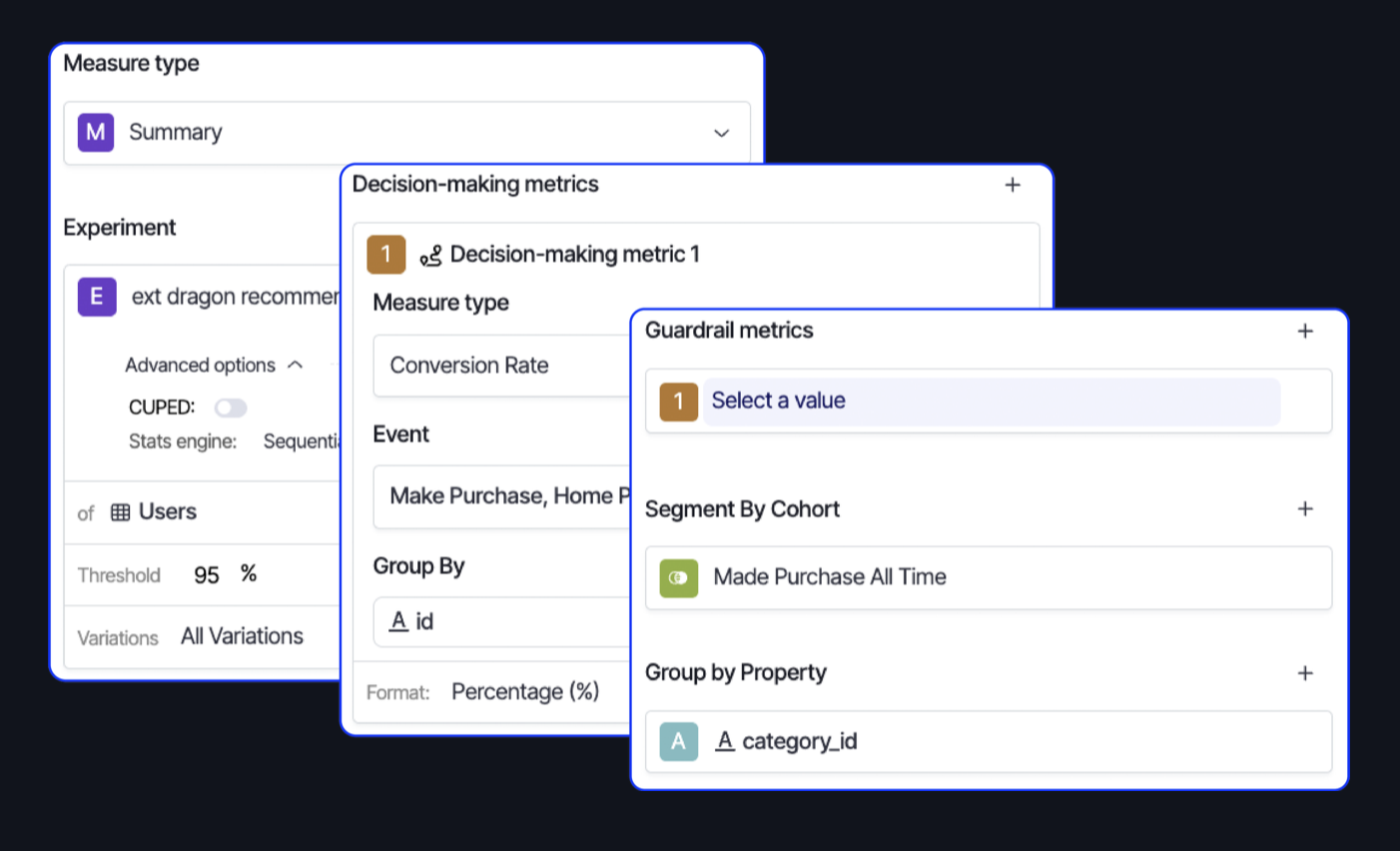

With warehouse-native solutions like Optimizely Analytics, you can track any metric in any experiment, regardless of how the metric was recorded.

Image source: Optimizely


Data stays in your warehouse (Snowflake, BigQuery, Databricks, Redshift), and results automatically include business context.

Ask yourself: Can I track any metric, such as revenue, retention, or churn, directly in my experiments without exporting data?

Any event that exists can now be tied to an experiment, even if it isn't tracked on an app or website. See how experimentation analytics work in your warehouse.
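
To make the idea concrete, here is a minimal sketch of what "tying any event to an experiment" amounts to: joining experiment exposures to business events on a shared user ID. The rows, field names, and the `revenue_per_user` helper are illustrative assumptions, not Optimizely's schema or API. In practice this join would run as a query inside your warehouse rather than in application code.

```python
from collections import defaultdict

# Hypothetical rows, as they might come back from two warehouse tables.
# Field names are illustrative assumptions, not a real schema.
exposures = [
    {"user_id": "u1", "variation": "control"},
    {"user_id": "u2", "variation": "treatment"},
    {"user_id": "u3", "variation": "treatment"},
]
orders = [
    {"user_id": "u1", "revenue": 20.0},
    {"user_id": "u2", "revenue": 35.0},
    {"user_id": "u2", "revenue": 15.0},
    # u3 never ordered; u4 ordered but was never exposed to the experiment.
    {"user_id": "u4", "revenue": 99.0},
]


def revenue_per_user(exposures, orders):
    """Average revenue per exposed user, grouped by variation."""
    variation_of = {row["user_id"]: row["variation"] for row in exposures}
    users = defaultdict(int)
    revenue = defaultdict(float)
    for variation in variation_of.values():
        users[variation] += 1
    for order in orders:
        variation = variation_of.get(order["user_id"])
        if variation is not None:  # skip users outside the experiment
            revenue[variation] += order["revenue"]
    # Users with no orders still count in the denominator.
    return {v: revenue[v] / users[v] for v in users}


result = revenue_per_user(exposures, orders)
print(result)  # {'control': 20.0, 'treatment': 25.0}
```

Note that the order events here never touched a website or app tracker. They only need a user ID that matches the exposure record.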

See it work:

27% improvement in experimentation program health in a single quarter

Cox Automotive's team was spending weeks analyzing experiments across 10+ brands like Kelley Blue Book and Autotrader. After moving to Optimizely, their experimentation program health score improved by 27% in a single quarter.

Sabrina Ho

Sr. Director of Product Analytics, Cox Automotive

Also, here's an honest comparison to help you choose the best warehouse-native experimentation platform for your team - even if that's not us.

2. Can you prove your program’s ROI, not just celebrate A/B test wins?

Engagement metrics are leading indicators that may or may not predict business value. Higher click-through rates don't guarantee increased revenue.

Many testing tools are limited to events that happen during the user session. However, business metrics like customer lifetime value, subscription renewals, and support costs materialize days, weeks, or months later.

Look for platforms that can track any business metric, not just web interactions.

The ability to measure revenue, retention, customer satisfaction, and other business outcomes directly within your experiments is crucial for proving ROI.

Ask yourself: Can this platform measure outcomes weeks or months after the initial interaction?

For example, Optimizely automatically includes Days Run, Total Visitors, and Page Targeted alongside performance metrics.

Image source: Optimizely

See it work:

80% decrease in return rates

Brooks Running customers were ordering multiple sizes of the same shoe model, knowing one would be returned. By combining personalized sizing recommendations with their business data, Brooks achieved an 80% decrease in return rates.

3. Can you scale your experimentation program across teams while maintaining statistical rigor?

As programs grow, different teams need different capabilities. Engineers need feature flags and SDKs. Marketers need visual editors. Data scientists need advanced statistics. Most platforms force compromise.

Ask yourself: Can all my teams (engineering, marketing, product, and others) run experiments independently without breaking statistical validity?

What you need for scale:

| Capabilities | Why? |
| --- | --- |
| Feature flags | For progressive rollouts and instant rollbacks |
| Visual editor | With a WYSIWYG interface for no-code creation |
| CUPED | To enhance the sensitivity of A/B tests by reducing variance, so results reach statistical significance faster |
| Sequential engine | To continuously monitor experiment results without inflating the false positive rate |
| Bayesian method | To power probability-based decision making |
| Fixed Horizon (frequentist) | For sample size estimation |
| Omnichannel capabilities | For consistent cross-platform experiences |
| No-latency SDKs | For performance-critical applications |
| Edge delivery | For flicker-free test delivery and faster page loads |
| Stats accelerator | To target and test user segments |
| Global holdouts | To measure and report on the cumulative impact of your testing program |
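
To see why CUPED in the table above speeds up tests, here is a minimal sketch of the textbook adjustment, assuming you have a pre-experiment value of the same metric for each user. The `cuped_adjust` helper and the sample numbers are hypothetical, and this is not a description of any vendor's implementation. In a real experiment, theta is typically estimated on the pooled data from all variations and then applied to each one.

```python
from statistics import fmean, pvariance


def cuped_adjust(metric, pre_metric):
    """Return CUPED-adjusted values: y - theta * (x - mean(x)).

    metric:     in-experiment values (y), one per user
    pre_metric: pre-experiment values (x) for the same users, same order
    """
    mean_x = fmean(pre_metric)
    mean_y = fmean(metric)
    var_x = pvariance(pre_metric)
    if var_x == 0:
        return list(metric)  # the covariate carries no information
    cov_xy = fmean([(x - mean_x) * (y - mean_y) for x, y in zip(pre_metric, metric)])
    theta = cov_xy / var_x
    return [y - theta * (x - mean_x) for x, y in zip(pre_metric, metric)]


# Hypothetical per-user spend before and during the experiment.
pre_metric = [10.0, 20.0, 30.0, 40.0]
metric = [12.0, 19.0, 33.0, 41.0]
adjusted = cuped_adjust(metric, pre_metric)

# The mean is unchanged, but the variance shrinks when x predicts y.
print(fmean(metric), fmean(adjusted))
print(pvariance(metric), pvariance(adjusted))
```

Lower variance for the same mean is what lets a test reach significance with fewer visitors.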

See it work:

40x faster to launch A/B tests

quip wanted to empower all teams to run tests independently without relying on the digital team. Now the team launches A/B tests 40x faster with Optimizely Web than with Feature Experimentation alone.

quip continues to focus on improving customer LTV whilst launching new and innovative products and expanding its horizons in the oral healthcare space.

Timothy P

Director of Digital Product, quip

4. Can you improve your team’s collaboration and eliminate workflow challenges?

There's often no structured place where everyone involved in the experimentation process can contribute and collaborate.

Think about how much time your team spends:

- Looking for that one great idea someone shared in last week's meeting

- Getting everyone together for brainstorming sessions across multiple time zones

- Following up on action items that got lost in email threads

- Waiting for feedback from stakeholders who couldn't make the meeting

Ask yourself: Does the platform provide structured workflows and visibility that eliminate bottlenecks in the experimentation process?

Pick a tool whose collaboration capabilities provide:

- Structured idea capture through submission forms that capture all important details upfront, replacing ideas lost in emails and Slack

- Automated workflows that notify the right people and route approvals, eliminating the need to chase stakeholders

- Centralized documentation where everything from ideas to results lives in one searchable place

- Kanban boards showing exactly which experiment is at which stage in development

Image source: Optimizely

Here's how you and your team can get more out of your day with a purpose-built collaboration tool.

See it work:

Chewy's experimentation team historically lacked visibility into their processes, limiting accountability and making it difficult to identify bottlenecks. Optimizely's collaboration solution helped them identify the workflow phase where experiments were slowing down and provided the insights needed to improve experiment velocity.

Dan Waldman

Senior Technical Product Manager, Chewy

5. Can you personalize experiences at scale without developer bottlenecks?

Serving multiple audiences on one platform traditionally means building separate experiences for each segment. It's a resource nightmare that doesn't scale.

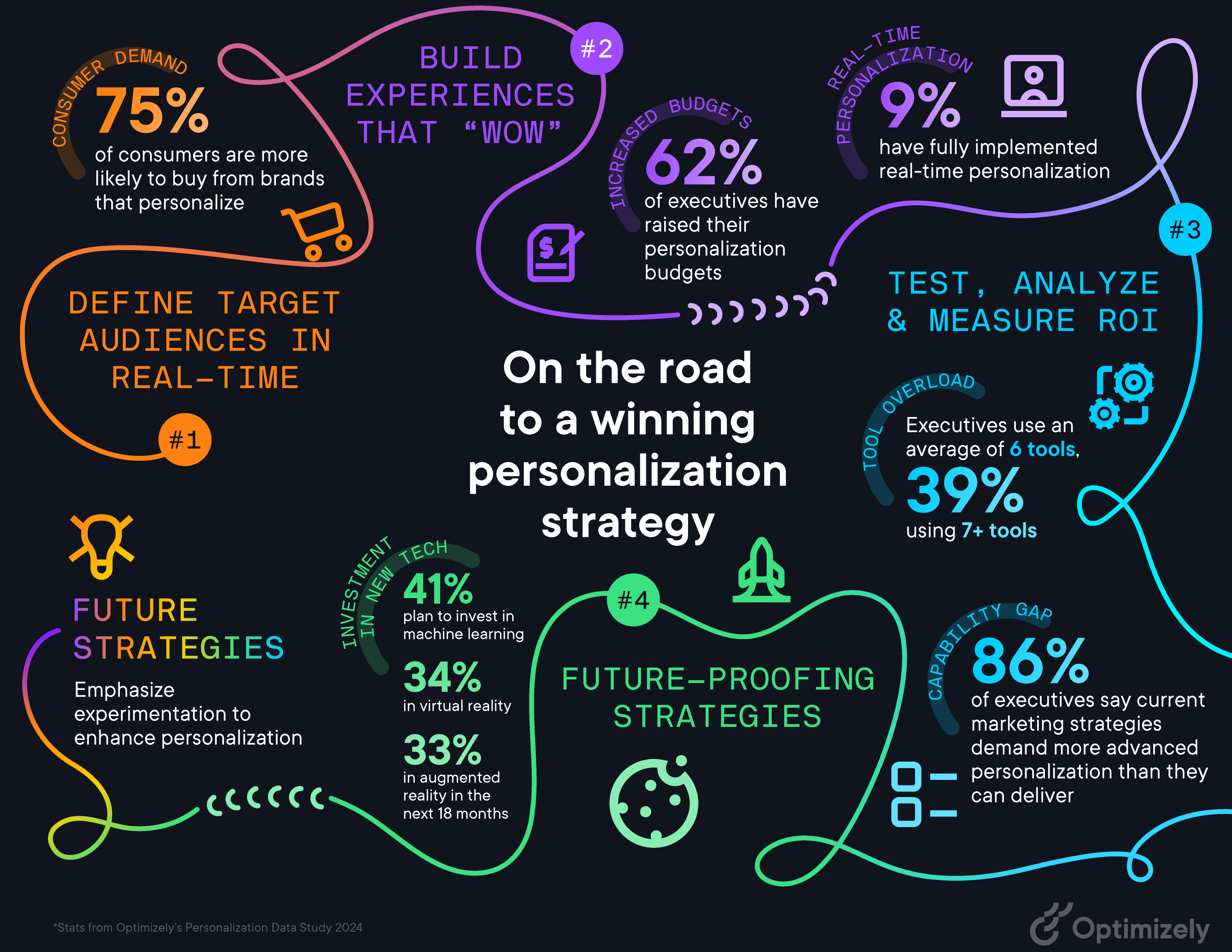

A solid personalization strategy customizes customer experiences in real-time based on user signals and behavior. Research from 127,000 experiments shows personalization generates 41% higher impact compared to general experiences.

Ask yourself: Can this tool help you personalize at scale?

Look for a tool that helps you identify opportunities, so you can:

- Segment users based on behavior patterns, purchase history, and engagement levels

- Map experiences that track customers through activation, nurturing, and reactivation

- Build personalized journeys with automated triggers and content delivery rules

- Create and deliver tailored messaging and experiences

Here's what you need to consider when you start to evaluate the countless platforms out there. Check out the free personalization buyer's guide PDF.

See it work:

Here's how Calendly personalizes experiences for 20 million users.

6. Can you enable self-service analytics without SQL dependency?

Despite heavy warehouse investments, teams wait weeks for basic reports. Analysis requires SQL or data modeling skills. As a result:

- Product and marketing users can't easily pull answers from the warehouse

- Analysts get buried in one-off requests, complex and simple alike, that spawn endless follow-ups

True self-service means you can analyze trustworthy results on all your data.

AI in analytics also helps by removing the technical barriers that keep business users dependent on analysts, freeing your analytics team to focus on strategic work instead of routine report requests.

Ask yourself: Can non-technical team members analyze experiments and pull insights independently without SQL knowledge?

See it work:

Chewy now defines metrics in its warehouse and brings its team closer to the data by creating a single source of truth.

Chewy

Lastly, every vendor will pitch you AI. Here's how to cut through the hype and understand what actually matters for your team.

7. AI: What’s real vs. marketing hype

We understand that AI-washing is a real concern. It's not quite as simple as switching on a new tool and watching productivity soar. Effective AI requires thoughtful integration into your existing workflows.

What's still marketing fluff:

- AI that claims to run experiments entirely for you

- "Smart" automation you can't validate

- Features requiring extensive new tracking

- AI replacing human expertise

So, don't start with AI. Start with your customers. Remove the challenges in their journey.

Here's how we're removing friction points in your existing workflow:

- Experiment ideation agent: Analyzes your website data, heatmaps, and user behavior to generate high-impact test ideas in minutes. No more blank whiteboard sessions.

- Experiment planning agent: Recommends metrics that will actually reach statistical significance based on your traffic, predicts realistic test duration, and creates complete test plans with proper guardrails.

- Variation development agent: Creates test variations with dev-ready code through a visual editor, eliminating the months-long wait for developer resources.

- Experiment summary agent: Translates complex test results into clear business insights in simple English.
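
If you want to sanity-check a planning recommendation by hand, the sketch below shows the standard fixed-horizon approximation for a conversion-rate test. It is not how any vendor's planning agent or sequential engine works. The function names, the 5% baseline, the 10% relative lift, and the traffic figure are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation for a two-sided test
    comparing two conversion rates (fixed-horizon, normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def estimated_days(n_per_variation, variations, daily_visitors):
    """Days needed if all daily traffic is split evenly across variations."""
    return math.ceil(n_per_variation * variations / daily_visitors)


# Hypothetical inputs: 5% baseline conversion, 10% relative lift to detect,
# 10,000 eligible visitors per day split across two variations.
n = sample_size_per_variation(baseline_rate=0.05, relative_lift=0.10)
days = estimated_days(n, variations=2, daily_visitors=10_000)
print(n, days)
```

If a tool promises a result in far less time than a calculation like this suggests, ask which method it uses (sequential testing and variance reduction can legitimately shorten tests) and whether you can inspect the assumptions.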

Finally...

When evaluating AI, ask vendors:

- Show me exactly how AI saves time in my current workflow

- What decisions does it help make faster, with specific examples?

- Can I validate and understand every AI recommendation?

- Does it work with my existing data or require new tracking?

What platform capabilities should you evaluate beyond basic A/B testing?

Four criteria every buyer must consider:

1. Ease of use


- Can non-technical team members set up experiments without assistance from developers?

- How long does it take to train someone on the platform?

This is often the make-or-break factor for organizational adoption.

2. Architecture & stability

- How does the platform handle high-traffic loads?

- What's the performance impact on page load times?

- Do you offer no-latency SDKs? How do you prevent flicker?

Performance issues can kill user experience and skew test results.

3. Statistical rigor

Beyond basic A/B testing, what advanced methods does the platform support?

Other test types that matter:

- Multivariate testing (MVT): Test multiple element variations simultaneously on complex pages

- Split URL testing: Compare completely different page designs, such as radical redesigns

- Server-side testing: Render test variations directly on the web server, before the page is delivered to the client

- 1:1 Personalization: Customize content for individual prospects in different user segments

- Real-time segmented testing: Target content by location, behavior, or time of day
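
For server-side testing in particular, the core mechanic is deciding a user's variation on the server before any markup is sent. A common approach is deterministic hashing, sketched below. The `assign_variation` function and the experiment key are hypothetical and do not represent any vendor's SDK.

```python
import hashlib


def assign_variation(user_id, experiment_key, variations=("control", "treatment")):
    """Deterministically map a user to a variation for one experiment.

    Hashing the experiment key together with the user ID keeps assignments
    stable across requests and independent across experiments.
    """
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % len(variations)
    return variations[bucket]


# The same user always sees the same variation, with no stored state.
first = assign_variation("user-123", "checkout-redesign")
second = assign_variation("user-123", "checkout-redesign")
print(first == second)  # True
```

Because the decision happens before rendering, there is nothing to swap in the browser, which is why server-side tests avoid flicker.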

4. Integration with your technology stack

Evaluate how well the platform works with the tools you already use. Look for these integration capabilities:

- Does it work natively with Snowflake, Databricks, BigQuery, and Redshift?

- Can you connect experiment results to customer lifetime value and retention data automatically?

- How does this integrate with your current analytics, CRM, and marketing automation tools?

- Does it support your tech stack (CDPs, BI tools, marketing platforms)?

Avoid platforms that:

- Require data exports: You'll create new silos instead of eliminating them

- Call it "self-service," but need SQL: Business users still wait for analysts

- Offer AI without workflow value: Ask for specific time saved, not demos

- Can't auto-connect tests to revenue: You'll celebrate clicks while revenue stays flat

Implementation timeline: What to expect

Start with the foundation (Days 0-30). Integrate warehouse infrastructure and connect tracking to business outcomes. Then train the core team and launch the first revenue-tied experiments.

Next comes scale (Days 30-60). Deploy self-service analytics across product and marketing. Then implement advanced stats, personalization, and cross-team access.

Last comes AI acceleration (Days 60-90). Enable AI-assisted creation and natural language querying. Then embed experimentation in your decision-making culture and measure ROI.

Total cost considerations: Platform fees are just the start. Expect 2-3x the platform fees for integration and setup in year one, plus training, maintenance, and opportunity costs. See the Total Economic Impact™ of Optimizely One by Forrester Consulting for the full picture.
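
As a rough budgeting aid, the arithmetic can be sketched as below. It assumes the "2-3x" figure is a multiple of the platform fee and is added on top of it, which is one reading of the guidance above. The function name and the $100,000 fee are hypothetical.

```python
def year_one_cost_range(platform_fee, low_multiplier=2.0, high_multiplier=3.0, other_costs=0.0):
    """Return a (low, high) year-one estimate.

    Assumes integration and setup cost 2-3x the platform fee and are added
    on top of it. other_costs covers training, maintenance, and similar.
    """
    low = platform_fee + platform_fee * low_multiplier + other_costs
    high = platform_fee + platform_fee * high_multiplier + other_costs
    return low, high


# Hypothetical platform fee of $100,000 with no other costs entered yet.
low, high = year_one_cost_range(100_000)
print(low, high)  # 300000.0 400000.0
```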

Bringing it all together

Your experimentation program is like an engine where all parts work together. Here's your checklist:

- Warehouse-native analytics: Track any metric in any experiment, regardless of how the metric was recorded.

- ROI: Measure what is relevant and can make a difference to your business.

- Scalability: Scale across teams while maintaining statistical rigor.

- Collaboration: Can you bring your team closer to the data?

- Personalization: Create meaningful 1:1 experiences your customers will love

- Self-service analytics: Analyze trustworthy results on all of your data from different channels

- AI: Get your data from a single source of truth. If your data is broken, AI just helps you be wrong faster.

- Implementation: Check the total costs of buying and running tests on an experimentation platform.

The difference isn't in the features. It's whether your experimentation platform is capable of revealing what matters to your business.

Ready to connect experimentation results to business outcomes?

Forget impressive demos. Check:

- Can your teams actually use what you're buying?

- Will your warehouse support what you're implementing?

- Are you solving real problems or buying future headaches?

Some teams need enterprise-grade scalability. Others need simplicity and speed. Many need to fix their data foundation before any platform will work.

The best platform is the one that works with the organization you have, not the one you hope to become.

Want to dive deeper?

- Free PDF: Web experimentation buyer's guide

- Free PDF: Feature experimentation buyer's guide

- Talk to Optimizely