Leveraging Interaction Effects in A/B and Multivariate Testing

At worst, interaction effects can make it difficult or impossible to interpret the results of concurrent tests. But interaction effects don’t have to be scary!

In this post for experimenters and analysts, I’ll examine:

- Interaction effects and how they work in the context of digital experimentation

- How multivariate tests can be used to find interaction effects and quantify their impact on experiment metrics

- How positive interaction effects can boost experiment performance

Multivariate tests are only available for Web Experimentation at this time.

What are interaction effects?

Before we get ahead of ourselves, let’s establish a shared definition. In statistics, an interaction is defined as “…a situation in which the simultaneous influence of two variables on a third is not additive.” [1]

In the context of digital experimentation, we can see interaction effects when multiple experiments target the same users. The combination of experiment variations may alter their behaviors in surprising ways that we couldn’t anticipate just from looking at results for each experiment independently.

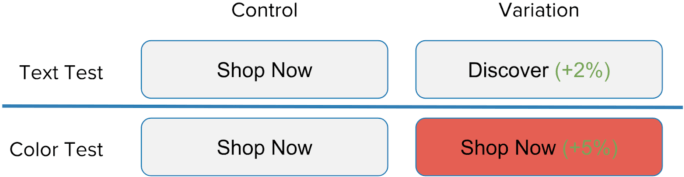

Consider an example of two A/B tests on a single Shop Now button. One test modifies button text, and the other modifies button color. You configure these tests to be mutually exclusive, which means that users who participate in one test cannot be targeted by the other.

A series of two mutually exclusive A/B tests on the Shop Now button.

After both tests have reached significance, you find that users who saw the “Discover” text variation clicked the button 2% more frequently, and users who saw the red color variation clicked the button 5% more frequently. You might assume that if you deploy the winning variations for both experiments, you will see an overall improvement of 7%. Because you are a careful experimenter, you decide to validate your assumption by testing the winning combination directly:

To your surprise, you find that visitors who are exposed to the combination of winning variations click the button 10% more frequently. Where is the “extra” 3% lift coming from? The answer is that you are seeing an interaction effect: the combination of text and color performs better than the sum of the two individual lifts would suggest.

Congratulations, you’ve just discovered a positive interaction effect!
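The arithmetic behind that “extra” 3% can be written out as a tiny calculation. This is only a sketch: the function name `interaction_effect` is made up for illustration, and it treats lifts as simply additive, the same simplification the example uses.

```python
def interaction_effect(lift_a: float, lift_b: float, lift_combined: float) -> float:
    """Return the part of the combined lift that adding the individual lifts does not explain."""
    expected_if_additive = lift_a + lift_b
    return lift_combined - expected_if_additive


# Lifts from the Shop Now example, in percent.
text_lift = 2.0       # "Discover" text variation
color_lift = 5.0      # red color variation
combined_lift = 10.0  # both winning variations shown together

extra = interaction_effect(text_lift, color_lift, combined_lift)
print(f"Expected if additive: {text_lift + color_lift:.0f}%")
print(f"Interaction effect: {extra:+.0f}%")
```

A result above zero points to a positive interaction, a result below zero to a negative one, and a result near zero means the two changes behave roughly independently.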

Multivariate Testing

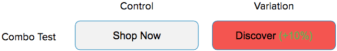

These same types of effects can be observed when running multivariate tests (MVT), where an array of section variations is blended together to produce combinations (check out our handy article on experiment types for more details). Here’s a quick primer on MVT terminology:

Those of you who are familiar with MVT were probably looking at the above example and thinking “hey, why didn’t we just run an MVT in the first place??” Well, you’re absolutely right! An MVT would be an ideal test setup to determine the best combination of text and color for our Shop Now button.

Running the above test as an MVT would have a few advantages:

- You can skip the initial run of two independent tests and go straight to testing combinations

- You can be sure that you find the best combination of variables, even when those variables tested independently don’t perform as well as they do together

Another way of stating advantage #2 is that your MVT results will include the impacts of interaction effects right out of the gate. In fact, the ability to detect interaction effects is the primary reason why savvy experimenters choose to run MVT as opposed to multiple overlapping A/B tests!
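To make “testing combinations” concrete, here is a minimal sketch of how the combinations of a full factorial MVT can be enumerated. The section names and the control color (`"blue"`) are assumptions for illustration; this is not how Optimizely builds combinations internally.

```python
from itertools import product

# Hypothetical sections for the Shop Now button; the first variation
# in each list is treated as that section's control.
sections = {
    "text": ["Shop Now", "Discover"],
    "color": ["blue", "red"],
}


def full_factorial(sections: dict[str, list[str]]) -> list[dict[str, str]]:
    """Build every combination by picking one variation from each section."""
    names = list(sections)
    return [dict(zip(names, choice)) for choice in product(*sections.values())]


combinations = full_factorial(sections)
for number, combo in enumerate(combinations, start=1):
    print(number, combo)
```

With two sections of two variations each, this produces 2 × 2 = 4 combinations, including the all-control combination and the one that pairs both changes.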

Finding Interaction Effects with Multivariate Testing

Ok, so let’s say that you’re ready to go hunting for positive interaction effects in your MVTs. What next?

Before you launch your test, consider whether the MVT’s sections are likely to have an impact on each other. In other words, you’re more likely to see interaction effects when sections are tightly coupled (as in the button example above). While you could certainly use an MVT to determine the optimal combination of two unrelated variables (homepage hero image and number of items displayed on the homepage, for example), interaction effects are much less likely to occur there.

After your test has been running for a while, you can dive into your results to look for interaction effects.

Introducing Section Rollups

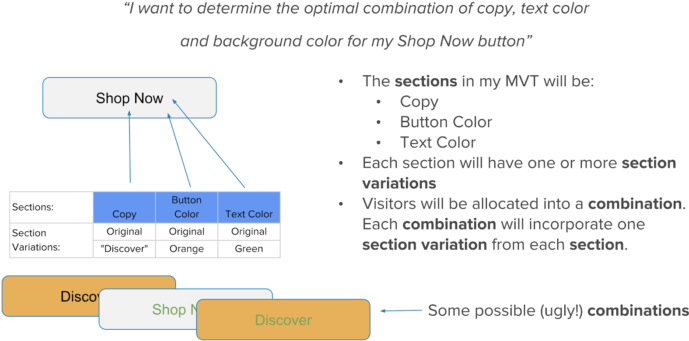

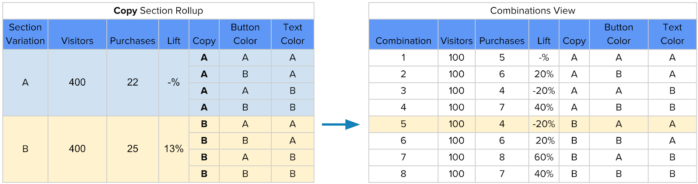

Section rollups is a new analysis mode which focuses on the contribution of specific section variations to the overall performance of the MVT. To produce a section rollup, metrics for all combinations which incorporate a specific section variation are aggregated together to produce a new view of results:

You can use section rollups as a heuristic for the presence of interaction effects in your MVT results. When looking at a section rollup, you’ll ask the question “Is the performance of this section variation better than what I would expect if I tested it in isolation?”

The good news is that if you’re doing a full factorial MVT (one where every possible combination is tested), you actually did test each section variation “in isolation” (i.e. in combination with the control variations for all other sections). In the above example, combination 5 blended variation B for the Copy section with the control variations for Button Color and Text Copy sections. The lift you measured in this combination represents the impact of displaying the section variation without making any other changes to the experience.

If the lift measured in the section rollup is much higher than the lift measured in the “isolation” combination, this means that the performance of other combinations is being boosted by positive interaction effects!

The Copy section rollup shows 13% lift for variation B. This is a lot higher than the -20% lift for combination 5. This means that combinations 6, 7 and 8 (the other combinations which use the B variation for the Copy section) are boosted by positive interaction effects!
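The comparison between a section rollup and the “isolation” combination can be sketched in a few lines. The visitor and conversion counts below are made up, chosen only so that the two lifts resemble the ones quoted above; they are not the data behind the screenshots. The sketch also assumes that the rollup baseline is the pooled control variation of the same section, which may differ from how the results page computes it.

```python
# Hypothetical results: (copy, button_color, text_color) -> (visitors, conversions).
# "A" is each section's control variation.
results = {
    ("A", "A", "A"): (1000, 100),
    ("A", "A", "B"): (1000, 100),
    ("A", "B", "A"): (1000, 100),
    ("A", "B", "B"): (1000, 100),
    ("B", "A", "A"): (1000, 80),   # copy B with every other section at control
    ("B", "A", "B"): (1000, 120),
    ("B", "B", "A"): (1000, 120),
    ("B", "B", "B"): (1000, 132),
}


def rollup_rate(results, section_index: int, variation: str) -> float:
    """Pool every combination that uses `variation` in the given section."""
    rows = [vc for combo, vc in results.items() if combo[section_index] == variation]
    visitors = sum(v for v, _ in rows)
    conversions = sum(c for _, c in rows)
    return conversions / visitors


def lift(treatment_rate: float, baseline_rate: float) -> float:
    """Relative lift of the treatment rate over the baseline rate."""
    return treatment_rate / baseline_rate - 1


COPY = 0  # position of the Copy section in each combination tuple

rollup_lift = lift(rollup_rate(results, COPY, "B"), rollup_rate(results, COPY, "A"))

iso_visitors, iso_conversions = results[("B", "A", "A")]
ctl_visitors, ctl_conversions = results[("A", "A", "A")]
isolation_lift = lift(iso_conversions / iso_visitors, ctl_conversions / ctl_visitors)

print(f"Rollup lift for copy B:    {rollup_lift:+.0%}")
print(f"Isolation lift for copy B: {isolation_lift:+.0%}")
```

When the rollup lift sits well above the isolation lift, as it does with these counts, the other combinations that use the variation are pulling the average up, which is the pattern the heuristic looks for.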

This heuristic technique isn’t going to reveal 100% of interaction effects, because certain patterns of effect distribution can be “cancelled out” in the section rollup view. Analysts who are looking for a more comprehensive way to detect and quantify interaction effects could consider using an ANOVA (analysis of variance) model. Optimizely’s results page doesn’t currently implement ANOVA, but if you’re interested in leveraging this technique you can consider exporting Optimizely event data and analyzing it using your stats package of choice.
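As a rough illustration of what an offline analysis might look like, the sketch below tests the interaction in a 2×2 design using only the Python standard library. Note that this is not ANOVA: it is a simpler difference-in-differences z-test on absolute conversion rates, swapped in here because it needs no external package. The function name and the counts are hypothetical, and a real analysis in a stats package would handle more sections and variations.

```python
from math import sqrt
from statistics import NormalDist


def interaction_z_test(cells):
    """Test the interaction in a 2x2 design with binary conversions.

    `cells` maps (factor_a, factor_b), each 0 or 1, to (visitors, conversions).
    The contrast asks whether the effect of factor A differs depending on
    the level of factor B, on the absolute conversion-rate scale.
    """
    rates = {key: conv / vis for key, (vis, conv) in cells.items()}
    # Variance of the contrast is the sum of the four binomial variances.
    variance = sum(rates[key] * (1 - rates[key]) / cells[key][0] for key in cells)
    contrast = (rates[(1, 1)] - rates[(0, 1)]) - (rates[(1, 0)] - rates[(0, 0)])
    z = contrast / sqrt(variance)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return contrast, z, p_value


# Made-up counts: factor A is button text, factor B is button color.
cells = {
    (0, 0): (10000, 1000),  # control text, control color
    (1, 0): (10000, 1020),  # new text, control color
    (0, 1): (10000, 1050),  # control text, new color
    (1, 1): (10000, 1100),  # new text, new color
}

contrast, z, p_value = interaction_z_test(cells)
print(f"interaction contrast: {contrast:+.4f}, z = {z:.2f}, p = {p_value:.2f}")
```

With these made-up counts the contrast is positive but the p-value comes out well above 0.05, a reminder that interactions are usually smaller than main effects and tend to need more traffic to detect.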

Dealing with Negative Interaction Effects

Up to this point, we’ve talked mostly about positive interaction effects, but interaction effects can also negatively impact combination performance. Going back to our “Shop Now” button, let’s say we now want to determine the optimal combination of button color and text color. Our MVT layout looks like this:

We don’t even need to launch the test to know that the combination with red text on a red button is going to perform poorly!

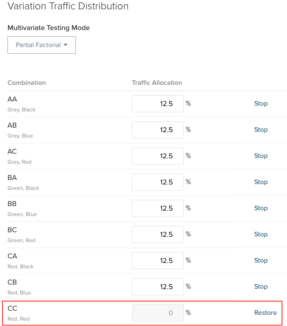

If you have reason to believe that a specific combination will suffer from negative interaction effects, you can prevent visitors from being exposed to that combination by using a partial factorial MVT.

We can easily stop all traffic to the red-on-red combination in a partial factorial MVT!
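Conceptually, a partial factorial design is the full set of combinations with some entries removed. The sketch below shows the idea; the variation names are hypothetical, and splitting traffic evenly across the remaining combinations is an assumption made for illustration rather than a description of how Optimizely allocates traffic.

```python
from itertools import product

# Hypothetical variations for the two sections.
button_colors = ["blue", "red"]
text_colors = ["white", "red"]


def partial_factorial(excluded: set[tuple[str, str]]) -> dict[tuple[str, str], float]:
    """Drop excluded combinations and split traffic evenly across the rest."""
    combos = [c for c in product(button_colors, text_colors) if c not in excluded]
    share = 1 / len(combos)
    return {combo: share for combo in combos}


# Exclude red text on a red button.
allocation = partial_factorial(excluded={("red", "red")})
for combo, share in allocation.items():
    print(combo, f"{share:.1%}")
```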

If you’re really concerned about interaction effects and want to prevent them altogether, another approach is to run multiple mutually exclusive A/B tests. Using this method, you can prevent users from being exposed to multiple tests which are likely to interact with each other, or you can even limit users to participating in one test at a time. This reduces the risk of bad interactions, but it can substantially reduce the statistical power of your tests, because each one competes with the others for traffic. You will also miss out on the opportunity to discover positive interaction effects. Learn more about how to run mutually exclusive tests with Optimizely.
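The traffic cost of mutual exclusion can be approximated with a back-of-the-envelope calculation. This sketch assumes total traffic and test duration are fixed, traffic is split evenly across the exclusive tests, and the standard error of a conversion rate shrinks with the square root of the sample size; the function name is made up for illustration.

```python
from math import sqrt


def standard_error_inflation(num_exclusive_tests: int) -> float:
    """Factor by which each test's standard error grows when traffic is
    split evenly across mutually exclusive tests, relative to one test
    receiving all of the traffic."""
    return sqrt(num_exclusive_tests)


for k in (1, 2, 4):
    print(f"{k} exclusive test(s): standard error x {standard_error_inflation(k):.2f}")
```

Under these assumptions, each test sees 1/k of the visitors, so running it to the same precision takes roughly k times as long.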

What it all means for experimentation programs

Whew! We’ve gone pretty deep into interaction effects and how they work in A/B and multivariate tests. But what does it all mean for your experimentation program? There are a few key points to remember:

- Interaction effects may be present whenever users are exposed to multiple variables, regardless of the test style.

- Planning around interaction effects (either by running MVT and disabling bad combinations, or by running mutually exclusive experiments) is a good idea!

- Discovering positive interaction effects (is it too corporate to call them “synergies”?) is a primary motivator for running MVT.

Do you have a story about surprising interactions you’ve discovered through experimentation? We’d love to hear from you!

1. Dodge, Y. (2003). The Oxford Dictionary of Statistical Terms. Oxford University Press. ISBN 0-19-920613-9.

- Last modified: 4/28/2025 2:49:50 PM