AI and feature experimentation: Maximizing value of AI solutions

Whether you're a product manager trying to implement AI without catastrophe or a tech leader wanting to innovate without burning your budget on failed experiments, you've probably realized that AI is both exciting and terrifying at the same time.

Sure, AI is powerful, but it's also unpredictable and occasionally unhinged.

And when your shiny new AI feature starts hallucinating facts or offering bizarre recommendations, your users notice and bounce faster than you can say "prompt engineering."

However, feature experimentation can help you balance AI's incredible potential against the very real risk of it going sideways.

Here's how.

Why AI needs feature experimentation (desperately)

The days of spending months on development cycles or releasing features and crossing your fingers may be behind us. However, AI is still not great at consistency, reliability, or knowing when it's about to embarrass your brand in front of millions of users.

This is exactly where feature experimentation comes in to save AI in product development.

The traditional feature delivery process has always been full of bottlenecks:

- Brainstorming test ideas (aka staring at analytics until inspiration strikes)

- Building variations (aka begging developers for code changes)

- Analyzing results (aka debating what the heck those squiggly graph lines mean)

Here's how AI is removing these roadblocks.

Image source: Optimizely

To get you started...

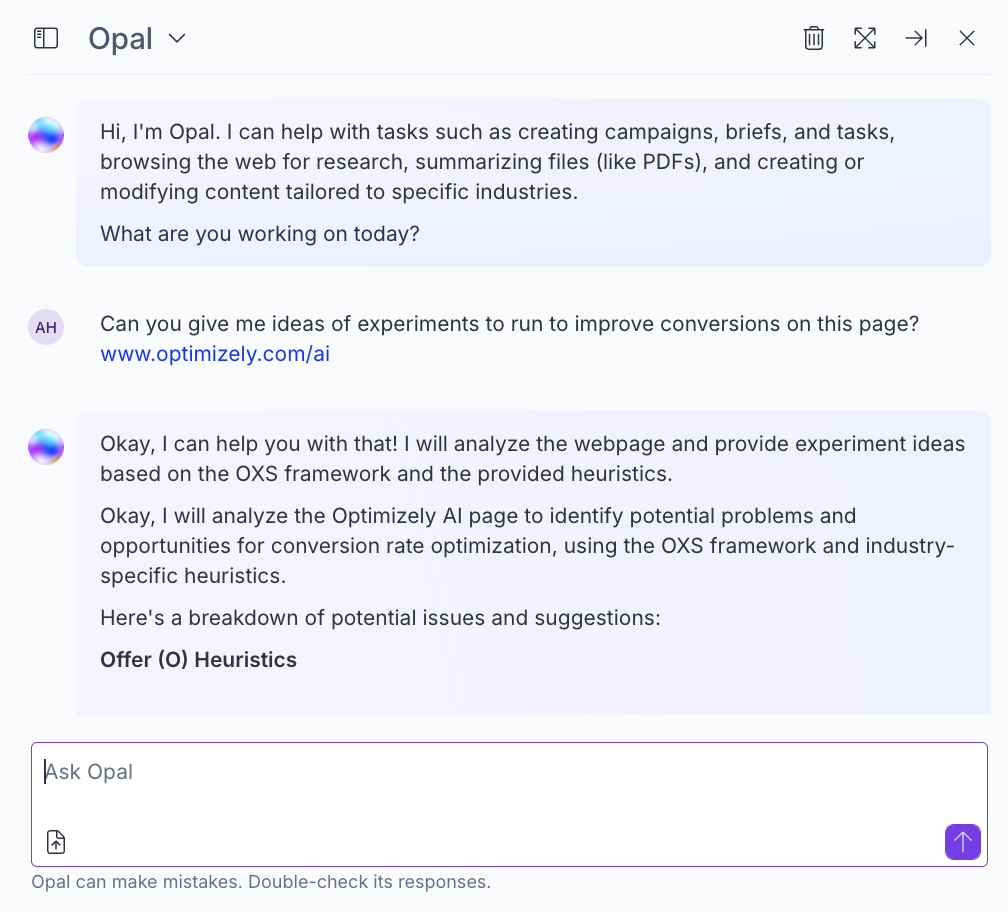

Optimizely Opal now serves as an experimentation co-pilot for your experimentation teams, dramatically accelerating test creation, implementation, and analysis.

Use cases:

- Create comprehensive test plans with hypotheses, metrics, and run-time estimates

- Summarize experiment results instantly

- Identify optimal audience segments for targeting

- Generate variations and variation descriptions

- Recommend next experiments based on current results

- Access Optimizely's experimentation best practices through Opal chat

Measurable impact:

- 5.72% of tests created with AI

- 66.55% of results summarized with AI

Image source: Optimizely

You can accelerate feature development cycles that would have otherwise been stalled or deprioritized due to lack of time or evidence, enabling teams to run more tests, learn faster, and focus their time on strategic iteration.

Optimizing generative AI algorithms

AI for the sake of AI means nothing without tangible outcomes, so when it comes to implementation, you have to get it right and still move fast. By leveraging feature experimentation, organizations can:

- Reduce time to value: By quickly testing different features and configurations against specific use cases, teams can identify winning variations that drive better user experiences, increase customer satisfaction, and improve business outcomes. This iterative process allows product teams to make data-driven decisions that continuously fine-tune their AI models, significantly reducing the time it takes to see tangible results.

- Reduce deployment costs: Cost efficiency is a crucial consideration for any enterprise, and traditional AI deployment methods often involve significant upfront investments or costly trial-and-error approaches. But feature experimentation allows organizations to identify the most effective configurations and variables without deploying untested algorithms at scale. Businesses can minimize development costs and optimize resource allocation by focusing resources on proven, high-value AI models.
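To make "identify winning variations" a little more concrete, here is a minimal sketch of one common way to compare two variations of an AI feature: a two-proportion z-test on conversion counts. It is an illustration under stated assumptions, not a description of how any particular experimentation platform computes results. The function name and the sample numbers are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare variation B against variation A.

    Returns (absolute lift, z statistic, two-sided p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variance in the data (all or no users converted)")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


if __name__ == "__main__":
    # Hypothetical numbers: 1,000 users per variation.
    lift, z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=130, n_b=1000)
    print(f"lift={lift:.3f}, z={z:.2f}, p={p:.4f}")
```

A small p-value suggests the difference between variations is unlikely to be noise. Real experimentation tools typically add safeguards this sketch leaves out, such as corrections for checking results repeatedly while a test is still running.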

De-risking AI investments

One of the primary concerns surrounding AI deployments is the potential for unforeseen risks. Feature experimentation acts as a crucial guardrail, providing enterprises with the control, governance, and measurement they need to mitigate these risks. By employing feature experimentation, organizations can:

- Test and optimize AI deployments: Instead of releasing AI features to the entire user base, organizations can roll them out to a subset of users. This controlled release allows for real-time monitoring and adjustment based on user feedback, ensuring optimal performance and minimizing the impact of any potential issues.

- Safely roll out and roll back capabilities: By conducting experiments within a controlled environment, organizations can safely roll out their chosen AI model, quantify the impact, and roll it back if necessary. This ensures that the deployment aligns with expectations while avoiding significant disruptions or potential negative impacts.

- Use data to quantify ROI: Feature experimentation enables teams to collect and analyze data during experiments, providing valuable insights into the impact on business outcomes. By measuring key metrics and comparing experimental results, organizations can gain a deep understanding of the value generated by their investment.
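A controlled release like the one described above is often built on deterministic bucketing: hash each user ID to a stable number between 0 and 100, then compare it to the current rollout percentage. The sketch below shows the idea under that assumption. The names `bucket` and `is_enabled` and the flag key `ai_recommendations` are hypothetical, not part of any SDK.

```python
import hashlib


def bucket(user_id: str, flag_key: str) -> float:
    """Map a user to a stable position in [0, 100) for one flag."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    # First 8 hex characters = 32 bits; dividing by 2**32 gives a value in [0, 1).
    return int(digest[:8], 16) / 2**32 * 100


def is_enabled(user_id: str, flag_key: str, rollout_percent: float) -> bool:
    """True if this user falls inside the current rollout percentage."""
    return bucket(user_id, flag_key) < rollout_percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for percent in (10, 50, 0):  # ramp up, then roll back to 0
        exposed = sum(is_enabled(u, "ai_recommendations", percent) for u in users)
        print(f"{percent}% rollout -> {exposed} of {len(users)} users exposed")
```

Because each user's bucket never changes, anyone exposed at 10% stays exposed at 50%, and setting the percentage back to 0 rolls the feature back for everyone without a redeploy.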

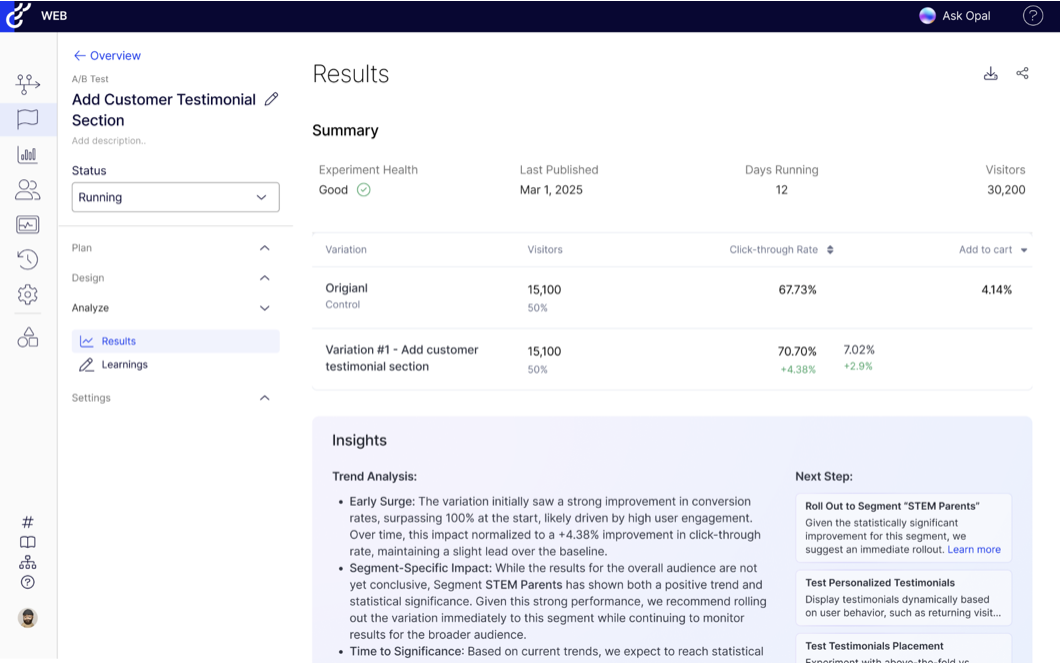

You can quickly understand the results of your tests and the "So what?"

Image source: Optimizely

Example use case

A fintech company can use AI experimentation capabilities to simulate thousands of transaction scenarios and detect UI glitches, crashes, or performance issues ahead of time. These are problems that would have been nearly impossible to discover manually.

AI safety and reality check

When your AI goes rogue, feature flags are your emergency brake. AI excels at finding creative ways to be inappropriate, and feature experimentation lets you fix problems before they become PR disasters.
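As a rough illustration of the "emergency brake" idea, the sketch below wraps an AI call in a flag check with a non-AI fallback. Everything here is an assumption made for the example: the `flags` dictionary stands in for whatever flag service you use, and `answer`, `ai_answers`, and the stand-in model functions are hypothetical names.

```python
from typing import Callable, Dict


def answer(
    question: str,
    flags: Dict[str, bool],
    ai_model: Callable[[str], str],
    fallback: Callable[[str], str],
) -> str:
    """Serve the AI answer only while the flag is on; otherwise use the fallback."""
    if not flags.get("ai_answers", False):  # a missing flag counts as off
        return fallback(question)
    try:
        return ai_model(question)
    except Exception:
        # If the model call fails, degrade to the fallback instead of erroring out.
        return fallback(question)


if __name__ == "__main__":
    flags = {"ai_answers": True}
    ai = lambda q: f"AI answer to: {q}"          # stand-in for a real model call
    canned = lambda q: "Please contact support."  # safe, non-AI response
    print(answer("How do I reset my password?", flags, ai, canned))
    flags["ai_answers"] = False  # pull the emergency brake
    print(answer("How do I reset my password?", flags, ai, canned))
```

The design choice worth noting is that the flag is checked on every request, so turning it off takes effect immediately rather than waiting for a new release.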

Here's how our own team uses feature flags.

Meanwhile, AI-washing is everywhere. Every product claims to be "AI-powered" even if it's just fancy if/then statements. The skepticism is valid, but there's an answer.

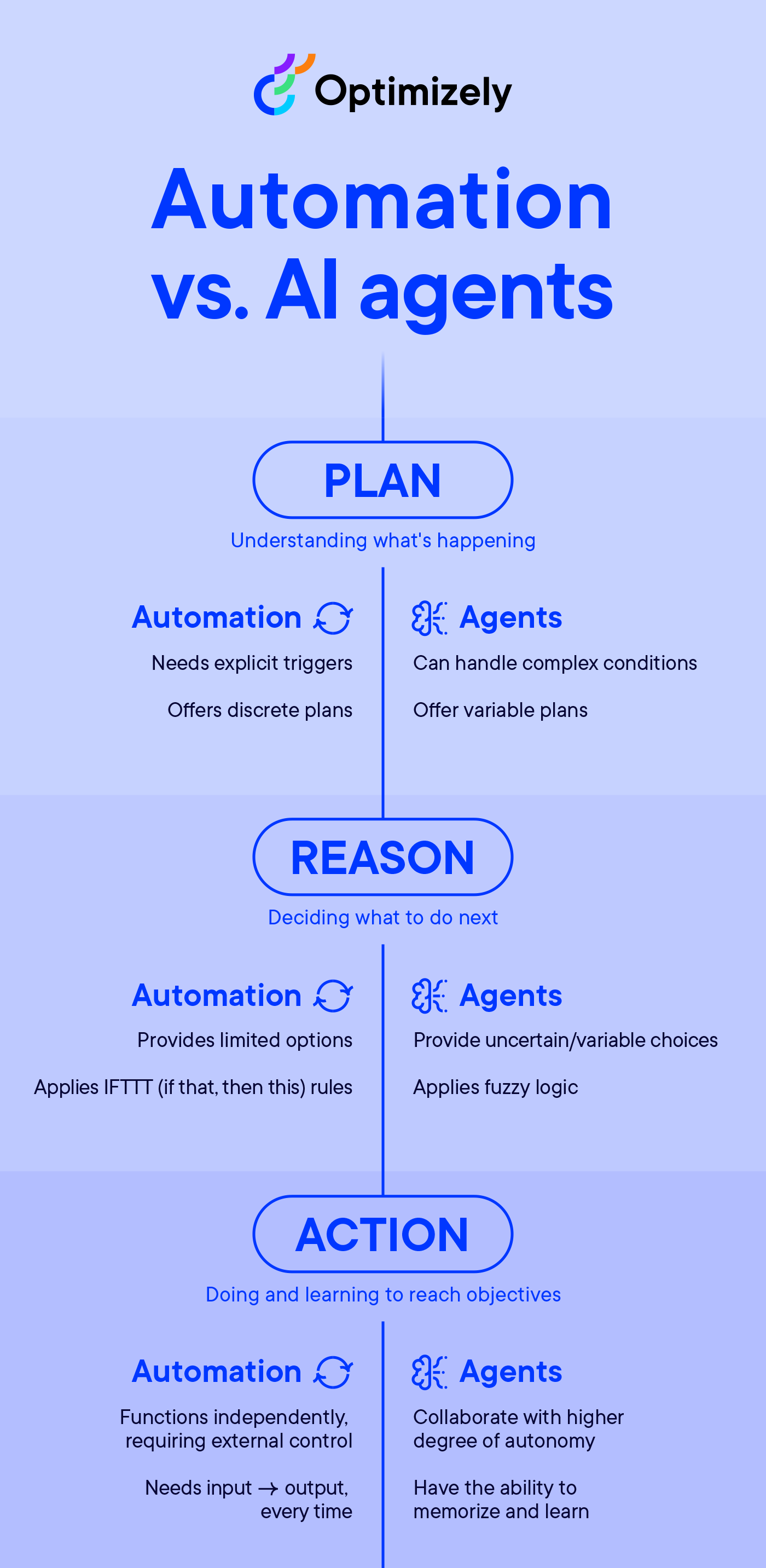

AI agents that anticipate your needs.

Image source: Optimizely

Imagine logging in on Monday morning to find:

- Test ideas for your AI chatbot, already generated and waiting

- Multiple variations ready to go, complete with code

- Suggestions tailored to your specific goals

Think of it as upgrading to an AI partner that sees what needs doing and handles it. While current AI helps when you prompt it, AI agents will work behind the scenes, finding opportunities and doing the groundwork before you even ask.

Specialized agents might soon work together across your AI implementation:

- One scanning customer support tickets to find pain points that AI could solve

- Another designing different AI interface variations to test

- A third creating and running tests of your AI features

- A fourth suggesting new experiment ideas based on results

Still, AI isn't going to replace your brain anytime soon. AI can suggest experiment ideas, but without access to your product's analytics, it will be limited in what it can do. The best ideas will still be grounded in the actual analytics data you have.

Use AI without becoming an AI horror story

AI offers amazing opportunities, but it also brings serious risks. To navigate these challenges without becoming the next "AI gone wrong" headline, make feature experimentation your best friend. You can:

- Test and optimize AI algorithms before they embarrass you in public

- Deploy AI with kill switches that let you pull the plug when things get weird

- Measure whether your AI is helping or just burning through your budget

- Fine-tune AI models without constant engineering dependencies

Last modified: 12/30/2025 7:52:29 PM