How Opendoor improved page load speed with experimentation using Cloudflare and Optimizely

Josiah Grace

At Optimizely, we love highlighting innovative customer use cases that help the community learn new approaches to experimentation. Opendoor documented and shared an amazing use case about how they leveraged Optimizely and Cloudflare to run incremental experiments and take a data-driven approach to determining the best landing pages. After the original Medium post caused a lot of excitement here at Optimizely, we reached out to Opendoor, who gave the green light to re-share it. Thanks to Josiah at Opendoor for the great write-up!

This is the first in a series highlighting Opendoor’s use of Cloudflare Workers. If at any point you want to jump straight to working code, it is available here (and in the implementation section).

Opendoor is coming up on two years of running Cloudflare Workers in production. Cloudflare Workers are a serverless runtime that allows a bit of JavaScript to intercept, execute, and modify incoming requests at the CDN level (see Ashley Williams’ talk at JSConf EU for an excellent overview). Workers are lightweight enough to run before every request and provide powerful building blocks to perform common operations like proxying, setting headers, and the like. At Opendoor, workers were originally deployed to handle a single use case: proxying requests from opendoor.com/w/* to our internal WordPress instance. Our worker allows for bundling of required npm packages, has full test coverage, and is actively iterated on by developers at Opendoor every day. (Bonus! It’s written in TypeScript, and Opendoor ❤s TypeScript). Over time, their utility, ease of use, and continually expanding capabilities led to an explosion of use cases; they now run before every request to an Opendoor web property.
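As a rough illustration of that original proxying use case, here is a minimal sketch. The WordPress origin is a hypothetical placeholder, and passing the path through unchanged is an assumption (the post does not say whether the /w/ prefix is rewritten).

```javascript
// Hypothetical origin for the internal WordPress instance (not from the post).
const WORDPRESS_ORIGIN = "https://wordpress.internal.example";

// Returns the upstream URL for requests under /w/, or null for anything else.
function wordpressUpstream(requestUrl) {
  const url = new URL(requestUrl);
  if (!url.pathname.startsWith("/w/")) {
    return null;
  }
  // Assumption: keep the path and query string, swap only the origin.
  return WORDPRESS_ORIGIN + url.pathname + url.search;
}
```

Inside a worker's fetch handler, a non-null result would be passed to `fetch(upstream, request)`, and every other request would fall through to the normal origin.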

Cloudflare Workers power our landing page infrastructure and enabled us to:

- Run an A/B test across old and new infrastructure

- Run an A/B test across two separate landing page designs

- Run multiple A/B tests on different variations of our new design

- Simplify developer experience on the new infrastructure

All with a pretty small amount of experimentation/routing code. We hope you all are as excited as we are about this approach and the power of workers.

Background

At the beginning of 2019, our landing pages were starting to show their age. They were difficult to iterate and experiment on, loaded slowly (especially on mobile devices), and needed a design refresh. We set out to address these issues and boost our conversion (while measuring everything along the way). After our initial investigation, we decided that we were going to:

- Rewrite our landing pages on new infrastructure (Next.js in this case, chosen for page-speed improvements) with the existing design, and roll this out while ensuring conversion was flat or positive.

- A/B test the new design vs. the old with the new infrastructure

- Run multiple experiments on the winning variation to boost conversion

All of these steps were ordered such that we would first validate our new infrastructure, then our new landing page design, and finally, experiment on variations of the winning design.

Architecture

There are a couple of high-level steps when a request comes in:

- Identify the user (or generate an identity if they’re a new user)

- Choose the experiment group the user is assigned to (or was previously assigned to), and satisfy their request for that content

- Log the user and variation for use in conversion analysis

The two commonalities across all experiments are the experimentation platform (Optimizely) and a distinct user id (held in a first-party cookie on the opendoor.com domain). The three A/B-tested domains are infrastructure, design, and variation. Each of these needs to be rolled out and measured, with either A or B declared the winner. We cannot mix and match these three categories, or there would be too many variables to isolate in analysis. In order to accomplish these goals, we have to decide where the shared infrastructure lives.

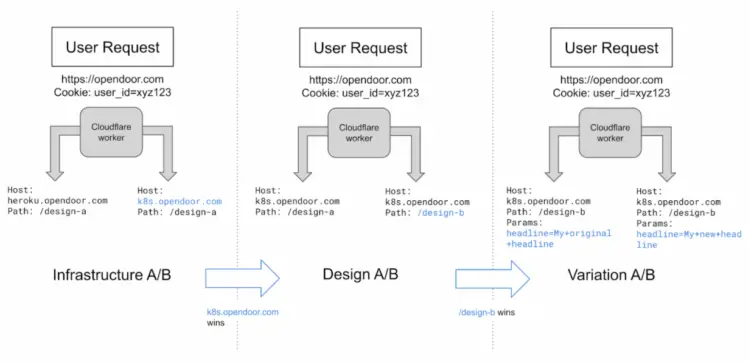

Our first experiment domain is the old vs. new infrastructure. These are two separately deployed services: the first is served from our Heroku-deployed Rails monolith, and the second is a Kubernetes-hosted Node service running server-side rendered Next.js. They differ by host (e.g. heroku.opendoor.com vs. k8s.opendoor.com). Next, our design test happens on the selected serving infrastructure. Each design variant will be on the same host but won’t share much code. Finally, we’ll test variations on the winning design (headlines, calls to action, and the like). These will be mostly the same code with conditional variations. Given these requirements, we have two options.

Option 1: Experiment logic in application code

If the experiment logic lives in the application code, then an infrastructure test would not work, as we’d have to wait until a backend was fulfilling the request before determining which infrastructure to use to fulfill it. If we’re on new infrastructure, we could assign in application code, but then we couldn’t cache requests to our backend, because we’d have to hit our upstream to determine the experiment group. Developers would also have to fake user information and experiment groups while developing.

Option 2: Experiment logic before hitting application code

If the experiment logic runs before application code, we are able to cache requests to our backend and determine which infrastructure to hit before reaching application code. This introduces the requirement that our request URL is the only data dependency for the backend. This requirement also simplifies developer experience, as there’s no need to mock or fake experiment groups while developing. The Next.js backend doesn’t even know who the user is; it renders solely based on the request URL.
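To make the "request URL is the only data dependency" requirement concrete, here is a small sketch of how a backend might derive its render inputs. The `headline` query parameter, the default values, and the function name are hypothetical; the post does not describe the backend's actual URL scheme.

```javascript
// Hypothetical default; the post does not specify parameter names or values.
const DEFAULT_HEADLINE = "control";

// Derive everything the page needs from the URL alone: no cookies, no user id.
function renderInputsFromUrl(requestUrl) {
  const url = new URL(requestUrl);
  return {
    // Path selects the design, e.g. /design-a or /design-b.
    design: url.pathname.replace(/^\//, "") || "design-a",
    // Query parameter selects a variation within that design.
    headline: url.searchParams.get("headline") || DEFAULT_HEADLINE,
  };
}
```

Because two requests with the same URL always produce the same inputs, the rendered response can be cached, and a developer can preview any variant simply by editing the URL.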

Decision: Option 2

Since Cloudflare Workers can run before every request, fetch different backends, read and set user cookies, and load and run the Optimizely JavaScript SDK, we used them to implement option 2. When a request comes in, we check a user’s experiment groups, then format an upstream request with the worker. The worker changes the host for an infrastructure test, the path for a design test, and query parameters for a variation test. Since the request URL is the only data dependency, this is also all cached at the edge.
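The host/path/query mapping can be sketched as a single URL-building function. The two hosts are the examples used in this post; the group names (`control`, `treatment`), the design paths, and the `headline` query parameter are hypothetical. In practice only one of the three dimensions is under test at a time.

```javascript
// Hosts are the post's examples; the group names are hypothetical.
const HOSTS = {
  control: "heroku.opendoor.com",
  treatment: "k8s.opendoor.com",
};

function buildUpstreamUrl(groups) {
  // Infrastructure test -> host (unknown groups fall back to control).
  const host = HOSTS[groups.infra] || HOSTS.control;
  // Design test -> path.
  const design = groups.design || "design-a";
  const url = new URL(`https://${host}/${design}`);
  // Variation test -> query parameter.
  if (groups.variation) {
    url.searchParams.set("headline", groups.variation);
  }
  return url.toString();
}
```

The returned string doubles as a natural cache key: every user in the same combination of groups resolves to the same upstream URL.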

Visualization of selected architecture (option 2)

Implementation

On each request for a landing page, our worker does the following:

- Match the request against the landing page path (GET + $landing_page_path)

- Parse the “anonymous_id” cookie (generate a random uuid if it isn’t present)

- Check what experiment group the user is in (this is a stable assignment based on user-id)

- Fetch the content for the url associated with that group (and return the response to the user, usually cached)

- Log the experiment assignment
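The "stable assignment" step works because the group is a pure function of the user id, so no assignment state needs to be stored. The sketch below illustrates the idea with an FNV-1a hash and an equal split; it is a stand-in for illustration only, since the Optimizely SDK performs its own bucketing, and the function names are hypothetical.

```javascript
// 32-bit FNV-1a hash of a string, returned as an unsigned integer.
function fnv1a(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Deterministically map (experiment, user) to one of the variations.
// Including the experiment key keeps assignments independent across tests.
function assignVariation(experimentKey, userId, variations) {
  const bucket = fnv1a(experimentKey + ":" + userId) % variations.length;
  return variations[bucket];
}
```

Calling `assignVariation("landing_page_infra_test", userId, ["control", "treatment"])` with the same `userId` returns the same group on every request, which is why the id has to persist in the "anonymous_id" cookie.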

A full working example of the concepts in this blog post (including sample infrastructure, design, and variation tests) is available as a Cloudflare Workers template using the following commands. This includes all the configuration necessary to integrate Optimizely with a worker (including a working webpack config, bundled libraries, and cookie management):

A simplified example of the worker code for this is as follows:

```javascript
import { createInstance } from "@optimizely/optimizely-sdk";
import cookie from "cookie";
import { v4 as uuidv4 } from "uuid";

addEventListener("fetch", (event) => {
  event.respondWith(handleLandingPageRequest(event.request));
});

async function handleLandingPageRequest(request) {
  // The Cookie header is null for first-time visitors, so fall back to "".
  const cookies = cookie.parse(request.headers.get("Cookie") || "");
  // Reuse the existing anonymous id, or generate one for a new user.
  const userId = cookies["anonymous_id"] || uuidv4();

  // Load the Optimizely datafile (the experiment configuration).
  const datafile = await fetch(
    "https://cdn.optimizely.com/datafiles/OPTIMIZELY_CDN_FILE.json"
  ).then((res) => res.json());
  const optimizelyClient = createInstance({ datafile });

  // Stable assignment: the same userId always maps to the same variation.
  const variation = optimizelyClient.activate(
    "landing_page_infra_test",
    userId
  );

  let response;
  if (variation === "control") {
    response = await fetch("https://heroku.opendoor.com/design-a");
  } else {
    response = await fetch("https://k8s.opendoor.com/design-a");
  }

  // make headers mutable
  response = new Response(response.body, response);
  // Persist the id via Set-Cookie so later requests get the same assignment.
  response.headers.set("Set-Cookie", cookie.serialize("anonymous_id", userId));
  return response;
}
```

If you have any questions or feedback, feel free to open an issue on the linked repository or reach out on Twitter (@defjosiah).
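The simplified example skips the first step of the implementation, matching the request against the landing page path. A minimal sketch of that check follows; the paths listed are hypothetical placeholders, since the post refers to them only as $landing_page_path.

```javascript
// Hypothetical landing page paths (the post does not list the real ones).
const LANDING_PAGE_PATHS = new Set(["/", "/sell"]);

// True only for GET requests whose path is a known landing page.
function isLandingPageRequest(method, requestUrl) {
  const { pathname } = new URL(requestUrl);
  return method === "GET" && LANDING_PAGE_PATHS.has(pathname);
}
```

A worker would call `isLandingPageRequest(request.method, request.url)` first and pass non-matching requests straight through with `fetch(request)`, so the experiment logic only runs where it is needed.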

Takeaways

In the end, we successfully ran all of our intended A/B tests on this new landing page infrastructure. The Cloudflare Worker logic has been rock solid (partially due to our testing strategy), and we haven’t run into any production issues. Developers love developing against this service, and adding new features and experiments is easy (mostly due to the request URL requirements). The focus of this post is not the actual conversion results, but we managed to boost conversion with the new infrastructure (due to page-speed improvement), release a new landing page design, and finally run a number of successful variation experiments. Compared to implementing a one-off proxy server (nginx or similar) with these features, Cloudflare Workers are a breeze.

Stay Tuned

Our overall workers architecture, testing, deployment, and implementation deserve their own blog post. We also use workers for serving organic content, first-party cookie management, user-agent differentiated responses, “serverless” lambda functions, localization, and frontend application serving. We’d like to share these use cases with you all. We love workers and think you will too!

Ready to get started with server-side experimentation? Reach out to us today about Optimizely Full Stack or sign up for a free Rollouts account.