AI and feature experimentation: Mitigating risk and maximizing value of AI-driven solutions

Elyse Dunbar

In just a few months, artificial intelligence (AI) has taken the world by storm, becoming (arguably) the most prominent buzzword of the year. And as organizations seek to enhance their products, processes, and customer experiences, the potential of AI has garnered significant attention.

However, with great potential comes valid concern, and enterprises are rightfully cautious about the pitfalls and risks of deploying AI: technical limitations, lack of a human touch, misinterpretation, and integration challenges, to name a few.

The solution: feature experimentation. It serves as the perfect tool for enterprises to:

- Quickly optimize generative AI algorithms

- Gain control, governance, and measurement of AI investments

Let’s dive in.

Optimizing generative AI algorithms

AI for the sake of AI means nothing without tangible outcomes, so when it comes to implementation, you have to make sure you do it right. By leveraging feature experimentation, organizations can:

- Reduce time to value

By quickly testing different features and configurations against specific use cases, teams can identify winning variations that drive better user experiences, increase customer satisfaction, and improve business outcomes. This iterative process lets product teams make data-driven decisions as they fine-tune their AI models, significantly reducing the time it takes to see tangible results.

- Reduce deployment costs

Cost efficiency is a crucial consideration for any enterprise, and traditional AI deployment methods often involve significant upfront investment or costly trial and error. Feature experimentation, by contrast, lets organizations identify the most effective configurations and variables without deploying untested algorithms at scale. By focusing on proven, high-value AI models, businesses can minimize development costs and optimize resource allocation.
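Identifying a "winning variation," as described above, usually comes down to a statistical comparison between a control and one or more variations. Below is a minimal sketch of one common approach for a conversion-style metric (for example, the share of users who rate a bot answer as helpful): a textbook two-proportion z-test. The function name and the example counts are hypothetical, and experimentation platforms may use different statistics (such as sequential testing), so treat this as an illustration rather than how any particular product computes results.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare a conversion-style rate between control (a) and a variation (b).

    Returns (z, two_sided_p_value) from a pooled two-proportion z-test.
    A positive z means the variation's rate is higher than the control's.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each group needs at least one user")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups share one true rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups are all-0 or all-1: no evidence of a difference.
        return 0.0, 1.0
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

For example, with illustrative counts, `two_proportion_z_test(200, 5000, 250, 5000)` compares a 4% control rate with a 5% variation rate; the p-value comes out below 0.05, so under this test the variation would be called a winner at the conventional 5% level. This is a fixed-sample test: it assumes you choose the sample size up front rather than repeatedly peeking at results.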

De-risking AI investments

One of the primary concerns surrounding AI deployments is the potential for unforeseen risks. Feature experimentation acts as a crucial guardrail, providing enterprises with the control, governance, and measurement they need to mitigate these risks. By employing feature experimentation, organizations can:

- Test and optimize AI deployments

Instead of releasing AI features to the entire user base, organizations can roll them out to a subset of users. This controlled release allows for real-time monitoring and adjustment based on user feedback, helping teams tune performance while limiting the impact of any issues.

- Safely roll out and roll back capabilities

By conducting experiments within a controlled environment, organizations can safely roll out their chosen AI model, quantify its impact, and roll it back if necessary. This helps ensure the deployment meets expectations without causing significant disruption to users.

- Use data to quantify ROI

Feature experimentation enables teams to collect and analyze data during experiments, providing valuable insights into the impact on business outcomes. By measuring key metrics and comparing experimental results, organizations can gain a deep understanding of the value generated by their investment.
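The controlled release, rollout, and rollback described above are typically implemented with feature flags. Below is a minimal sketch, assuming a hypothetical `Rollout` config and a hash-based bucketing scheme (a common technique, not necessarily what any specific platform uses). Each user is hashed into a stable bucket, so the same user always gets the same experience, and setting `enabled` to `False` acts as a kill switch that rolls the AI feature back for everyone. The names `Rollout`, `bucket`, `is_enabled`, and `BUCKETS` are illustrative.

```python
import hashlib
from dataclasses import dataclass

BUCKETS = 10_000  # granularity of the rollout percentage (0.01% steps)


@dataclass
class Rollout:
    """Hypothetical flag config exposing an AI feature to a share of users."""
    flag_key: str
    percentage: float     # 0-100: share of users who get the AI feature
    enabled: bool = True  # kill switch: set to False to roll back for everyone

    def __post_init__(self) -> None:
        if not 0 <= self.percentage <= 100:
            raise ValueError("percentage must be between 0 and 100")


def bucket(flag_key: str, user_id: str) -> int:
    """Deterministically map a user to a bucket in [0, BUCKETS) for this flag."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def is_enabled(rollout: Rollout, user_id: str) -> bool:
    """Return True if this user should see the AI feature."""
    if not rollout.enabled:
        return False
    threshold = int(rollout.percentage * BUCKETS / 100)
    return bucket(rollout.flag_key, user_id) < threshold
```

Because a user is enabled whenever their bucket falls below the threshold, raising `percentage` from 10 to 50 keeps every user who already had the feature and adds new ones, so nobody flickers in and out of the AI experience during a gradual rollout. Including `flag_key` in the hash means different flags bucket users independently.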

Example use case

Let’s consider a practical scenario: you’re a large retailer that wants to launch a new generative AI chatbot. You have quite a few questions to answer:

Should we use GPT-3 or GPT-4?

Should we train or fine-tune it with additional context from, say, our support knowledge base?

How can we test different models, variables, and parameters before deploying?

How do we measure the value of incorporating generative AI in our customer experience?

Would support tickets go down? Would bot responses actually be helpful for our customers?

With Feature Experimentation, your organization can:

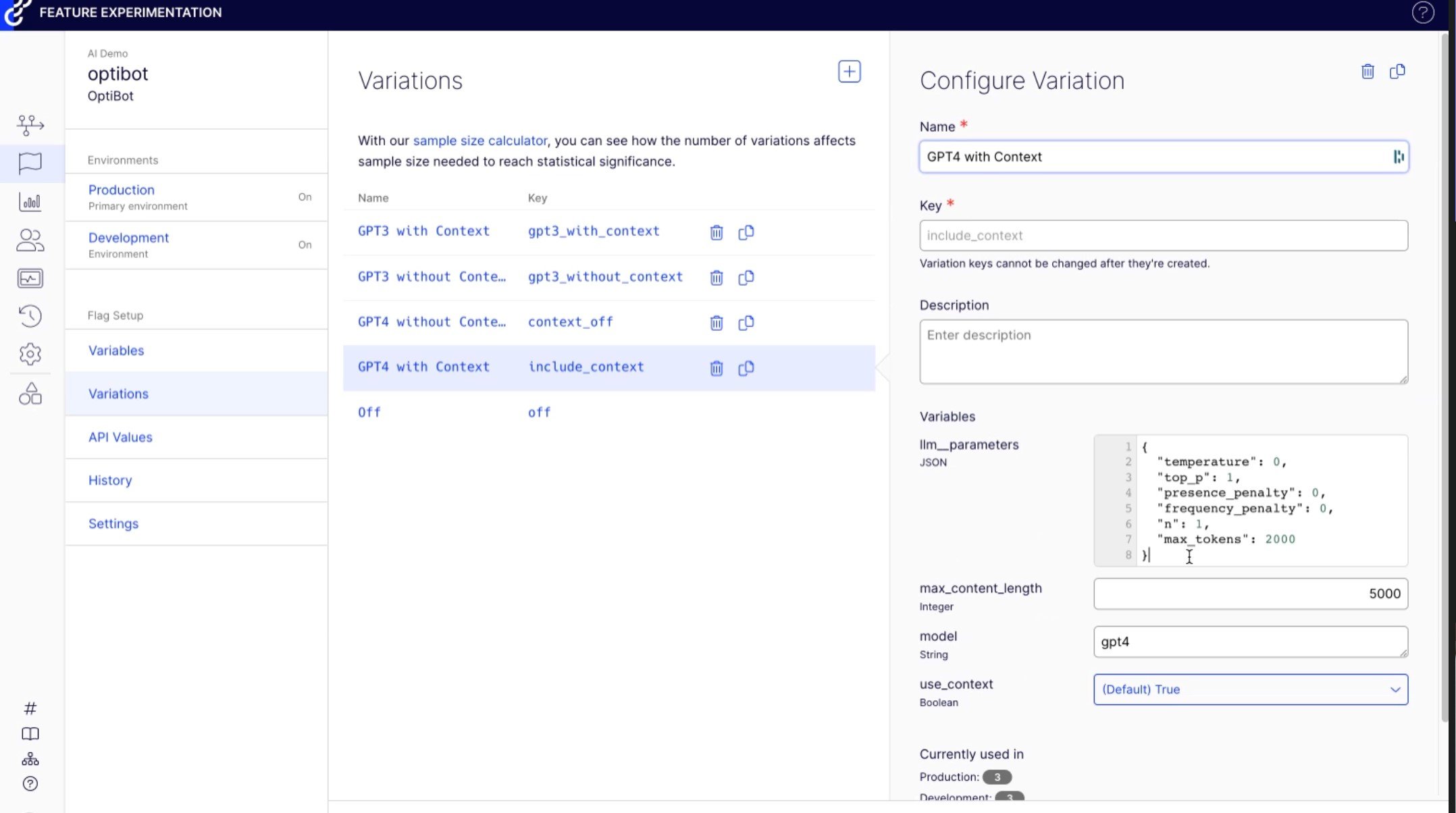

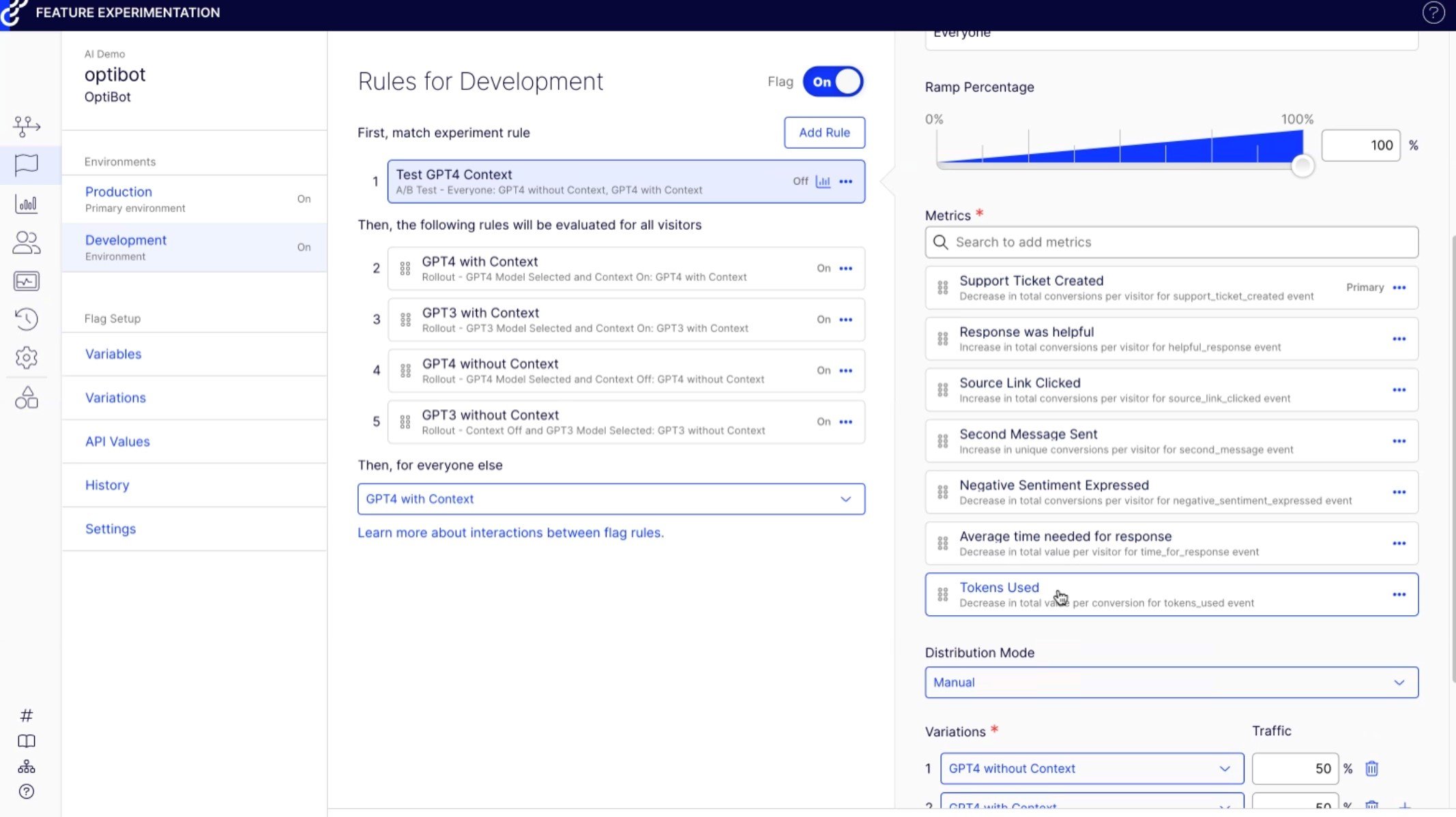

- Test different GPT models against each other, as well as specific configurations within each model.

- Quantify different rollouts and measure their impact against business metrics such as support tickets created and user sentiment.
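For the chatbot scenario, measuring impact against business metrics amounts to grouping chat sessions by the variation each user saw and comparing metrics per variation. The sketch below assumes a hypothetical session log in which each record carries an `opened_ticket` flag and a `sentiment` score. The variation names, model labels, and parameters (`temperature`, `use_support_kb`) are placeholders for whatever your chatbot backend reads, and no model API is called.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

# Hypothetical variation configs. Model labels and parameters are placeholders
# for whatever your chatbot backend reads; nothing here calls a model API.
VARIATIONS = {
    "control": {"model": "gpt-3", "temperature": 0.7, "use_support_kb": False},
    "gpt4_kb": {"model": "gpt-4", "temperature": 0.2, "use_support_kb": True},
}


@dataclass
class ChatSession:
    """One chatbot conversation, tagged with the variation the user saw."""
    user_id: str
    variation: str
    opened_ticket: bool  # did the user file a support ticket afterwards?
    sentiment: float     # e.g. a post-chat rating rescaled to [-1, 1]


def summarize(sessions: list[ChatSession]) -> dict[str, dict[str, float]]:
    """Per-variation metrics: session count, ticket rate, mean sentiment.

    Sessions tagged with a variation not listed in VARIATIONS are ignored,
    and variations with no sessions are omitted from the result.
    """
    grouped: dict[str, list[ChatSession]] = defaultdict(list)
    for session in sessions:
        grouped[session.variation].append(session)

    summary: dict[str, dict[str, float]] = {}
    for name in VARIATIONS:
        group = grouped.get(name, [])
        if not group:
            continue
        summary[name] = {
            "sessions": len(group),
            "ticket_rate": sum(s.opened_ticket for s in group) / len(group),
            "mean_sentiment": mean(s.sentiment for s in group),
        }
    return summary


def relative_change(treatment: float, control: float) -> float:
    """Relative change vs control, e.g. -0.2 means 20% lower than control."""
    if control == 0:
        raise ValueError("control metric is zero; relative change is undefined")
    return (treatment - control) / control
```

A negative `relative_change` in `ticket_rate` for a treatment variation would suggest the bot is deflecting support tickets. Before acting on it, you would still check that the difference is statistically significant and that sentiment has not dropped at the same time.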

Conclusion

Generative AI presents tremendous opportunities for organizations, but it also brings inherent risks. Feature experimentation acts as a safe pair of hands for navigating these challenges. By leveraging experimentation, organizations can optimize their generative AI algorithms, de-risk their investments, and measure outcomes to make confident, data-driven decisions.