Split testing

Split testing simplified

Split testing (also known as A/B testing) is a method of conducting controlled, randomized experiments to improve a defined metric. It is most often used for conversion rate optimization (CRO) and for safely releasing new products.

Unlike multivariate testing, split testing uses a control and usually a single variation. For example, you might use split testing to explore the difference in performance between two different headlines on a landing page.

During the test, you’ll randomly split website visitors or product users into two groups, directing half to the control version and the other half to the variation. You can then measure the impact each version has on your defined metric and determine whether the difference between them is statistically significant.
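One common way to split traffic is to hash a stable identifier, so a returning visitor always sees the same version. The sketch below assumes each visitor has a persistent `user_id` (for example, stored in a cookie). `assign_variant` and the experiment key are hypothetical names for illustration. They are not part of Optimizely's API or any other tool's API.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment_key: str) -> str:
    """Deterministically assign a user to 'control' or 'variation' (50/50 split).

    Hypothetical helper: hashing the experiment key together with the user ID
    keeps each user's assignment stable within one experiment while keeping
    assignments independent across different experiments.
    """
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a bucket in [0, 100).
    bucket = int(digest[:8], 16) % 100
    return "control" if bucket < 50 else "variation"


# Simulate 10,000 visitors; the two groups should come out roughly equal in size.
counts = Counter(assign_variant(f"user-{i}", "headline-test") for i in range(10_000))
print(counts)
```

Because the assignment depends only on the user ID and experiment key, no assignment table has to be stored. Changing the `bucket < 50` threshold would give an uneven split, such as 90/10.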

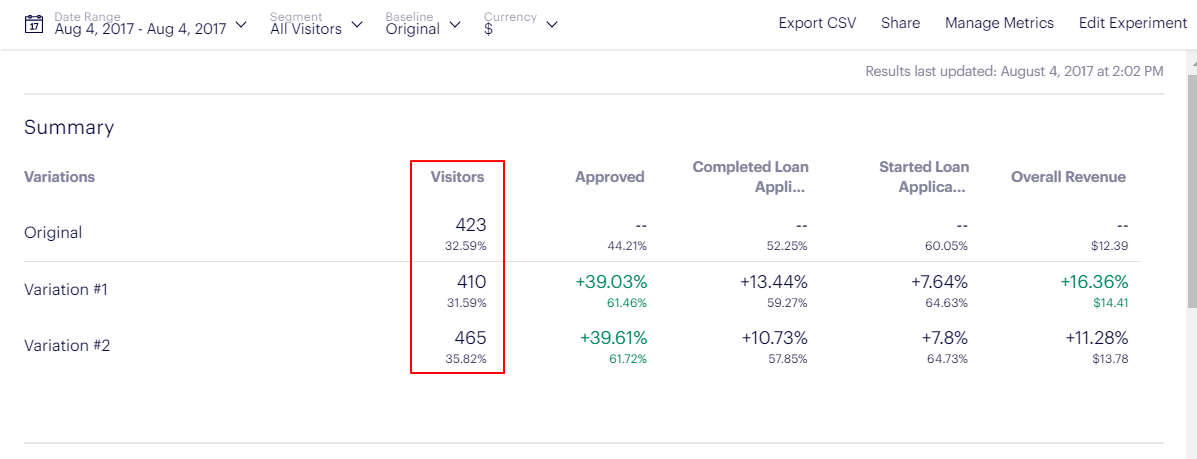

Image source: Optimizely

Split testing is equivalent to performing a controlled experiment, a methodology that can be applied to more than just web pages. The concept of split testing originated with direct mail and print advertising campaigns, which were tracked with a different phone number for each version. You can deploy a split test for banner and text ads, television commercials, email subject lines, new product features, UX changes, and so much more.

Split testing tools allow variations to be targeted at specific groups of visitors, delivering a more tailored and personalized experience. Testing improves these visitors' web experience, as shown by an increased likelihood that they will complete a desired action on the site.

How can you use split testing on your website?

Marketers, UX teams, and web development teams apply split testing to virtually every impactful element on a homepage and across the rest of the website.

Seemingly subjective choices about web design or content can become objective, data-driven decisions through split testing, because the data collected from experiments will either support or undermine a hypothesis about which design will work best.
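To judge whether the data actually supports a hypothesis, one simple option is a two-proportion z-test on conversion rates. The sketch below assumes a binary conversion metric, independent visitors, and samples large enough for the normal approximation to hold. `two_proportion_z_test` is a hypothetical helper, and dedicated testing tools may use different statistical methods.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of control (A) and variation (B).

    Returns (z, two-sided p-value). Hypothetical helper for illustration.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)), which equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Control: 200 conversions from 5,000 visitors; variation: 250 from 5,000.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

In this example, a 4% control rate is compared with a 5% variation rate. The p-value comes out below 0.05, which would conventionally count as significant at the 5% level.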

Within web pages, nearly every element can be changed and its effect measured with a split test. Marketers and web developers find split testing especially valuable when choosing:

- Visual elements: pictures, videos, and colors

- Text: headlines, calls to action and descriptions

- Layout: above-the-fold content; button color, text, or placement; signup forms

- Ecommerce elements: pricing, limited offers, additional value

- Visitor flow: how a website user gets from point A to B

For example, testing changes made to an online checkout flow would help to determine what factors are most likely to increase conversions from one page to the next. Ideally, these will lead to increased orders or increased order value for the website owner.
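To see where a checkout variation gains or loses visitors, you can compare the conversion rate from each step to the next for both versions. The sketch below uses made-up visitor counts for a hypothetical four-step checkout. `step_conversion_rates` is an illustrative helper and is not part of any testing tool.

```python
def step_conversion_rates(step_counts: list[int]) -> list[float]:
    """Return the fraction of visitors who continue from each funnel step to the next."""
    rates = []
    for current, following in zip(step_counts, step_counts[1:]):
        # Guard against division by zero when no one reached a step.
        rates.append(following / current if current else 0.0)
    return rates


# Made-up counts for a hypothetical checkout: cart -> shipping -> payment -> confirmed
steps = ["cart -> shipping", "shipping -> payment", "payment -> confirmed"]
control = [1000, 620, 480, 400]
variation = [1000, 700, 530, 430]

for name, c_rate, v_rate in zip(steps, step_conversion_rates(control), step_conversion_rates(variation)):
    print(f"{name}: control {c_rate:.1%}, variation {v_rate:.1%}")
```

A step-by-step breakdown points to the part of the flow a change affects. Each step's difference would still need a significance check before you draw conclusions from it.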

The power of experimentation isn’t limited to CRO on web pages. Marketers also split test email subject lines, banner and text ads, and other campaign assets.

The power of split testing for product managers and product development teams

Split testing isn’t limited to digital marketing. Product managers and product development teams can and should use it to test any meaningful change they intend to ship.

The power of split testing within product development is that it provides a tool for truly user-centric decision-making. Just as once-subjective decisions about marketing images or headlines can now be made with data, so too can changes to UX or functionality be based on user behavior.

In addition to keeping the user at the center of the product, experimentation methods like split testing help you reduce the risk of shipping changes by testing them early and improving them continually. They let you build, deploy, test, and improve quickly and, more importantly, communicate results clearly.

You can use split testing for:

- Developing and updating user flows

- Testing the impact of product messaging

- Understanding the demand for a new feature

Best practices for split testing

The split testing process doesn’t need to be complicated or time-consuming. To run a successful split test, all you need is a hypothesis, an adequate sample size, and a split testing tool to collect the data for you.

Once you have these key elements in place, you can refine your experimentation process with the following best practices:

- Choose specific metrics in your hypothesis - Experiment using the CRO metrics that matter most to your goals (e.g., bounce rate, user path, click-through rate, or form conversions).

- Keep the focus on your target audience - The best results come from the most relevant data, so target the right segment of your audience rather than casting a wide net. For website traffic, this means segmenting visitors by the most relevant attribute, such as geographic location, age range, or another predefined criterion that matters most to you. Within a product, you might segment by user persona, geographic location, or power-user status.

- Aim for the global maximum - Test with the overarching goal of the website or product in mind, not just the goals of individual pages or features. Too much focus on one element creates a lopsided effect. For example, endlessly testing a homepage without considering other important pages, such as product pages, can hurt the user experience by disrupting the journey from landing on the site to converting. Test changes consistently throughout the visitor flow to improve conversions at every step, and consider building a reusable template for testing.

- Estimate your sample size - When planning a test, check whether it will collect enough data to reach a conclusive result.
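A common way to estimate sample size before a test is the normal-approximation formula for comparing two proportions. The sketch below assumes a two-sided test at significance level `alpha`, a fixed test length rather than sequential monitoring, and rates expressed as absolute fractions. The function and parameter names are hypothetical, and dedicated tools may calculate this differently.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate: float, min_detectable_effect: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH group to detect an absolute lift in conversion rate.

    Hypothetical helper using the standard two-proportion normal approximation.
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_effect
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# 4% baseline conversion rate; detect a lift to 5% with 80% power at alpha = 0.05.
print(sample_size_per_group(0.04, 0.01))
```

For a 4% baseline and a one-percentage-point minimum detectable effect, this gives about 6,745 visitors per group. Halving the detectable effect roughly quadruples the required sample, so small expected lifts need much more traffic.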

Habitual testing helps build a culture of data-informed decision-making that takes audience preferences into account. Each click on a website or action taken within a product is a valuable data point. Conflicting opinions can be put to the test with split testing, and it's website visitors and users who should inform the final decision on the "best" design.

Do you need split testing tools to run experiments?

Split testing tools are now often built right into your other marketing tools. However, you can also build a DIY system for your website or landing page using the tech and data stacks you already have, like Google Analytics 4 or heatmap tools like Hotjar.

Investing in a dedicated split testing tool can help you build a wider culture of experimentation through the power of automation, templates, and an overall easier testing process. Optimizely Web Experimentation is a tool that makes creating, running, and reporting split tests easy.

Are you ready to dive deeper into the power of split testing? Download our report featuring 37 winning split-testing case studies to see how Optimizely customers have improved their CRO through the power of experimentation.